Convolutional Neural Networks: Concepts

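In this chapter, I think the most important thing is to understand the concept of a convolutional neural network well. Once you have that roughly down, the code that follows will be easy to read.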

I recommend this video on convolutional neural networks.

Beyond that, you can search YouTube or Bilibili for the keywords to deepen your understanding of the concept. I won't repeat it here.

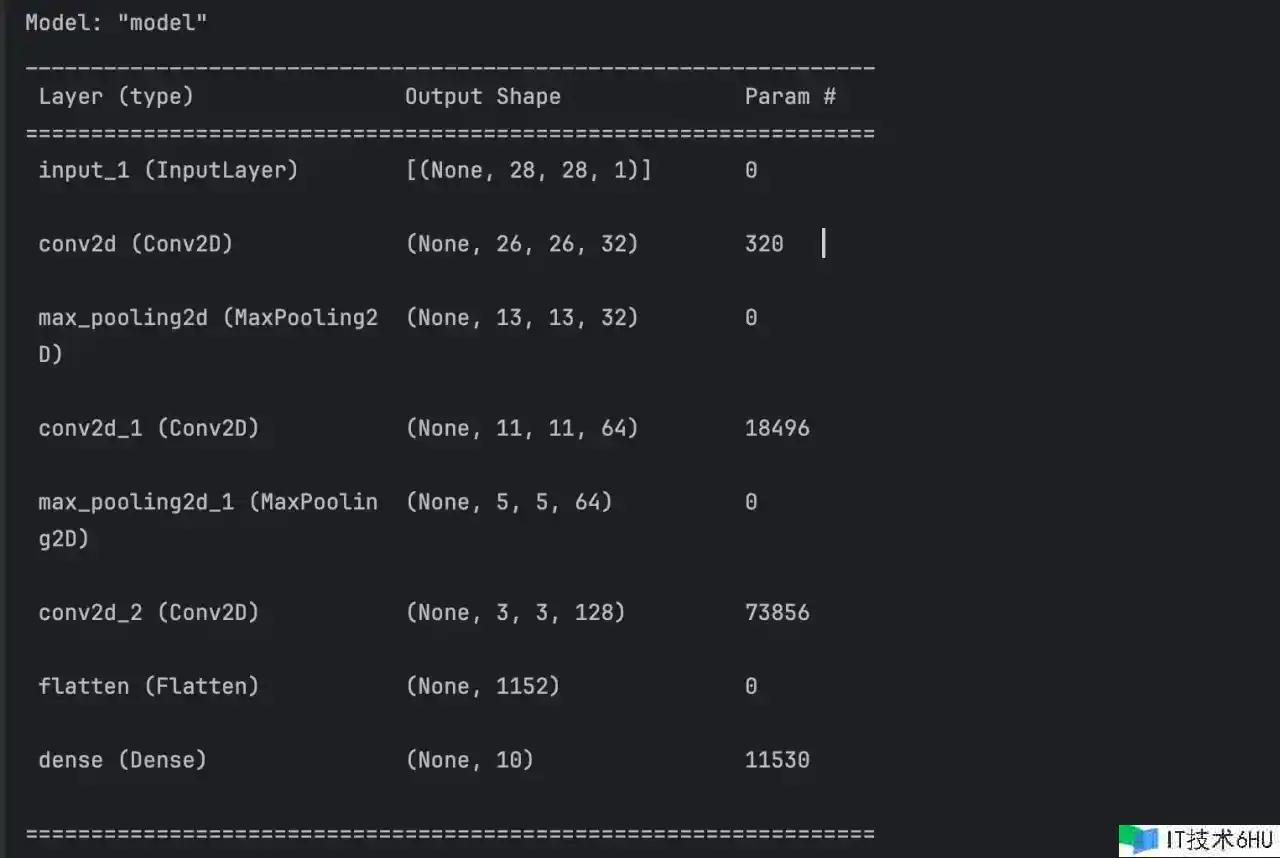
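To make the idea concrete, here is a minimal NumPy sketch (an illustration, not how Keras implements it internally) of what a single 3×3 filter followed by 2×2 max pooling does to a single-channel 28×28 input. Like Keras `Conv2D`, the sliding-window product does not flip the kernel. The random image and kernel are placeholders.

```python
import numpy as np


def conv2d_valid(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Slide `kernel` over a single-channel `image` with stride 1 and no padding."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w), dtype=image.dtype)
    for i in range(out_h):
        for j in range(out_w):
            # Element-wise product of the kernel and the patch under it, summed up
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


def max_pool2d(feature_map: np.ndarray, size: int = 2) -> np.ndarray:
    """Non-overlapping max pooling (stride == size); a leftover row/column is dropped."""
    h = feature_map.shape[0] // size
    w = feature_map.shape[1] // size
    trimmed = feature_map[:h * size, :w * size]
    return trimmed.reshape(h, size, w, size).max(axis=(1, 3))


image = np.random.rand(28, 28).astype("float32")
kernel = np.random.rand(3, 3).astype("float32")
feature_map = np.maximum(conv2d_valid(image, kernel), 0)  # ReLU activation
pooled = max_pool2d(feature_map)
print(feature_map.shape, pooled.shape)  # (26, 26) (13, 13)
```

The loops make the shape arithmetic visible. A k×k window fits n − k + 1 times along a side of length n, and pooling with size 2 halves each side.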

Instantiating a small convolutional neural network

```python
from tensorflow import keras
from tensorflow.keras import layers

if __name__ == '__main__':
    inputs = keras.Input(shape=(28, 28, 1))
    # Kernel sizes are usually 3x3 or 5x5.
    # With a 3x3 kernel and no padding, one Conv2D shrinks 28x28 down to 26x26
    x = layers.Conv2D(filters=32, kernel_size=3, activation="relu")(inputs)
    # Max pooling downsamples; pool_size=2 halves the width and height
    x = layers.MaxPooling2D(pool_size=2)(x)
    x = layers.Conv2D(filters=64, kernel_size=3, activation="relu")(x)
    x = layers.MaxPooling2D(pool_size=2)(x)
    x = layers.Conv2D(filters=128, kernel_size=3, activation="relu")(x)
    # Flatten the 3D output into 1D so that a Dense layer can be added
    x = layers.Flatten()(x)
    outputs = layers.Dense(10, activation="softmax")(x)
    model = keras.Model(inputs=inputs, outputs=outputs)
    model.summary()
```

If you've watched the video recommended above, you'll understand why the model's output shapes look like this.

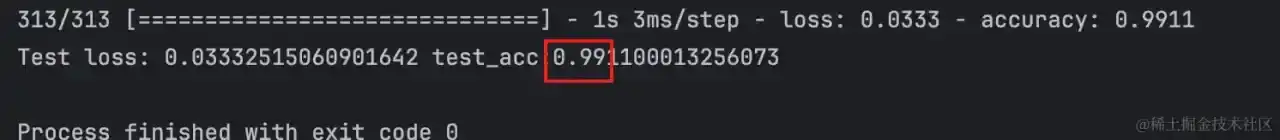
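The shapes follow from two rules: a 3×3 convolution with the default `padding="valid"` shrinks each side by 2, and 2×2 max pooling halves it, rounding down. The plain-Python sketch below (the helper names are made up for illustration) retraces the model above. Its output should line up with the Output Shape column of `model.summary()`, minus the batch dimension.

```python
def conv_out(size, kernel_size=3, stride=1):
    # padding="valid" (the Keras default): the window must fit entirely inside the input
    return (size - kernel_size) // stride + 1


def pool_out(size, pool_size=2):
    # MaxPooling2D's stride defaults to pool_size; a leftover row/column is dropped
    return size // pool_size


shapes = []
size = 28
# (filters, followed by MaxPooling2D?) for each Conv2D in the model above
for filters, pooled in ((32, True), (64, True), (128, False)):
    size = conv_out(size)
    shapes.append(("Conv2D", (size, size, filters)))
    if pooled:
        size = pool_out(size)
        shapes.append(("MaxPooling2D", (size, size, filters)))

# Flatten multiplies height, width and channels of the last feature map
flatten_units = size * size * shapes[-1][1][2]
for name, shape in shapes:
    print(name, shape)
print("Flatten", flatten_units)
```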

Training the convnet on MNIST images

```python
from tensorflow.keras.datasets import mnist

# Continues from the model built above
(images, labels), (test_images, test_labels) = mnist.load_data()
# Add a channel axis and scale pixel values from 0-255 to 0-1
images = images.reshape(60000, 28, 28, 1).astype('float32') / 255
test_images = test_images.reshape(10000, 28, 28, 1).astype('float32') / 255
model.compile(optimizer="rmsprop", loss="sparse_categorical_crossentropy", metrics=["accuracy"])
model.fit(images, labels, epochs=5, batch_size=64)
test_loss, test_acc = model.evaluate(test_images, test_labels)
print(f"Test loss: {test_loss} test_acc:{test_acc}")
```

Test accuracy reaches about 99.1%, much better than the densely connected network we built earlier.

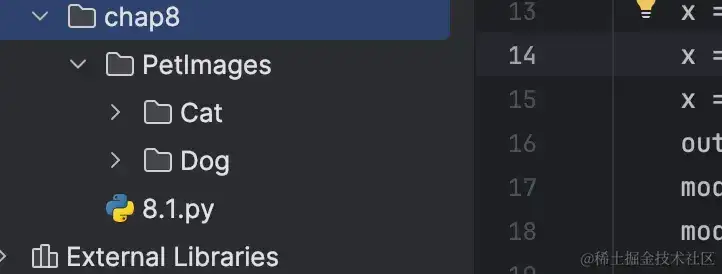
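MNIST labels are plain integers 0 to 9, which is why `compile` uses `sparse_categorical_crossentropy` rather than `categorical_crossentropy` (which expects one-hot targets). A minimal NumPy sketch of both, assuming softmax outputs as input; the epsilon clipping is a common guard against `log(0)`, and Keras's own implementation differs in details:

```python
import numpy as np


def sparse_categorical_crossentropy(labels, probs, eps=1e-7):
    """Mean of -log(probability assigned to the true class).

    labels: integer class ids, shape (N,)
    probs:  softmax outputs, shape (N, num_classes)
    """
    probs = np.clip(probs, eps, 1.0 - eps)  # avoid log(0)
    picked = probs[np.arange(len(labels)), labels]
    return float(np.mean(-np.log(picked)))


def categorical_crossentropy(one_hot, probs, eps=1e-7):
    """The same loss, but with one-hot encoded targets."""
    probs = np.clip(probs, eps, 1.0 - eps)
    return float(np.mean(-np.sum(one_hot * np.log(probs), axis=1)))


labels = np.array([0, 2])
probs = np.array([[0.9, 0.05, 0.05],
                  [0.1, 0.2, 0.7]])
one_hot = np.eye(3)[labels]
print(sparse_categorical_crossentropy(labels, probs))
print(categorical_crossentropy(one_hot, probs))  # same value
```

Both functions compute the same number. The sparse variant just skips building the one-hot matrix.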

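The cats-vs-dogs classification problem

The data is downloaded from Kaggle: the Kaggle Cats and Dogs dataset.

The original folders:

Each folder holds over ten thousand images of cats or dogs.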

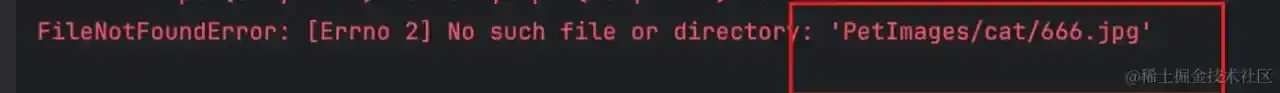

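Note that this dataset has a problem: its file numbering is broken in places.

This makes your training code fail, and the cause is hard to track down at first. So when you download training data from Kaggle, especially image data, it's best to write a script that walks through it and checks that every file is actually usable.

Remember: data on Kaggle is not entirely trustworthy.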
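As a starting point for such a check, here is a sketch using only the standard library. It assumes the files are JPEGs and uses a crude header test: look for the `JFIF` marker in the first bytes, a heuristic the official Keras "Image classification from scratch" example also uses to drop corrupted files from this dataset. It does not fully decode the images. The hypothetical `find_missing_indices` checks the numbering gaps, since `make_subset` below assumes every index exists.

```python
import pathlib


def find_bad_images(root):
    """List .jpg files under `root` that are empty or whose header lacks a JFIF marker.

    Heuristic only: a valid JPEG written with an Exif header instead of JFIF would
    also be flagged, so review the list before deleting anything.
    """
    bad = []
    for path in sorted(pathlib.Path(root).rglob("*.jpg")):
        with open(path, "rb") as f:
            header = f.read(10)
        if b"JFIF" not in header:  # also true for empty files
            bad.append(path)
    return bad


def find_missing_indices(folder, start, end):
    """Return the indices i in [start, end) for which `folder/i.jpg` does not exist."""
    folder = pathlib.Path(folder)
    return [i for i in range(start, end) if not (folder / f"{i}.jpg").exists()]


if __name__ == "__main__" and pathlib.Path("PetImages").is_dir():
    for path in find_bad_images("PetImages"):
        print("suspicious:", path)
    # The raw Kaggle download names its folders Cat and Dog
    for category in ("Cat", "Dog"):
        missing = find_missing_indices(pathlib.Path("PetImages") / category, 0, 2500)
        print(category, "missing:", missing)
```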

Of course, you can also use the data I've already cleaned up (GitHub link).

Organizing the data

```python
import os, shutil, pathlib

original_dir = pathlib.Path("PetImages")
new_base_dir = pathlib.Path("cats_vs_dogs_small")

def make_subset(subset_name, start_idx, end_idx):
    # Copy images start_idx..end_idx-1 of each category into new_base_dir/subset_name/category.
    # Assumes the category folders are named cat/ and dog/ (the raw download uses Cat/ and Dog/)
    for category in ("cat", "dog"):
        dst_dir = new_base_dir / subset_name / category
        os.makedirs(dst_dir, exist_ok=True)
        fnames = [f"{category}/{i}.jpg" for i in range(start_idx, end_idx)]
        for fname in fnames:
            shutil.copyfile(src=original_dir / fname, dst=new_base_dir / subset_name / fname)

if __name__ == '__main__':
    make_subset("train", 0, 1000)
    make_subset("validation", 1000, 1500)
    make_subset("test", 1500, 2500)
```

This organizes the data into three splits: training, validation and test.

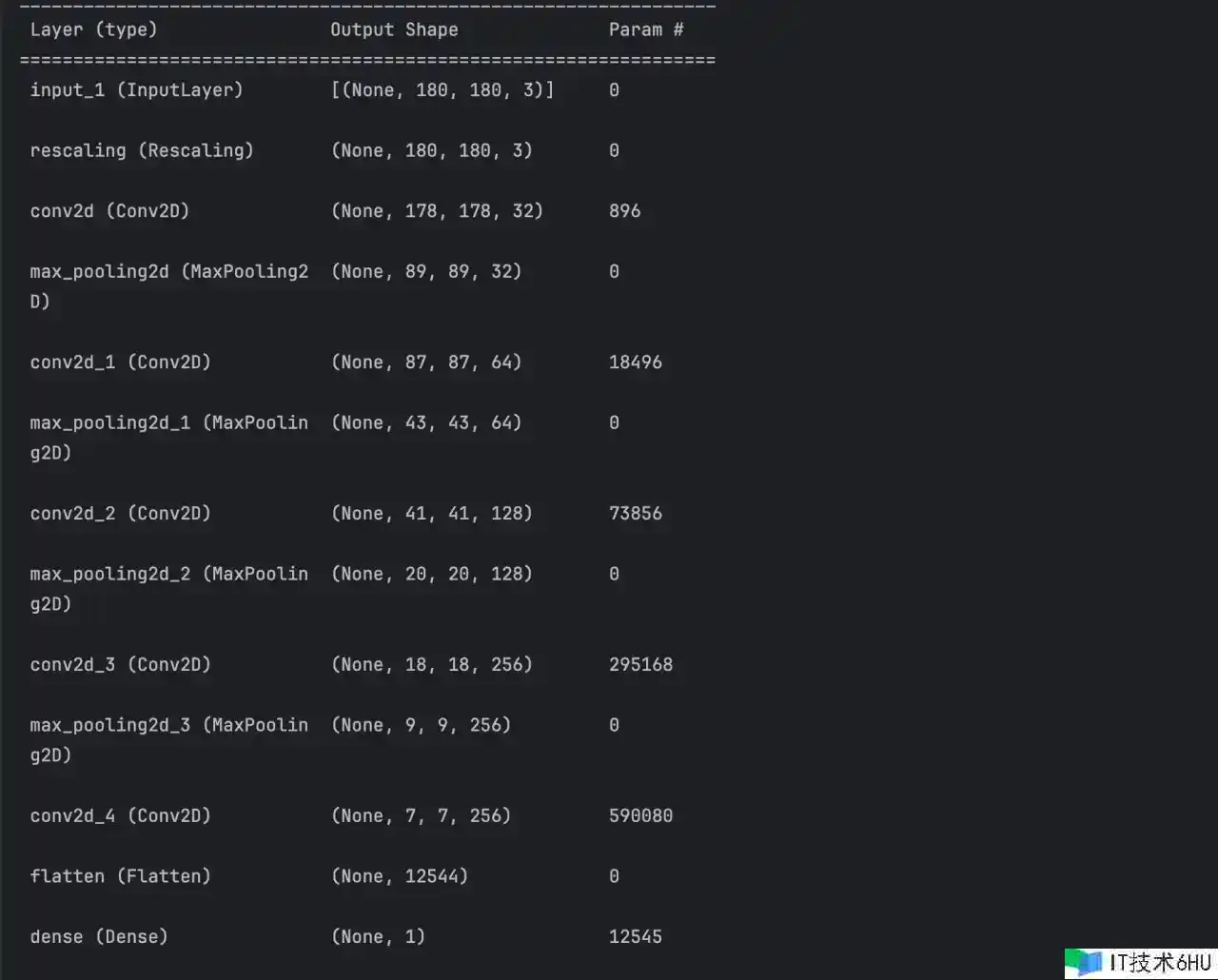
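With the ranges above ([0, 1000), [1000, 1500) and [1500, 2500)), each category gets 1,000 training, 500 validation and 1,000 test images. The ranges don't overlap, so no image lands in two subsets. A small standard-library sketch (a hypothetical helper) to confirm the copy actually produced those counts:

```python
import pathlib


def count_split(base_dir, subsets=("train", "validation", "test"), categories=("cat", "dog")):
    """Count the .jpg files in base_dir/<subset>/<category>; missing folders count as 0."""
    counts = {}
    for subset in subsets:
        for category in categories:
            folder = pathlib.Path(base_dir) / subset / category
            counts[(subset, category)] = len(list(folder.glob("*.jpg"))) if folder.is_dir() else 0
    return counts


# Per-category sizes implied by the index ranges passed to make_subset
EXPECTED = {"train": 1000, "validation": 500, "test": 1000}

if __name__ == "__main__":
    for (subset, category), n in count_split("cats_vs_dogs_small").items():
        status = "ok" if n == EXPECTED[subset] else f"expected {EXPECTED[subset]}"
        print(f"{subset}/{category}: {n} ({status})")
```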

Building the model

```python
from tensorflow import keras
from tensorflow.keras import layers

inputs = keras.Input(shape=(180, 180, 3))
# Rescale pixel values from the 0-255 range into [0, 1]
x = layers.Rescaling(1. / 255)(inputs)
x = layers.Conv2D(filters=32, kernel_size=3, activation="relu")(x)
x = layers.MaxPooling2D(pool_size=2)(x)
x = layers.Conv2D(filters=64, kernel_size=3, activation="relu")(x)
x = layers.MaxPooling2D(pool_size=2)(x)
x = layers.Conv2D(filters=128, kernel_size=3, activation="relu")(x)
x = layers.MaxPooling2D(pool_size=2)(x)
x = layers.Conv2D(filters=256, kernel_size=3, activation="relu")(x)
x = layers.MaxPooling2D(pool_size=2)(x)
x = layers.Conv2D(filters=256, kernel_size=3, activation="relu")(x)
x = layers.Flatten()(x)
# A single sigmoid unit: binary classification (cat vs. dog)
outputs = layers.Dense(1, activation="sigmoid")(x)
model = keras.Model(inputs=inputs, outputs=outputs)
model.summary()
model.compile(loss="binary_crossentropy", optimizer="rmsprop", metrics=["accuracy"])
```

Let's take a look at what this model looks like (printed by `model.summary()`).
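The parameter counts in the summary can be checked by hand. A Conv2D layer has (k·k·input_channels + 1)·filters parameters (one bias per filter), and a Dense layer has (inputs + 1)·units. The plain-Python sketch below (hypothetical helper names) retraces this model under the rule that every Conv2D except the last is followed by 2×2 max pooling. Rescaling and pooling have no parameters.

```python
def conv_out(size, kernel_size=3):
    # Conv2D with the default padding="valid" and stride 1
    return size - kernel_size + 1


def conv_params(kernel_size, in_channels, filters):
    # One k x k x in_channels kernel per filter, plus one bias per filter
    return (kernel_size * kernel_size * in_channels + 1) * filters


def trace(input_size, in_channels, filters_list, dense_units):
    """Return (final side length, Flatten size, total parameter count)."""
    size, channels, total = input_size, in_channels, 0
    for i, filters in enumerate(filters_list):
        size = conv_out(size)
        total += conv_params(3, channels, filters)
        channels = filters
        if i < len(filters_list) - 1:
            size //= 2  # max pooling halves each side, rounding down
    flat = size * size * channels
    total += (flat + 1) * dense_units  # Dense layer weights + biases
    return size, flat, total


print(trace(180, 3, [32, 64, 128, 256, 256], dense_units=1))  # (7, 12544, 991041)
```

Almost all of the roughly one million parameters sit in the last two Conv2D layers, while the single-unit Dense head adds only 12,545.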

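Generating batches of data

So far we've sorted the images into folders, but that isn't enough for TensorFlow yet. We still have to read the files and turn them into 180×180 image tensors.

Fortunately, TensorFlow provides a convenient API that does all of this for us.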

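```python
import pathlib
from tensorflow.keras.utils import image_dataset_from_directory

new_base_dir = pathlib.Path("cats_vs_dogs_small")
# image_dataset_from_directory shuffles the images, resizes them to a uniform size,
# and packs them into batches; labels come from the subdirectory names
train_dataset = image_dataset_from_directory(new_base_dir / "train", image_size=(180, 180), batch_size=32)
validation_dataset = image_dataset_from_directory(new_base_dir / "validation", image_size=(180, 180), batch_size=32)
test_dataset = image_dataset_from_directory(new_base_dir / "test", image_size=(180, 180), batch_size=32)
```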

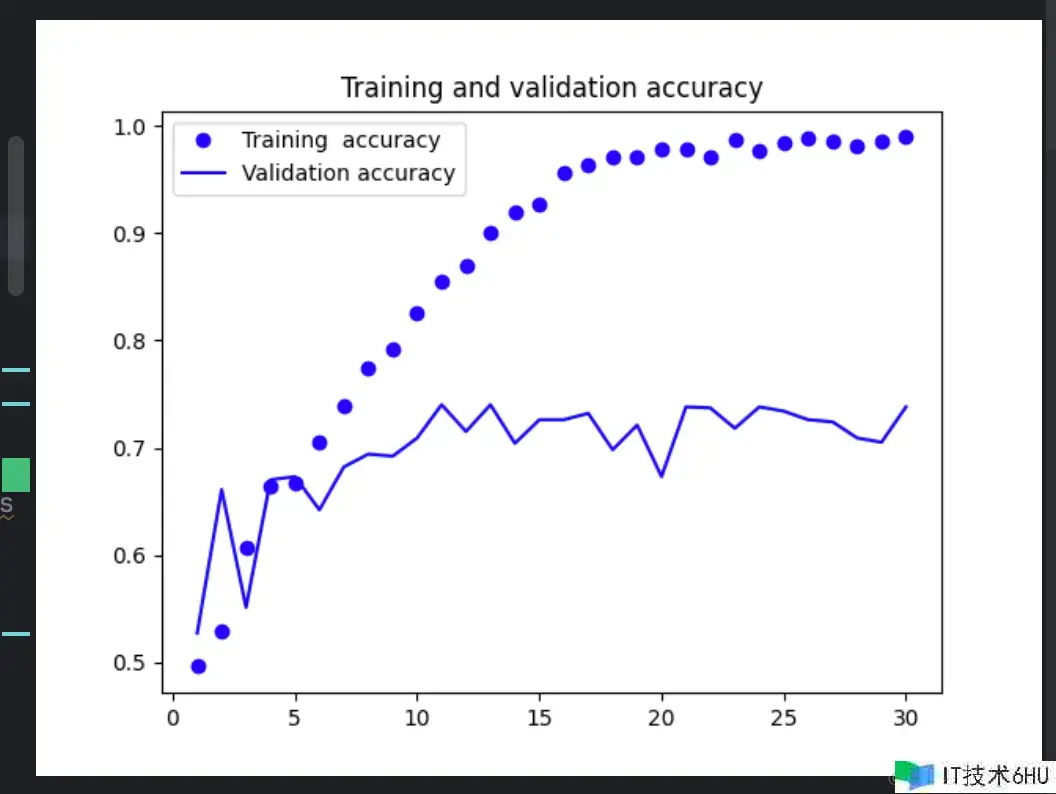
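Before any pixels are decoded, the function does some bookkeeping, which roughly looks like the plain-Python sketch below. These are hypothetical helpers, not the Keras source. With the default `labels="inferred"`, class names come from the subdirectory names in alphanumeric order, so `cat` → 0 and `dog` → 1. That is also why the single sigmoid output can be read as "probability of dog". The real function additionally decodes each image and resizes it to `image_size`.

```python
import pathlib
import random

# File types image_dataset_from_directory accepts
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png", ".bmp", ".gif"}


def infer_labels(directory):
    """Class names = sorted subdirectory names; label = index of the class name."""
    directory = pathlib.Path(directory)
    class_names = sorted(p.name for p in directory.iterdir() if p.is_dir())
    samples = []
    for label, name in enumerate(class_names):
        for path in sorted((directory / name).iterdir()):
            if path.suffix.lower() in IMAGE_SUFFIXES:
                samples.append((path, label))
    return class_names, samples


def make_batches(samples, batch_size=32, seed=None):
    """Shuffle the (path, label) pairs, then cut them into batches; the last may be smaller."""
    samples = list(samples)
    random.Random(seed).shuffle(samples)
    return [samples[i:i + batch_size] for i in range(0, len(samples), batch_size)]
```

For the training split above (2,000 images, `batch_size=32`), this gives 62 full batches plus one batch of 16.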

Training and checking the results

```python
from matplotlib import pyplot as plt
from tensorflow import keras

# Use a callback to save the model after each epoch, keeping only the best state.
# Note save_best_only and monitor: the file is overwritten only when val_loss improves
callbacks = [
    keras.callbacks.ModelCheckpoint(filepath="convent_from_scratch.keras", save_best_only=True,
                                    monitor="val_loss")
]
history = model.fit(train_dataset, epochs=30, validation_data=validation_dataset, callbacks=callbacks)

accuracy = history.history["accuracy"]
val_accuracy = history.history["val_accuracy"]
loss = history.history["loss"]
val_loss = history.history["val_loss"]
epochs = range(1, len(accuracy) + 1)

# Dots for training, solid line for validation
plt.plot(epochs, accuracy, "bo", label="Training accuracy")
plt.plot(epochs, val_accuracy, "b", label="Validation accuracy")
plt.title("Training and validation accuracy")
plt.legend()
plt.figure()
plt.plot(epochs, loss, "bo", label="Training loss")
plt.plot(epochs, val_loss, "b", label="Validation loss")
plt.title("Training and validation loss")
plt.legend()
plt.show()
```

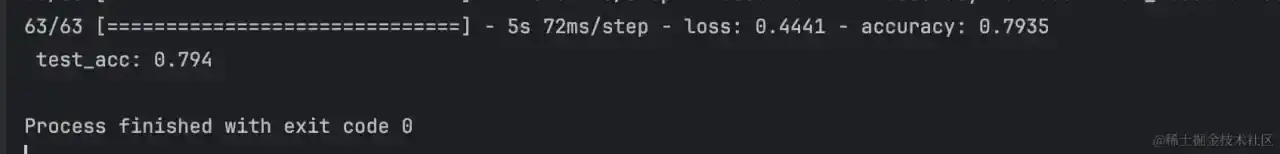

Training accuracy eventually gets close to 100%, while validation accuracy stays around 70%. This is clear overfitting.

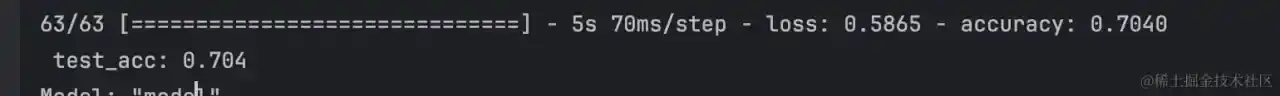
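Because of `save_best_only=True` and `monitor="val_loss"`, the checkpoint file ends up holding the epoch with the lowest validation loss, not the last epoch. With this much overfitting, that usually means an early epoch. A plain-Python sketch of that bookkeeping (a hypothetical helper; the loss values are made up):

```python
def checkpoint_epochs(val_losses):
    """Return the 1-based epochs at which a save_best_only checkpoint
    monitoring val_loss would (over)write the model file."""
    best = float("inf")
    saved = []
    for epoch, loss in enumerate(val_losses, start=1):
        if loss < best:  # for a loss, lower counts as an improvement
            best = loss
            saved.append(epoch)
    return saved


saves = checkpoint_epochs([0.69, 0.62, 0.58, 0.61, 0.55, 0.70])
print(saves, "-> file on disk is from epoch", saves[-1])
```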

Let's check the accuracy on the test data.

```python
test_model = keras.models.load_model("convent_from_scratch.keras")
test_loss, test_acc = test_model.evaluate(test_dataset)
print(f" test_acc: {test_acc:.3f}")
```

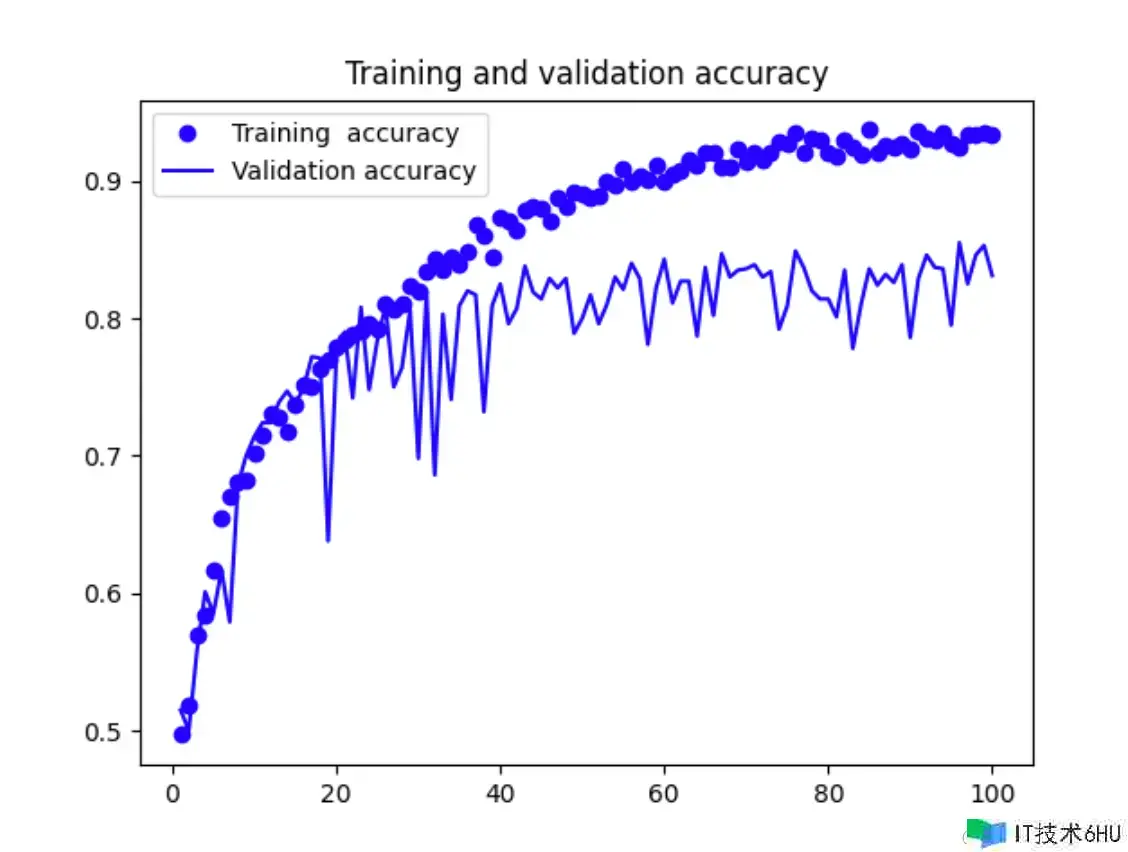

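Data augmentation

Data augmentation generates more training data from the existing training samples. It does this by applying random transformations that still produce believable-looking images.

The goal of data augmentation is that, during training, the model never sees the exact same picture twice.

In practice, it's a way to enlarge your training data when you don't have enough of it.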
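Take `RandomFlip("horizontal")` as an example. The NumPy sketch below is our own helper, not Keras code. Each image independently gets a coin flip and is mirrored left to right on heads, so every epoch sees a slightly different version of the training set. Keras applies these augmentation layers only during training; at inference they pass images through unchanged.

```python
import numpy as np


def random_horizontal_flip(batch, rng):
    """Mirror each image in `batch` (shape N, H, W, C) left-to-right with probability 0.5.

    Returns the augmented copy and the boolean mask of which images were flipped.
    """
    flip = rng.random(len(batch)) < 0.5
    out = batch.copy()
    out[flip] = batch[flip][:, :, ::-1, :]  # reverse the width axis
    return out, flip


rng = np.random.default_rng(seed=0)
batch = np.random.default_rng(1).random((8, 180, 180, 3), dtype=np.float32)
augmented, flipped = random_horizontal_flip(batch, rng)
print(flipped)
```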

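```python
inputs = keras.Input(shape=(180, 180, 3))
data_aug = keras.Sequential([
    # Flip a random 50% of the images horizontally
    layers.RandomFlip("horizontal"),
    # Rotate by a random angle within ±10% of a full turn (±36 degrees)
    layers.RandomRotation(0.1),
    # Zoom in or out by up to 20%
    layers.RandomZoom(0.2),
])
x = data_aug(inputs)
# From here on, the input is the augmented x, not inputs
x = layers.Rescaling(1. / 255)(x)
x = layers.Conv2D(filters=32, kernel_size=3, activation="relu")(x)
x = layers.MaxPooling2D(pool_size=2)(x)
x = layers.Conv2D(filters=64, kernel_size=3, activation="relu")(x)
x = layers.MaxPooling2D(pool_size=2)(x)
x = layers.Conv2D(filters=128, kernel_size=3, activation="relu")(x)
x = layers.MaxPooling2D(pool_size=2)(x)
x = layers.Conv2D(filters=256, kernel_size=3, activation="relu")(x)
x = layers.MaxPooling2D(pool_size=2)(x)
x = layers.Conv2D(filters=256, kernel_size=3, activation="relu")(x)
x = layers.Flatten()(x)
# Note this: Dropout before the classifier
x = layers.Dropout(0.5)(x)
outputs = layers.Dense(1, activation="sigmoid")(x)
model = keras.Model(inputs=inputs, outputs=outputs)
model.compile(loss="binary_crossentropy", optimizer="rmsprop", metrics=["accuracy"])
# Save to a separate file so the checkpoint of the previous model is not overwritten
callbacks = [
    keras.callbacks.ModelCheckpoint(filepath="convnet_from_scratch_with_augmentation.keras",
                                    save_best_only=True, monitor="val_loss")
]
```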

Also, since overfitting is expected to set in later than before, we increase the number of training epochs to 100.

```python
history = model.fit(train_dataset, epochs=100, validation_data=validation_dataset, callbacks=callbacks)
```

On the test set, this improves the result by about 10 percentage points over the previous model, a solid gain.
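The `Dropout(0.5)` layer added before the classifier also fights overfitting. A minimal NumPy sketch of the "inverted dropout" scheme Keras follows (the helper name is ours): during training each value is zeroed with probability `rate` and the survivors are scaled by 1 / (1 − rate), so the expected activation is unchanged. At inference the layer does nothing.

```python
import numpy as np


def inverted_dropout(x, rate, rng, training=True):
    """Zero each entry with probability `rate` during training and scale
    survivors by 1 / (1 - rate); return the input unchanged at inference."""
    if not training or rate == 0.0:
        return x
    keep = rng.random(x.shape) >= rate
    return np.where(keep, x / (1.0 - rate), 0.0)


rng = np.random.default_rng(seed=0)
features = np.ones((4, 12544))  # e.g. a batch of flattened feature vectors
dropped = inverted_dropout(features, rate=0.5, rng=rng)
# Roughly half the entries are zero, and the mean stays close to 1.0
print((dropped == 0).mean(), dropped.mean())
```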

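Using a pretrained model

We can take a model that someone else has already trained and reuse it for an entirely different task. Deep models are, to some degree, portable between problems.

There are two ways to use a pretrained model: feature extraction and fine-tuning.

Feature extraction

We can use the VGG16 network trained on ImageNet for feature extraction.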

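```python
from tensorflow import keras

# Instantiate the VGG16 convolutional base
conv_base = keras.applications.vgg16.VGG16(
    # weights: the weight checkpoint used to initialize the model
    weights="imagenet",
    # include_top: whether to include the densely connected classifier on top.
    # We plan to use our own cat/dog classifier, so False
    include_top=False,
    # input_shape: the shape of the image tensors we will feed in
    input_shape=(180, 180, 3),
)
```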

Besides VGG16, `keras.applications` offers other image classification models. If you're interested, explore the API on your own.

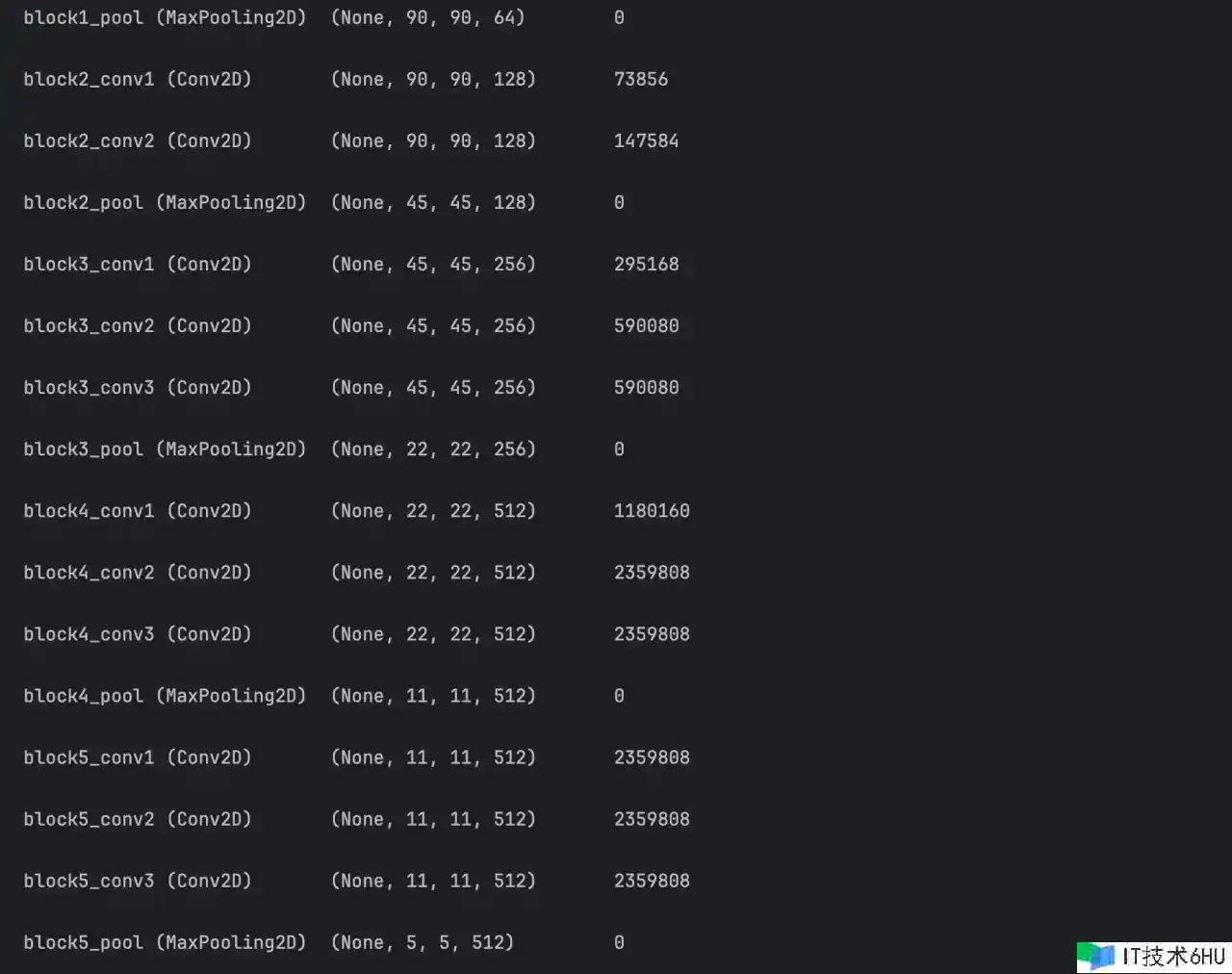

Note that the output shape of this convolutional base is 5 × 5 × 512.
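Where does 5 × 5 come from? VGG16's 3×3 convolutions use `padding="same"`, so they keep the spatial size. Only its five 2×2 max-pooling layers (stride 2) shrink the feature map, each halving it and rounding down: 180 → 90 → 45 → 22 → 11 → 5. The last block has 512 filters. A tiny sketch (hypothetical helper):

```python
def vgg16_base_output_shape(height, width, channels_last_block=512):
    """Spatial size after VGG16's five 2x2/stride-2 max-pooling layers."""
    for _ in range(5):
        height, width = height // 2, width // 2
    return (height, width, channels_last_block)


print(vgg16_base_output_shape(180, 180))  # (5, 5, 512)
print(vgg16_base_output_shape(224, 224))  # (7, 7, 512), VGG16's original ImageNet input size
```

After `Flatten`, each image therefore becomes a vector of 5 · 5 · 512 = 12,800 features.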

With that in place, we can start training. Full code:

```python
import os
import pathlib
import shutil

import numpy as np
from matplotlib import pyplot as plt
from tensorflow import keras
from tensorflow.keras import layers
from tensorflow.keras.utils import image_dataset_from_directory

original_dir = pathlib.Path("PetImages")
new_base_dir = pathlib.Path("cats_vs_dogs_small")

# Instantiate the VGG16 convolutional base
# weights: the weight checkpoint used to initialize the model
# include_top=False: leave out the densely connected classifier, since we use our own cat/dog classifier
conv_base = keras.applications.vgg16.VGG16(
    weights="imagenet", include_top=False, input_shape=(180, 180, 3)
)

def make_subset(subset_name, start_idx, end_idx):
    # Same helper as above; only needed if the split hasn't been created yet
    for category in ("cat", "dog"):
        dst_dir = new_base_dir / subset_name / category
        os.makedirs(dst_dir, exist_ok=True)
        fnames = [f"{category}/{i}.jpg" for i in range(start_idx, end_idx)]
        for fname in fnames:
            shutil.copyfile(src=original_dir / fname, dst=new_base_dir / subset_name / fname)

def get_features_and_labels(dataset):
    all_features = []
    all_labels = []
    for images, labels in dataset:
        # VGG16 expects its inputs to be prepared by preprocess_input, so apply it to each batch first
        preprocessed_images = keras.applications.vgg16.preprocess_input(images)
        features = conv_base.predict(preprocessed_images)
        all_features.append(features)
        all_labels.append(labels)
    return np.concatenate(all_features), np.concatenate(all_labels)

if __name__ == '__main__':
    # image_dataset_from_directory shuffles the images, resizes them to a uniform size, and packs them into batches
    train_dataset = image_dataset_from_directory(new_base_dir / "train", image_size=(180, 180), batch_size=32)
    validation_dataset = image_dataset_from_directory(new_base_dir / "validation", image_size=(180, 180), batch_size=32)
    test_dataset = image_dataset_from_directory(new_base_dir / "test", image_size=(180, 180), batch_size=32)

    train_features, train_labels = get_features_and_labels(train_dataset)
    validation_features, validation_labels = get_features_and_labels(validation_dataset)
    test_features, test_labels = get_features_and_labels(test_dataset)
    conv_base.summary()
    print(f"Train features shape: {train_features.shape}")

    # The input shape must be (5, 5, 512) to match conv_base's output, otherwise Keras raises an error
    inputs = keras.Input(shape=(5, 5, 512))
    # Features must go through a Flatten layer before they reach the Dense layer
    x = layers.Flatten()(inputs)
    x = layers.Dense(256)(x)
    x = layers.Dropout(0.5)(x)
    outputs = layers.Dense(1, activation="sigmoid")(x)
    model = keras.Model(inputs=inputs, outputs=outputs)
    model.compile(loss="binary_crossentropy", optimizer="rmsprop", metrics=["accuracy"])
    model.summary()

    callbacks = [
        keras.callbacks.ModelCheckpoint(filepath="feature_extraction.keras", save_best_only=True,
                                        monitor="val_loss")
    ]
    # The training arguments change here: fit on the extracted feature arrays instead of the image datasets
    history = model.fit(train_features, train_labels, epochs=20,
                        validation_data=(validation_features, validation_labels), callbacks=callbacks)

    accuracy = history.history["accuracy"]
    val_accuracy = history.history["val_accuracy"]
    loss = history.history["loss"]
    val_loss = history.history["val_loss"]
    epochs = range(1, len(accuracy) + 1)
    plt.plot(epochs, accuracy, "bo", label="Training accuracy")
    plt.plot(epochs, val_accuracy, "b", label="Validation accuracy")
    plt.title("Training and validation accuracy")
    plt.legend()
    plt.figure()
    plt.plot(epochs, loss, "bo", label="Training loss")
    plt.plot(epochs, val_loss, "b", label="Validation loss")
    plt.title("Training and validation loss")
    plt.legend()
    plt.show()

    test_model = keras.models.load_model("feature_extraction.keras")
    test_loss, test_acc = test_model.evaluate(test_features, test_labels)
    print(f" test_acc: {test_acc:.3f}")
```

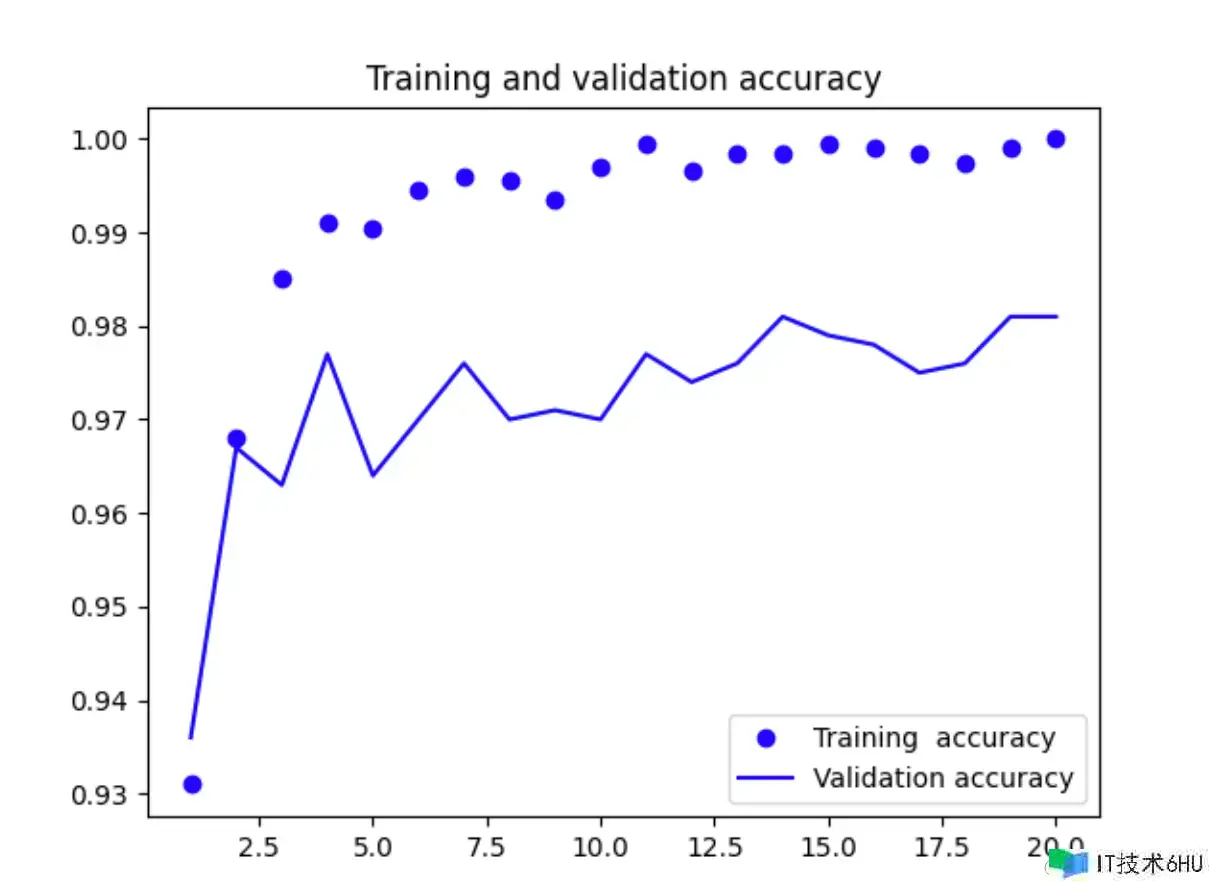

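The plot above shows the validation accuracy.

Accuracy on the test data also reaches 97%.

The accuracy is this high because ImageNet itself contains plenty of cat and dog samples. In other words, the pretrained model already has the knowledge this task needs. When you apply it to other kinds of problems, you often won't get results this good.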
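What does `preprocess_input` do to the images in `get_features_and_labels`? According to the Keras documentation, VGG16 uses the "caffe" preprocessing mode. It converts RGB to BGR and subtracts the per-channel ImageNet mean, without scaling to [0, 1]. That is why this pipeline has no `Rescaling(1/255)` layer. A NumPy sketch of that transformation (our own function, for illustration only):

```python
import numpy as np

# ImageNet channel means in B, G, R order, as used by "caffe"-style preprocessing
IMAGENET_BGR_MEAN = np.array([103.939, 116.779, 123.68], dtype=np.float32)


def vgg16_style_preprocess(images_rgb):
    """images_rgb: array of shape (..., 3), RGB order, values in [0, 255].

    Reorders channels RGB -> BGR, then subtracts the per-channel ImageNet mean.
    """
    bgr = np.asarray(images_rgb, dtype=np.float32)[..., ::-1]
    return bgr - IMAGENET_BGR_MEAN


pixel = np.array([[[255.0, 0.0, 0.0]]])  # one pure-red pixel
print(vgg16_style_preprocess(pixel))
```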

Fine-tuning a pretrained model

Recall the `conv_base` we instantiated earlier. We can fine-tune it:

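```python
conv_base.trainable = True
# Freeze every layer except the last 4.
# Why not fine-tune more layers? In principle you can, but it tends to work poorly:
# earlier conv layers encode more generic, reusable features, while later layers encode
# more task-specific ones, so fine-tuning the earlier layers brings smaller returns
for layer in conv_base.layers[:-4]:
    layer.trainable = False
```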
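Which layers stay trainable? The sketch below rebuilds VGG16's layer names by hand from its block structure and applies the same slice. The names are an assumption written out for illustration; `[layer.name for layer in conv_base.layers]` on the real model is the authoritative check. It shows that `[:-4]` leaves the three convolutions of block 5 plus its pooling layer trainable. Pooling has no weights, so in effect three conv layers get fine-tuned.

```python
# Layer names of VGG16(include_top=False), in order. The input layer's name differs between
# Keras versions, so "input" here is only a placeholder.
VGG16_BASE_LAYERS = ["input"]
for block, n_convs in ((1, 2), (2, 2), (3, 3), (4, 3), (5, 3)):
    VGG16_BASE_LAYERS += [f"block{block}_conv{i}" for i in range(1, n_convs + 1)]
    VGG16_BASE_LAYERS.append(f"block{block}_pool")


def trainable_layers(layer_names, n_last):
    """Mimic `for layer in layers[:-n_last]: layer.trainable = False`; return what stays trainable."""
    frozen = set(layer_names[:-n_last])
    return [name for name in layer_names if name not in frozen]


print(len(VGG16_BASE_LAYERS))
print(trainable_layers(VGG16_BASE_LAYERS, 4))
```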

Full code

import pathlib

import keras

import numpy as np

from matplotlib import pyplot as plt

from tensorflow.keras import layers

from tensorflow.keras.utils import image_dataset_from_directory

original_dir = pathlib.Path("PetImages")

new_base_dir = pathlib.Path("cats_vs_dogs_small")

# 将vgg16 卷积基 实例化, 而且

conv_base = keras.applications.vgg16.VGG16(

# weights 模型初始化的权重点

# 是否包含密布衔接分类器,由于咱们计划运用自己的猫狗分类,所以这儿显然用false

weights="imagenet", include_top=False, input_shape=(180, 180, 3)

)

def get_features_and_labels(dataset):

all_features = []

all_labels = []

for images, labels in dataset:

# preprocess_input 只接纳图画作为参数 所以要预处理一下

preprocessed_images = keras.applications.vgg16.preprocess_input(images)

features = conv_base.predict(preprocessed_images)

all_features.append(features)

all_labels.append(labels)

return np.concatenate(all_features), np.concatenate(all_labels)

if __name__ == '__main__':

conv_base.trainable = True

# 冻结除最终4层外的一切层

# 为什么不对更多的层进行微调? 原理上是能够的,可是这样做作用欠好

# 卷积层中较早增加的是更通用的可复用特征,较晚增加的则是针对性更强的特征,微调较早增加的层,收益会更小

for layer in conv_base.layers[:-4]:

layer.trainable = False

# image_dataset_from_directory 会帮咱们主动将图片打乱顺序,而且调理成一致大小,并打包成批量

train_dataset = image_dataset_from_directory(new_base_dir / "train", image_size=(180, 180), batch_size=32)

validation_dataset = image_dataset_from_directory(new_base_dir / "validation", image_size=(180, 180), batch_size=32)

test_dataset = image_dataset_from_directory(new_base_dir / "test", image_size=(180, 180), batch_size=32)

train_features, train_labels = get_features_and_labels(train_dataset)

validation_features, validation_labels = get_features_and_labels(validation_dataset)

test_features, test_labels = get_features_and_labels(test_dataset)

conv_base.summary()

print(f"Train features shape: {train_features.shape}")

# 这儿的参数是5,5,512 了 否则input对不上要犯错

inputs = keras.Input(shape=(5, 5, 512))

# 将特征传入dense层之前,必须先通过flattern层

x = layers.Flatten()(inputs)

x = layers.Dense(256)(x)

x = layers.Dropout(0.5)(x)

outputs = layers.Dense(1, activation="sigmoid")(x)

model = keras.Model(inputs=inputs, outputs=outputs)

model.compile(loss="binary_crossentropy", optimizer=keras.optimizers.RMSprop(learning_rate=1e-5), metrics=["accuracy"])

model.summary()

callbacks = [

keras.callbacks.ModelCheckpoint(filepath="fine_tuning.keras", save_best_only=True,

monitor="val_loss", )

]

# 这儿练习的参数 要改一下

history = model.fit(train_features, train_labels, epochs=30, validation_data=(validation_features, validation_labels),

callbacks=callbacks)

accuracy = history.history["accuracy"]

val_accuracy = history.history["val_accuracy"]

loss = history.history["loss"]

val_loss = history.history["val_loss"]

epochs = range(1, len(accuracy) + 1)

plt.plot(epochs, accuracy, "bo", label="Training accuracy")

plt.plot(epochs, val_accuracy, "b", label="Validation accuracy")

plt.title("Training and validation accuracy")

plt.legend()

plt.figure()

plt.plot(epochs, loss, "bo", label="Training loss")

plt.plot(epochs, val_loss, "bo", label="Validation loss")

plt.title("Training and validation loss")

plt.legend()

plt.show()

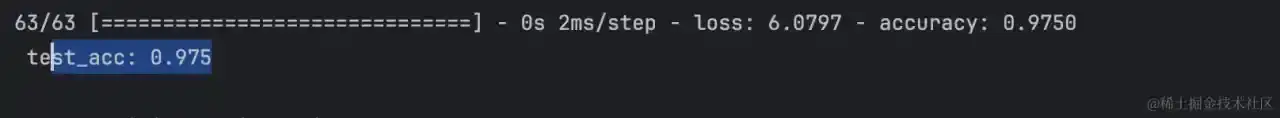

test_model = keras.models.load_model("fine_tuning.keras")

test_loss, test_acc = test_model.evaluate(test_features, test_labels)

print(f" test_acc: {test_acc:.3f}")
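Why `learning_rate=1e-5` instead of RMSprop's default of 1e-3? In RMSprop, each update is the gradient divided by its running root-mean-square, times the learning rate. So the step size depends mostly on the learning rate, not on how large the gradient is. The scalar sketch below implements the textbook update rule with Keras's default `rho=0.9` and `epsilon=1e-7`; Keras's own implementation may place epsilon slightly differently. On the very first step, every weight moves by about lr / √0.1 ≈ 3.2 × lr. With 1e-5, the pretrained VGG16 filters are only nudged.

```python
import math


def rmsprop_step(weight, grad, velocity, learning_rate, rho=0.9, eps=1e-7):
    """One textbook RMSprop update for a single scalar weight.

    `velocity` is a moving average of squared gradients; the step is the gradient
    divided by its running RMS, scaled by the learning rate.
    """
    velocity = rho * velocity + (1 - rho) * grad ** 2
    step = learning_rate * grad / (math.sqrt(velocity) + eps)
    return weight - step, velocity, step


for lr in (1e-3, 1e-5):  # RMSprop's default vs. the fine-tuning setting
    _, _, step = rmsprop_step(weight=0.0, grad=0.5, velocity=0.0, learning_rate=lr)
    print(f"learning_rate={lr:g}: first step = {step:.2e}")
```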