Temperature forecasting

```shell
wget https://s3.amazonaws.com/keras-datasets/jena_climate_2009_2016.csv.zip
unzip jena_climate_2009_2016.csv.zip
```

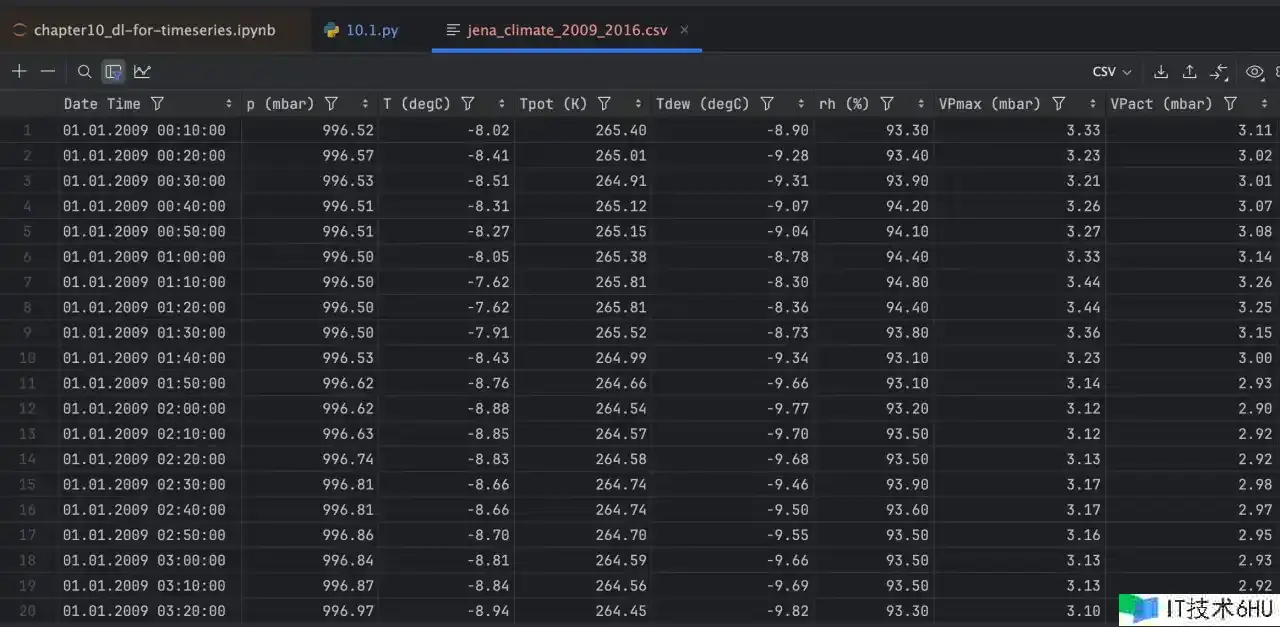

The figure above gives a rough idea of what the data looks like.

Preparing the data

```python
import os

import matplotlib.pyplot as plt
import numpy as np

# The data is recorded every 10 minutes and contains 14 physical quantities
fname = os.path.join("jena_climate_2009_2016.csv")

if __name__ == '__main__':
    with open(fname) as f:
        data = f.read()

    # Split the file into lines (the original "n" was missing its backslash)
    lines = data.split("\n")
    header = lines[0].split(",")
    lines = lines[1:]
    print(header)
    print(len(lines))

    # Drop the "Date Time" column and keep the remaining 14 numeric columns
    temperature = np.zeros((len(lines),))
    raw_data = np.zeros((len(lines), len(header) - 1))
    for i, line in enumerate(lines):
        values = [float(x) for x in line.split(",")[1:]]
        # After dropping "Date Time", column 1 is "T (degC)"
        temperature[i] = values[1]
        raw_data[i, :] = values[:]
```

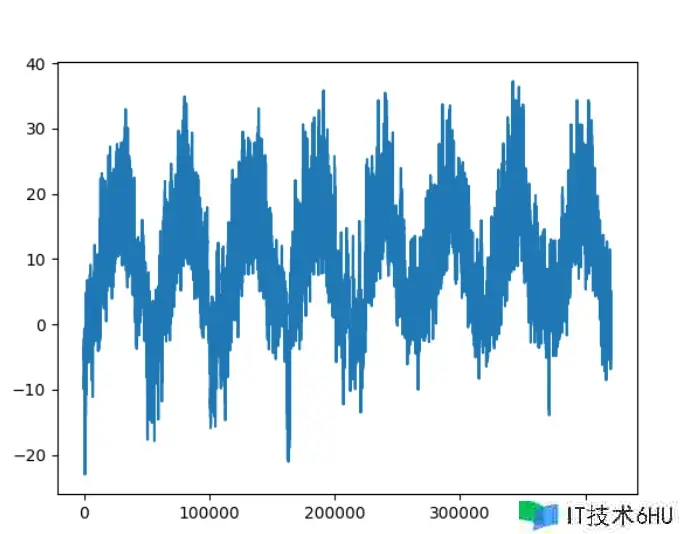
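To make the parsing step concrete, here is a minimal sketch that applies the same logic to a tiny in-memory CSV. The two data rows are illustrative values chosen for this example, not copied from the dataset, and `parse_jena_csv` is a hypothetical helper name; only the layout (a `Date Time` column followed by 14 numeric columns, with `T (degC)` right after `p (mbar)`) mirrors the Jena file.

```python
# Illustrative CSV text with the same layout as jena_climate_2009_2016.csv:
# a "Date Time" column followed by 14 numeric columns. Values are made up.
SAMPLE_CSV = (
    "Date Time,p (mbar),T (degC),Tpot (K),Tdew (degC),rh (%),VPmax (mbar),"
    "VPact (mbar),VPdef (mbar),sh (g/kg),H2OC (mmol/mol),rho (g/m**3),"
    "wv (m/s),max. wv (m/s),wd (deg)\n"
    "01.01.2009 00:10:00,996.5,-8.0,265.4,-8.9,93.3,3.3,3.1,0.2,1.9,3.1,1307.8,1.0,1.8,152.3\n"
    "01.01.2009 00:20:00,996.6,-8.4,265.0,-9.3,93.4,3.2,3.0,0.2,1.9,3.0,1309.8,0.7,1.5,136.1"
)


def parse_jena_csv(text):
    """Hypothetical helper: return (header, temperature list, feature rows)."""
    lines = text.split("\n")
    header = lines[0].split(",")
    lines = lines[1:]
    temperature = []
    raw_data = []
    for line in lines:
        # Drop the "Date Time" field and keep the 14 numeric values
        values = [float(x) for x in line.split(",")[1:]]
        temperature.append(values[1])  # index 1 is "T (degC)"
        raw_data.append(values)
    return header, temperature, raw_data


header, temperature, raw_data = parse_jena_csv(SAMPLE_CSV)
print(len(header) - 1)  # 14 feature columns
print(temperature)      # [-8.0, -8.4]
```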

```python
# Temperature curve over the whole 8-year span
plt.plot(range(len(temperature)), temperature)
plt.show()
```

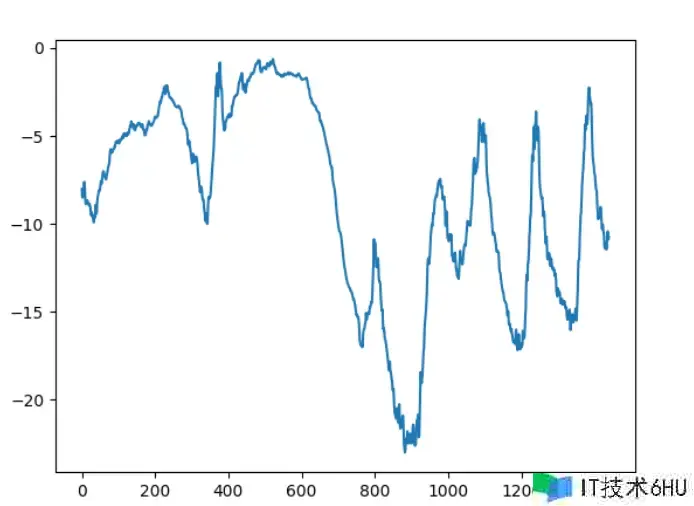

```python
# Look at the first 10 days of data (144 readings per day x 10 days = 1440 points)
plt.plot(range(1440), temperature[:1440])
plt.show()
```

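You can see that the temperature follows roughly the same yearly pattern in each of the 8 years.

The second plot zooms in on the temperature curve for the first few days.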
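The code in this post feeds `raw_data` to the model without any scaling, even though the features live on very different scales (pressure in the hundreds of mbar, humidity in percent, and so on). The version of this example in François Chollet's *Deep Learning with Python* (2nd ed.) first normalizes every feature using the mean and standard deviation of the training portion only, so no statistics leak in from validation or test data. A minimal numpy sketch of that step, using a small synthetic array as a stand-in for `raw_data` and assuming the first 50% of rows are the training portion:

```python
import numpy as np

# Synthetic stand-in for raw_data: 1000 time steps x 3 features on very different scales
rng = np.random.default_rng(0)
raw_data = rng.normal(loc=[1000.0, 10.0, 0.5], scale=[10.0, 5.0, 0.1], size=(1000, 3))

# Assumed chronological split: the first 50% of the rows are used for training
num_train_samples = int(0.5 * len(raw_data))

# Compute statistics on the training rows only, then apply them to every row
mean = raw_data[:num_train_samples].mean(axis=0)
raw_data -= mean
std = raw_data[:num_train_samples].std(axis=0)
raw_data /= std

print(raw_data[:num_train_samples].mean(axis=0))  # approximately 0 for every feature
print(raw_data[:num_train_samples].std(axis=0))   # approximately 1 for every feature
```

The `temperature` targets can stay in °C, which keeps the reported MAE in degrees.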

Preparing the training, validation, and test sets

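```python
from tensorflow import keras

# num_train_samples / num_val_samples are not defined in the original snippet;
# a chronological 50% / 25% / 25% train / validation / test split is assumed here
num_train_samples = int(0.5 * len(raw_data))
num_val_samples = int(0.25 * len(raw_data))

# Create 3 datasets: training, validation and test
# Keep one data point out of every 6 (one per hour): readings are taken every
# 10 minutes and the temperature barely changes between two consecutive
# readings, so there is no need to keep them all
sampling_rate = 6
# Each input sequence covers the past 5 days (120 hourly points)
sequence_length = 120
# The target is the temperature 24 hours after the end of each sequence
delay = sampling_rate * (sequence_length + 24 - 1)
batch_size = 256

train_dataset = keras.utils.timeseries_dataset_from_array(
    raw_data[:-delay],
    targets=temperature[delay:],
    sampling_rate=sampling_rate,
    sequence_length=sequence_length,
    shuffle=True,
    batch_size=batch_size,
    start_index=0,
    end_index=num_train_samples)

val_dataset = keras.utils.timeseries_dataset_from_array(
    raw_data[:-delay],
    targets=temperature[delay:],
    sampling_rate=sampling_rate,
    sequence_length=sequence_length,
    shuffle=True,
    batch_size=batch_size,
    start_index=num_train_samples,
    end_index=num_train_samples + num_val_samples)

test_dataset = keras.utils.timeseries_dataset_from_array(
    raw_data[:-delay],
    targets=temperature[delay:],
    sampling_rate=sampling_rate,
    sequence_length=sequence_length,
    shuffle=True,
    batch_size=batch_size,
    start_index=num_train_samples + num_val_samples)
```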
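The roles of `sampling_rate`, `sequence_length` and `delay` can be checked with plain arithmetic. Per the Keras documentation, `targets[i]` belongs to the window that starts at index `i`, and that window takes rows `i, i + sampling_rate, ...` of `raw_data[:-delay]`. Because the targets are `temperature[delay:]`, window `i` is paired with `temperature[i + delay]`. The sketch below reproduces that indexing without Keras (`window_indices` is a hypothetical helper) and shows that the target lands exactly 24 hours after the last input reading.

```python
SAMPLING_RATE = 6        # keep one reading per hour (readings are 10 minutes apart)
SEQUENCE_LENGTH = 120    # 120 hourly points, i.e. 5 days of history
MINUTES_PER_READING = 10
DELAY = SAMPLING_RATE * (SEQUENCE_LENGTH + 24 - 1)


def window_indices(start):
    """Hypothetical helper: raw row indices used as inputs, and the target's raw row index."""
    inputs = [start + k * SAMPLING_RATE for k in range(SEQUENCE_LENGTH)]
    # targets=temperature[delay:] means target j is temperature[j + DELAY]
    target = start + DELAY
    return inputs, target


readings_per_day = 24 * 60 // MINUTES_PER_READING
inputs, target = window_indices(0)
span_hours = (inputs[-1] - inputs[0]) * MINUTES_PER_READING / 60
lead_hours = (target - inputs[-1]) * MINUTES_PER_READING / 60

print(readings_per_day)         # 144, so 10 days = 1440 readings
print(DELAY)                    # 858
print(len(inputs), span_hours)  # 120 119.0
print(lead_hours)               # 24.0
```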

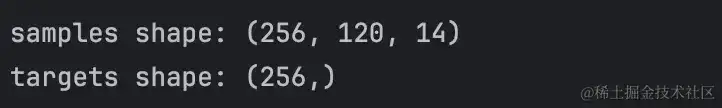

You can inspect what the training dataset yields:

```python
for samples, targets in train_dataset:
    print("samples shape:", samples.shape)
    print("targets shape:", targets.shape)
    break
```

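`samples` is a batch of 256 samples, each holding 120 consecutive hourly readings of the 14 features, so its shape is (256, 120, 14). `targets` is the corresponding array of 256 target temperatures, with shape (256,).

Note that because `shuffle=True`, `samples[0]` and `samples[1]` are not necessarily close to each other in time.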
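A small pure-Python illustration of that point, with toy sizes chosen for readability: shuffling reorders whole windows, so neighbours in a batch can start far apart in time, while the readings inside each window keep their chronological order. This only mimics the effect; it is not how Keras implements `shuffle=True` internally.

```python
import random

SAMPLING_RATE = 6
SEQUENCE_LENGTH = 5   # toy length so the windows are easy to print
NUM_WINDOWS = 20

# Each window is identified by its start row; its rows are start, start + 6, ...
starts = list(range(NUM_WINDOWS))
random.Random(42).shuffle(starts)

# Build one "batch" from the first 4 shuffled windows
batch = [[s + k * SAMPLING_RATE for k in range(SEQUENCE_LENGTH)] for s in starts[:4]]
for window in batch:
    print(window)
```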

A simple LSTM-based model

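There is a family of neural network architectures built specifically for sequences in which causality and order matter: recurrent neural networks (RNNs).

Among them, the LSTM (long short-term memory) is the most widely used.

Here we use an LSTM for the task above.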
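To give a feel for what `layers.LSTM(16)` computes, here is a minimal numpy sketch of a standard LSTM forward pass over one sequence. It is not Keras's implementation: the weight layout (`W` of shape `(input_dim, 4 * units)`, `U` of shape `(units, 4 * units)`, a bias of length `4 * units`, gates ordered input / forget / candidate / output) and the random initialization are assumptions for illustration. Like `LSTM(16)` with default settings, it returns only the final hidden state.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm_forward(x_seq, W, U, b):
    """Run a single-layer LSTM over x_seq (timesteps, input_dim); return the last hidden state."""
    units = U.shape[0]
    h = np.zeros(units)  # hidden state (the layer's output)
    c = np.zeros(units)  # cell state (the long-term "memory")
    for x_t in x_seq:
        z = x_t @ W + h @ U + b
        i = sigmoid(z[:units])               # input gate
        f = sigmoid(z[units:2 * units])      # forget gate
        g = np.tanh(z[2 * units:3 * units])  # candidate values
        o = sigmoid(z[3 * units:])           # output gate
        c = f * c + i * g                    # forget part of the old memory, add new information
        h = o * np.tanh(c)
    return h


timesteps, input_dim, units = 120, 14, 16  # same sizes as the model in this post
rng = np.random.default_rng(0)
W = rng.normal(scale=0.1, size=(input_dim, 4 * units))
U = rng.normal(scale=0.1, size=(units, 4 * units))
b = np.zeros(4 * units)

h_last = lstm_forward(rng.normal(size=(timesteps, input_dim)), W, U, b)
print(h_last.shape)              # (16,)
print(W.size + U.size + b.size)  # 1984
```

The 1984 figure matches the usual LSTM parameter formula 4 × (units × (input_dim + units) + units), which is what `model.summary()` should report for the `LSTM(16)` layer on 14 input features.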

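```python
from tensorflow import keras
from tensorflow.keras import layers

# Input: sequences of 120 time steps, each with 14 features
inputs = keras.Input(shape=(sequence_length, raw_data.shape[-1]))
# LSTM with 16 units; by default it returns only the output of the last time step
x = layers.LSTM(16)(inputs)
# One output unit with no activation: the predicted temperature
outputs = layers.Dense(1)(x)
model = keras.Model(inputs, outputs)

# Save the model only when the validation loss improves
callbacks = [
    keras.callbacks.ModelCheckpoint("jena_lstm.keras", save_best_only=True)
]
model.compile(optimizer="rmsprop", loss="mse", metrics=["mae"])
history = model.fit(train_dataset, epochs=10,
                    validation_data=val_dataset,
                    callbacks=callbacks)
```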

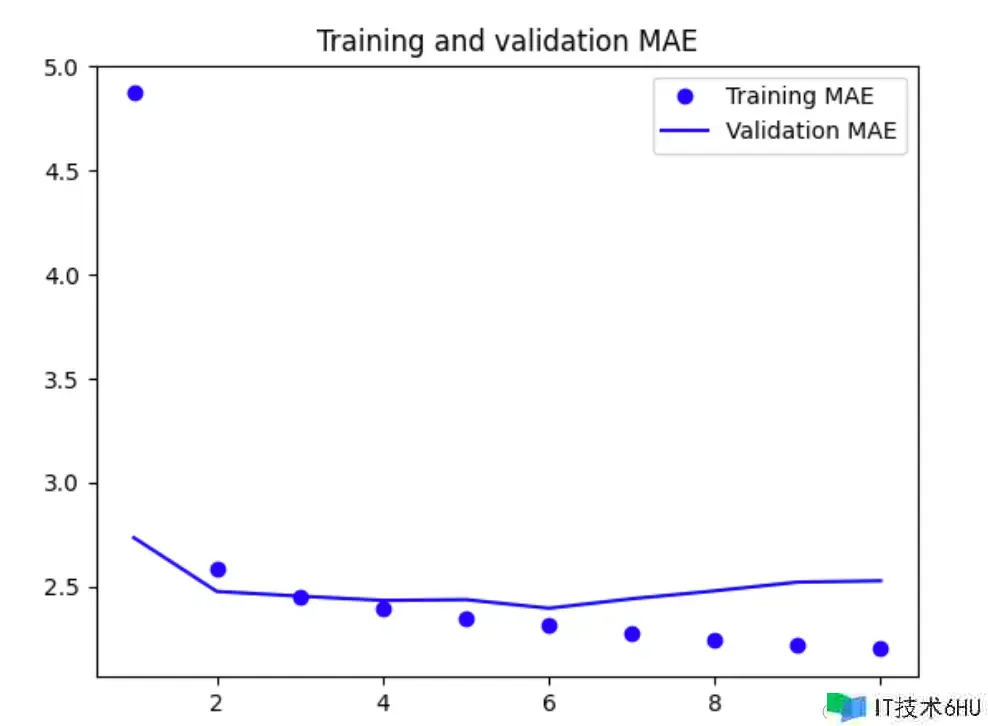
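The model is trained with `loss="mse"` but monitored with `metrics=["mae"]`. MSE penalizes large errors heavily and has a smooth gradient, while MAE is in the same unit as the target (°C here), so it is easier to interpret. A small pure-Python sketch with made-up predictions shows the difference:

```python
# Made-up target temperatures (degC) and predictions, for illustration only
targets = [2.0, 5.0, -1.0, 10.0]
preds = [3.0, 4.0, -1.0, 6.0]

errors = [p - t for p, t in zip(preds, targets)]
mse = sum(e ** 2 for e in errors) / len(errors)  # what loss="mse" minimizes
mae = sum(abs(e) for e in errors) / len(errors)  # what metrics=["mae"] reports

print(mse)  # 4.5
print(mae)  # 1.5
```

The single 4 °C miss dominates the MSE, while an MAE of 1.5 reads directly as "off by 1.5 °C on average".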

```python
# Plot training and validation MAE for each epoch
train_mae = history.history["mae"]
val_mae = history.history["val_mae"]
epochs = range(1, len(train_mae) + 1)
plt.figure()
plt.plot(epochs, train_mae, "bo", label="Training MAE")
plt.plot(epochs, val_mae, "b", label="Validation MAE")
plt.title("Training and validation MAE")
plt.legend()
plt.show()

# Reload the best checkpoint (lowest validation loss) saved during training
model = keras.models.load_model("jena_lstm.keras")
```

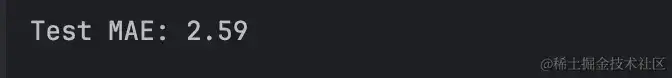

```python
# evaluate() returns [loss, mae]; index 1 is the MAE
print(f"Test MAE: {model.evaluate(test_dataset)[1]:.2f}")
```

This prints the model's MAE on the test set, in degrees Celsius.
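`load_model` is called before evaluation because `ModelCheckpoint(..., save_best_only=True)` only overwrites the file when the monitored quantity improves. Its default monitor is `val_loss`, so the file ends up holding the epoch with the lowest validation loss rather than the last epoch. The pure-Python sketch below mimics that bookkeeping with made-up per-epoch losses; it is a simplified model of the callback's behaviour, not its source code.

```python
# Made-up validation losses for 10 epochs
val_losses = [9.8, 9.1, 8.7, 8.9, 8.6, 8.8, 9.0, 8.65, 9.3, 9.5]

best = float("inf")
saved_epoch = None
for epoch, val_loss in enumerate(val_losses, start=1):
    # save_best_only=True: write the checkpoint only when val_loss improves
    if val_loss < best:
        best = val_loss
        saved_epoch = epoch

print(saved_epoch, best)  # 5 8.6
```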

Another version: adding dropout

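Models regularized with dropout generally take longer to converge fully, so here the number of training epochs is raised to 5 times the original (from 10 to 50).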

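```python
from tensorflow import keras
from tensorflow.keras import layers

inputs = keras.Input(shape=(sequence_length, raw_data.shape[-1]))
# Regularization: recurrent_dropout drops units of the LSTM's recurrent state,
# and a Dropout layer is applied to the LSTM's output
x = layers.LSTM(32, recurrent_dropout=0.25)(inputs)
x = layers.Dropout(0.5)(x)
outputs = layers.Dense(1)(x)
model = keras.Model(inputs, outputs)

callbacks = [
    keras.callbacks.ModelCheckpoint("jena_lstm_dropout.keras", save_best_only=True)
]
model.compile(optimizer="rmsprop", loss="mse", metrics=["mae"])
# 5x as many epochs as before, because dropout slows convergence
history = model.fit(train_dataset, epochs=50,
                    validation_data=val_dataset,
                    callbacks=callbacks)
```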
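A minimal pure-Python sketch of (inverted) dropout as typically applied during training: each value is zeroed with probability `rate` and the survivors are scaled by `1 / (1 - rate)`, so the expected value is unchanged; at inference the input passes through untouched. `Dropout(0.5)` applies this to the LSTM's output, while `recurrent_dropout=0.25` applies a similar mask to the recurrent state inside the LSTM (in Keras the same mask is reused at every time step). The function name `dropout` and the fixed seed are illustrative choices. One practical side effect: with a non-zero `recurrent_dropout`, Keras cannot use the fast cuDNN LSTM kernel on GPU, which makes the 50-epoch run slower still.

```python
import random


def dropout(values, rate, training, rng):
    """Inverted dropout: zero each value with probability `rate`, rescale the survivors."""
    if not training or rate == 0.0:
        return list(values)  # inference: identity
    keep = 1.0 - rate
    return [v / keep if rng.random() < keep else 0.0 for v in values]


rng = random.Random(0)
x = [1.0] * 10_000
y_train = dropout(x, rate=0.5, training=True, rng=rng)
y_infer = dropout(x, rate=0.5, training=False, rng=rng)

print(sorted(set(y_train)))             # [0.0, 2.0]
print(round(sum(y_train) / len(x), 2))  # close to 1.0: the expectation is preserved
print(y_infer == x)                     # True
```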

Summary

If order matters in your data, as it does with time series in particular, a recurrent neural network (RNN) is a good fit.