Background

A study summary of how LeakCanary works.

Integration

Integration is simple: add the dependency below and nothing else. No extra code is needed.

Internally, MainProcessAppWatcherInstaller initializes LeakCanary automatically.

```groovy
dependencies {
  // debugImplementation because LeakCanary should only run in debug builds.
  debugImplementation 'com.squareup.leakcanary:leakcanary-android:3.0-alpha-1'
}
```

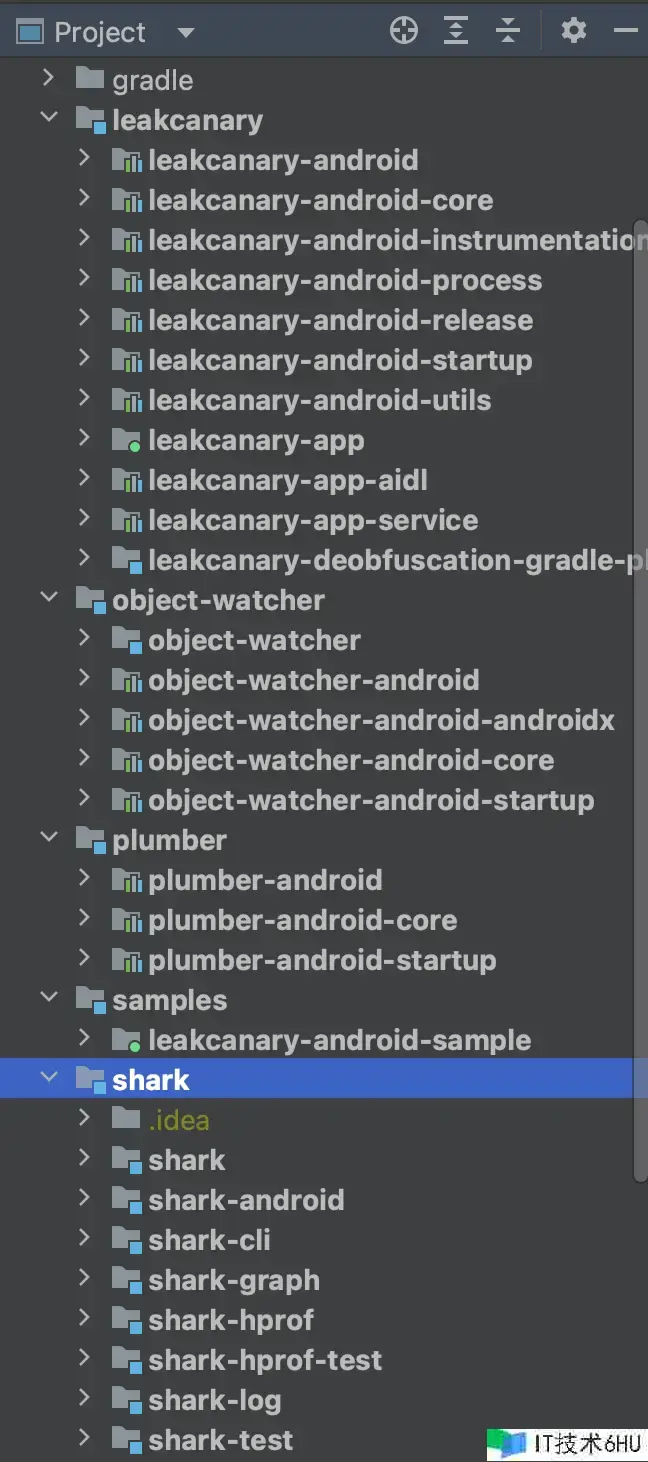

Code structure

Version: 3.0-SNAPSHOT

Module overview

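- leakcanary
  - leakcanary-android: the integration entry module; it installs LeakCanary and exposes the public API.
  - leakcanary-android-core: the core code, including the screens that display memory leaks and the notifications.
  - leakcanary-android-release: dedicated to release builds.
- object-watcher
  - object-watcher: the watching code; its core classes are ObjectWatcher and KeyedWeakReference.
  - object-watcher-android, object-watcher-androidx, object-watcher-android-core: the watchers that automatically watch objects that may leak, for example ActivityWatcher and ServiceWatcher.
  - object-watcher-android-startup: automatic initialization at startup, AppWatcherStartupInitializer.
- shark: parent module of the analysis modules
  - shark-android: Android-specific analysis, including device information such as the model and Android version.
  - shark-cli: command-line tool that runs Shark's heap analysis from the command line.
  - shark-graph: the graph of object references in the heap.
  - shark-hprof: parses hprof files.
  - shark-log: logging.
- plumber
  - plumber-android: automatic fixes; for known memory leaks it tries to fix them at runtime (for example by setting a field to null via reflection).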

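Overall flow

- Initialization: mainly MainProcessAppWatcherInstaller.
- Before detection: when does detection start? Handled by the InstallableWatcher implementations, such as ActivityWatcher.
- During detection: what is a leak decided on? Handled by ObjectWatcher.
- After detection: mainly dumping a heap snapshot file and analyzing it.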

Initialization

By default, adding the LeakCanary library is enough; developers do not need to initialize it manually.

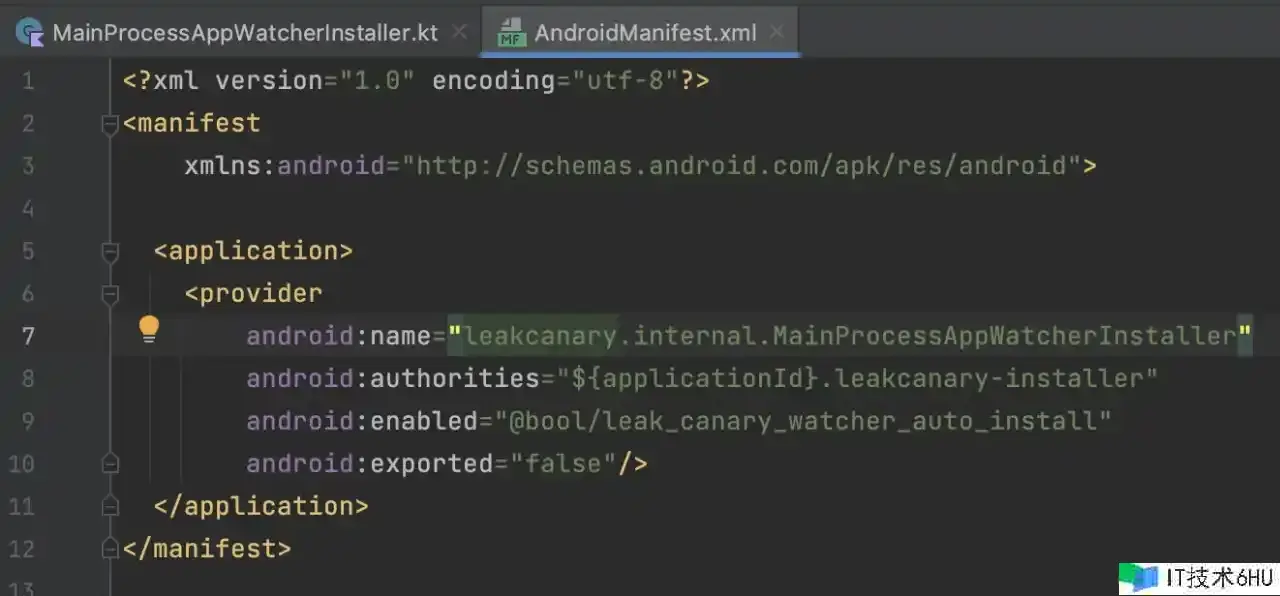

MainProcessAppWatcherInstaller

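Automatic initialization is done through a ContentProvider.

- ContentProviders registered in AndroidManifest are created and initialized when the application starts, before Application.onCreate() runs.
- AppWatcherInstaller extends ContentProvider, implements onCreate, and is registered in the AndroidManifest file.
- Its onCreate calls AppWatcher.manualInstall() to run LeakCanary's initialization.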

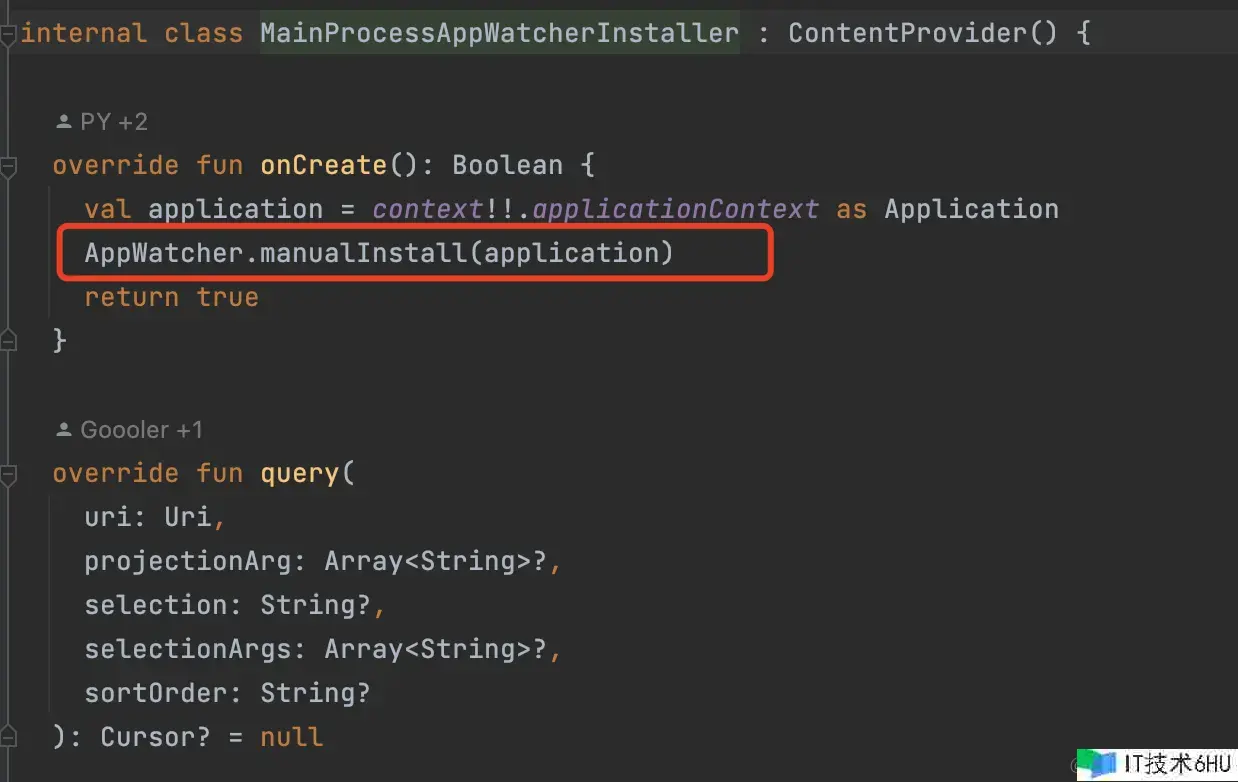

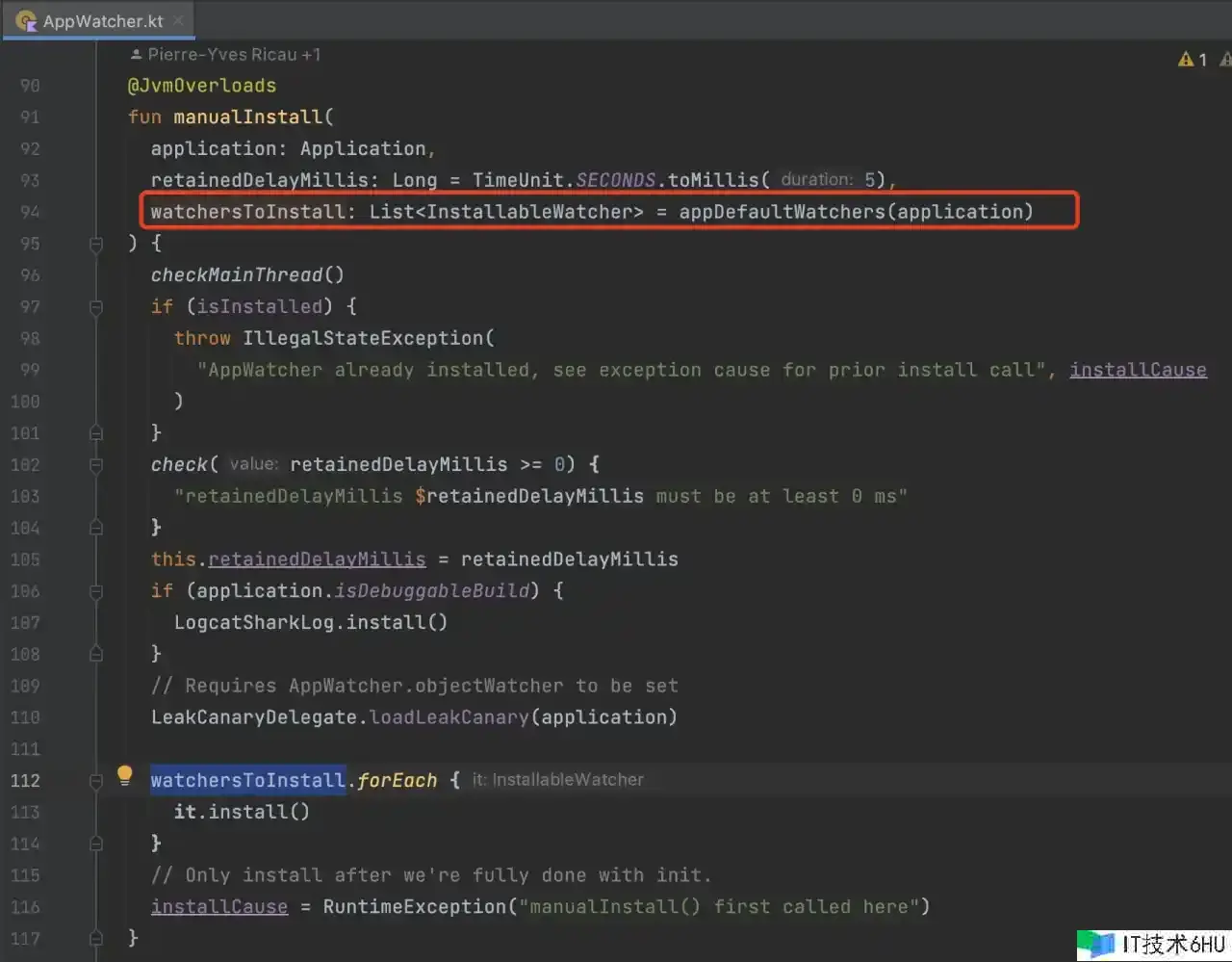

AppWatcher.manualInstall

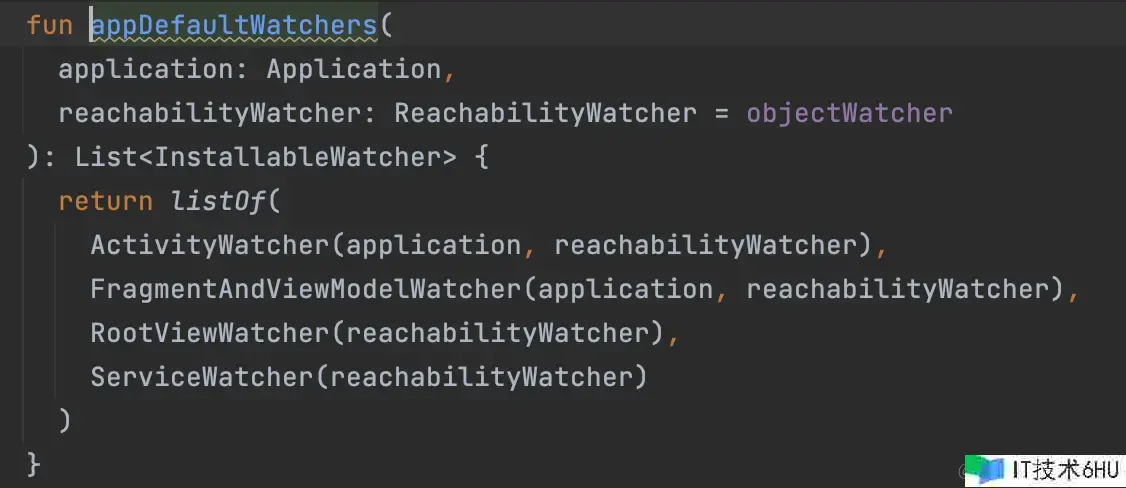

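- The watchersToInstall parameter defaults to appDefaultWatchers, which returns four default watchers, each responsible for watching instances of its own type.
- manualInstall iterates over these watchers and calls each one's install method to run its setup.
- The four watchers are ActivityWatcher, FragmentAndViewModelWatcher, RootViewWatcher and ServiceWatcher.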
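The install step boils down to iterating over a list of watchers. Below is a minimal JVM-only sketch of that shape; `InstallableWatcher` is redeclared locally, and `NamedWatcher` and `defaultWatchers()` are hypothetical stand-ins, not LeakCanary's classes.

```kotlin
// Local stand-in for LeakCanary's InstallableWatcher contract.
interface InstallableWatcher {
    fun install()
    fun uninstall()
}

// Hypothetical watcher that only records whether it is currently installed.
class NamedWatcher(val name: String) : InstallableWatcher {
    var installed = false
        private set

    override fun install() { installed = true }
    override fun uninstall() { installed = false }
}

// Mirrors the idea of appDefaultWatchers(): one watcher per watched component type.
fun defaultWatchers(): List<NamedWatcher> = listOf(
    NamedWatcher("ActivityWatcher"),
    NamedWatcher("FragmentAndViewModelWatcher"),
    NamedWatcher("RootViewWatcher"),
    NamedWatcher("ServiceWatcher"),
)

// Mirrors manualInstall(): install every watcher in the given list, in order.
fun manualInstall(watchersToInstall: List<InstallableWatcher> = defaultWatchers()) {
    watchersToInstall.forEach { it.install() }
}
```

Because the list is a parameter, a caller can pass a custom subset of watchers instead of the four defaults.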

InternalLeakCanary

The core logic that runs once LeakCanary has been initialized.

```kotlin
override fun invoke(application: Application) {
  _application = application

  // Check that we run in a debuggable build: LeakCanary is a development-time tool.
  checkRunningInDebuggableBuild()

  // Listen to AppWatcher's object watcher to be told when a watched object is retained.
  AppWatcher.objectWatcher.addOnObjectRetainedListener(this)

  // GC trigger, used to force a garbage collection before counting retained objects.
  val gcTrigger = GcTrigger.Default

  // Config provider: always reads the current LeakCanary.config.
  val configProvider = { LeakCanary.config }

  // Background thread on which heap dump checks run.
  val handlerThread = HandlerThread(LEAK_CANARY_THREAD_NAME)
  handlerThread.start()
  val backgroundHandler = Handler(handlerThread.looper)

  // HeapDumpTrigger decides when to trigger a heap dump.
  heapDumpTrigger = HeapDumpTrigger(
    application, backgroundHandler, AppWatcher.objectWatcher, gcTrigger, configProvider
  )

  // Notify the trigger whenever the app moves between visible and not visible.
  application.registerVisibilityListener { applicationVisible ->
    this.applicationVisible = applicationVisible
    heapDumpTrigger.onApplicationVisibilityChanged(applicationVisible)
  }

  // Keep track of the currently resumed activity.
  registerResumedActivityListener(application)

  // Add LeakCanary's dynamic launcher shortcut to the app.
  addDynamicShortcut(application)

  // We post so that the log happens after Application.onCreate()
  mainHandler.post {
    // https://github.com/square/leakcanary/issues/1981
    // We post to a background handler because HeapDumpControl.iCanHasHeap() checks a shared pref
    // which blocks until loaded and that creates a StrictMode violation.
    backgroundHandler.post {
      SharkLog.d {
        when (val iCanHasHeap = HeapDumpControl.iCanHasHeap()) {
          is Yup -> application.getString(R.string.leak_canary_heap_dump_enabled_text)
          is Nope -> application.getString(
            R.string.leak_canary_heap_dump_disabled_text, iCanHasHeap.reason()
          )
        }
      }
    }
  }
}
```

VisibilityTracker

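How LeakCanary knows whether the app is in the foreground.

It uses ActivityLifecycleCallbacks to count activities that have been started but not yet stopped, and also listens for the screen on/off broadcasts: the app counts as visible only when at least one activity is started and the screen is on.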

```kotlin
internal class VisibilityTracker(
  private val listener: (Boolean) -> Unit
) : Application.ActivityLifecycleCallbacks by noOpDelegate(), BroadcastReceiver() {

  private var startedActivityCount = 0

  /**
   * Visible activities are any activity started but not stopped yet. An activity can be paused
   * yet visible: this will happen when another activity shows on top with a transparent background
   * and the activity behind won't get touch inputs but still need to render / animate.
   */
  private var hasVisibleActivities: Boolean = false

  /**
   * Assuming screen on by default.
   */
  private var screenOn: Boolean = true

  private var lastUpdate: Boolean = false

  override fun onActivityStarted(activity: Activity) {
    startedActivityCount++
    if (!hasVisibleActivities && startedActivityCount == 1) {
      hasVisibleActivities = true
      updateVisible()
    }
  }

  override fun onActivityStopped(activity: Activity) {
    // This could happen if the callbacks were registered after some activities were already
    // started. In that case we effectively considers those past activities as not visible.
    if (startedActivityCount > 0) {
      startedActivityCount--
    }
    if (hasVisibleActivities && startedActivityCount == 0 && !activity.isChangingConfigurations) {
      hasVisibleActivities = false
      updateVisible()
    }
  }

  override fun onReceive(
    context: Context,
    intent: Intent
  ) {
    screenOn = intent.action != ACTION_SCREEN_OFF
    updateVisible()
  }

  private fun updateVisible() {
    val visible = screenOn && hasVisibleActivities
    if (visible != lastUpdate) {
      lastUpdate = visible
      listener.invoke(visible)
    }
  }
}

internal fun Application.registerVisibilityListener(listener: (Boolean) -> Unit) {
  val visibilityTracker = VisibilityTracker(listener)
  registerActivityLifecycleCallbacks(visibilityTracker)
  registerReceiver(visibilityTracker, IntentFilter().apply {
    addAction(ACTION_SCREEN_ON)
    addAction(ACTION_SCREEN_OFF)
  })
}
```
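Stripped of Android types, the bookkeeping above is a counter plus a screen flag. The sketch below assumes a hypothetical `VisibilityModel` class whose plain methods stand in for the lifecycle callbacks and the screen broadcast; the counting logic follows the code above.

```kotlin
// Hypothetical JVM-only model of VisibilityTracker: no Android types, callbacks are plain methods.
class VisibilityModel(private val listener: (Boolean) -> Unit) {
    private var startedActivityCount = 0
    private var hasVisibleActivities = false
    private var screenOn = true      // assume the screen is on by default
    private var lastUpdate = false

    fun onActivityStarted() {
        startedActivityCount++
        if (!hasVisibleActivities && startedActivityCount == 1) {
            hasVisibleActivities = true
            updateVisible()
        }
    }

    fun onActivityStopped(isChangingConfigurations: Boolean = false) {
        if (startedActivityCount > 0) startedActivityCount--
        // A stop caused by a configuration change (e.g. rotation) does not make the app invisible.
        if (hasVisibleActivities && startedActivityCount == 0 && !isChangingConfigurations) {
            hasVisibleActivities = false
            updateVisible()
        }
    }

    // Stands in for the ACTION_SCREEN_ON / ACTION_SCREEN_OFF broadcast.
    fun onScreenChanged(on: Boolean) {
        screenOn = on
        updateVisible()
    }

    // Only report actual changes, never the same state twice in a row.
    private fun updateVisible() {
        val visible = screenOn && hasVisibleActivities
        if (visible != lastUpdate) {
            lastUpdate = visible
            listener(visible)
        }
    }
}
```

Usage: starting activity A, starting B on top, stopping A, then turning the screen off and on, and finally stopping B produces the events `true, false, true, false`. During a rotation the old activity stops with `isChangingConfigurations = true`, so `hasVisibleActivities` stays true and the new activity's start does not emit a duplicate event.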

GcTrigger

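```kotlin
fun interface GcTrigger {

  // The single abstract method required by a fun interface.
  fun runGc()

  object Default : GcTrigger {
    override fun runGc() {
      Runtime.getRuntime()
        .gc()
      enqueueReferences()
      System.runFinalization()
    }

    private fun enqueueReferences() {
      // Give the reference queue daemon time to move cleared references to their queues.
      try {
        Thread.sleep(100)
      } catch (e: InterruptedException) {
        throw AssertionError()
      }
    }
  }
}
```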

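runGc() calls Runtime.getRuntime().gc() to request a garbage collection (it is only a request; the VM is not obliged to collect), then calls enqueueReferences(), and finally System.runFinalization() to run pending finalizers. enqueueReferences() simply sleeps for 100 ms so that the reference queue daemon has enough time to move cleared references into their ReferenceQueues.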

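When detection is triggered

As I understand it, the main job of the four watchers is to find the right moment to start leak detection. The actual detection work is delegated to ObjectWatcher.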

ActivityWatcher

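ActivityWatcher registers callbacks with application.registerActivityLifecycleCallbacks to observe the lifecycle of every Activity. When an Activity's onDestroy runs, it calls ObjectWatcher's expectWeaklyReachable, which starts watching the activity to detect whether it leaks.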

```kotlin
class ActivityWatcher(
  private val application: Application,
  private val reachabilityWatcher: ReachabilityWatcher
) : InstallableWatcher {

  private val lifecycleCallbacks =
    object : Application.ActivityLifecycleCallbacks by noOpDelegate() {
      override fun onActivityDestroyed(activity: Activity) {
        reachabilityWatcher.expectWeaklyReachable(
          activity, "${activity::class.java.name} received Activity#onDestroy() callback"
        )
      }
    }

  override fun install() {
    application.registerActivityLifecycleCallbacks(lifecycleCallbacks)
  }

  override fun uninstall() {
    application.unregisterActivityLifecycleCallbacks(lifecycleCallbacks)
  }
}
```

FragmentAndViewModelWatcher

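As the name suggests, it watches Fragments and ViewModels and runs leak detection at the right moment.

- fragmentDestroyWatchers is a list of watchers, one per Fragment flavor (AndroidX, the support library, and the framework's own Fragment):
  - AndroidOFragmentDestroyWatcher
  - AndroidXFragmentDestroyWatcher
  - AndroidSupportFragmentDestroyWatcher
- All of these Fragment watchers work in much the same way: they register callbacks with fragmentManager#registerFragmentLifecycleCallbacks, observe the Fragment lifecycle, and trigger leak detection in onFragmentDestroyed.
- AndroidXFragmentDestroyWatcher

  AndroidX is the most widely used nowadays, so let's briefly look at its implementation.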

```kotlin
internal class AndroidXFragmentDestroyWatcher(
  private val reachabilityWatcher: ReachabilityWatcher
) : (Activity) -> Unit {

  private val fragmentLifecycleCallbacks = object : FragmentManager.FragmentLifecycleCallbacks() {

    override fun onFragmentCreated(
      fm: FragmentManager,
      fragment: Fragment,
      savedInstanceState: Bundle?
    ) {
      ViewModelClearedWatcher.install(fragment, reachabilityWatcher)
    }

    override fun onFragmentViewDestroyed(
      fm: FragmentManager,
      fragment: Fragment
    ) {
      val view = fragment.view
      if (view != null) {
        reachabilityWatcher.expectWeaklyReachable(
          view, "${fragment::class.java.name} received Fragment#onDestroyView() callback " +
          "(references to its views should be cleared to prevent leaks)"
        )
      }
    }

    override fun onFragmentDestroyed(
      fm: FragmentManager,
      fragment: Fragment
    ) {
      reachabilityWatcher.expectWeaklyReachable(
        fragment, "${fragment::class.java.name} received Fragment#onDestroy() callback"
      )
    }
  }

  override fun invoke(activity: Activity) {
    if (activity is FragmentActivity) {
      val supportFragmentManager = activity.supportFragmentManager
      supportFragmentManager.registerFragmentLifecycleCallbacks(fragmentLifecycleCallbacks, true)
      ViewModelClearedWatcher.install(activity, reachabilityWatcher)
    }
  }
}
```

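- The view is watched in onFragmentViewDestroyed and the fragment itself in onFragmentDestroyed, because a fragment's view and the fragment are not necessarily destroyed at the same time (the view can be destroyed while the fragment stays alive, e.g. on the back stack).
- In onFragmentCreated (and for each FragmentActivity), a custom ViewModel is installed: ViewModelClearedWatcher.
- When the fragment is destroyed, ViewModelClearedWatcher's onCleared runs, and at that point the other ViewModels are checked for leaks.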

ViewModelClearedWatcher

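First, some ViewModel background. A ViewModelStoreOwner (usually an activity or a fragment) holds a ViewModelStore, which keeps the created ViewModels in a map called mMap. When the activity is destroyed (other than for a configuration change), the store calls each ViewModel's onCleared method.

Implementation idea:

- mMap in ViewModelStore is private, so it is read via reflection; this gives access to every ViewModel in the current ViewModelStore.
- A custom ViewModel is created for the watched activity or fragment. When this ViewModel is destroyed and its onCleared runs (that is, when the screen is destroyed), it iterates over the other ViewModels in mMap and watches each of them for leaks.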

```kotlin
// This watcher extends ViewModel, so its lifecycle is managed by the ViewModelStoreOwner.
internal class ViewModelClearedWatcher(
  storeOwner: ViewModelStoreOwner,
  private val reachabilityWatcher: ReachabilityWatcher
) : ViewModel() {

  private val viewModelMap: Map<String, ViewModel>?

  init {
    // Use reflection to read the map of all ViewModels held by the store.
    viewModelMap = try {
      val mMapField = ViewModelStore::class.java.getDeclaredField("mMap")
      mMapField.isAccessible = true
      @Suppress("UNCHECKED_CAST")
      mMapField[storeOwner.viewModelStore] as Map<String, ViewModel>
    } catch (ignored: Exception) {
      null
    }
  }

  override fun onCleared() {
    // Called when this ViewModel is cleared: watch every ViewModel in the store for leaks.
    viewModelMap?.values?.forEach { viewModel ->
      reachabilityWatcher.expectWeaklyReachable(
        viewModel, "${viewModel::class.java.name} received ViewModel#onCleared() callback"
      )
    }
  }

  companion object {
    fun install(
      storeOwner: ViewModelStoreOwner,
      reachabilityWatcher: ReachabilityWatcher
    ) {
      val provider = ViewModelProvider(storeOwner, object : Factory {
        @Suppress("UNCHECKED_CAST")
        override fun <T : ViewModel?> create(modelClass: Class<T>): T =
          ViewModelClearedWatcher(storeOwner, reachabilityWatcher) as T
      })
      // Get (and thereby create) the ViewModelClearedWatcher instance.
      provider.get(ViewModelClearedWatcher::class.java)
    }
  }
}
```
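The reflection trick in the init block can be tried on a plain JVM. In this sketch `FakeViewModelStore` is a hypothetical class that, like ViewModelStore, keeps its values in a private field named `mMap`; `readPrivateMap` is also hypothetical and, like the original, returns null instead of crashing when the field cannot be read (for example if a library version renames it).

```kotlin
// Hypothetical stand-in for ViewModelStore: the values live in a private field named mMap.
class FakeViewModelStore {
    private val mMap = HashMap<String, Any>()
    fun put(key: String, value: Any) { mMap[key] = value }
}

// Same approach as ViewModelClearedWatcher's init block: read a private field via reflection,
// and fall back to null if the field is missing or has an unexpected type.
@Suppress("UNCHECKED_CAST")
fun readPrivateMap(store: Any, fieldName: String = "mMap"): Map<String, Any>? = try {
    val field = store.javaClass.getDeclaredField(fieldName)
    field.isAccessible = true
    field.get(store) as Map<String, Any>
} catch (ignored: Exception) {
    null
}
```

Returning null rather than throwing means the watcher degrades to "no ViewModel checks" instead of crashing the app it is debugging.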

Deciding whether a memory leak occurred

class ObjectWatcher constructor(

private val clock: Clock,

private val checkRetainedExecutor: Executor,

/**

* Calls to [watch] will be ignored when [isEnabled] returns false

*/

private val isEnabled: () -> Boolean = { true }

) : ReachabilityWatcher {

private val onObjectRetainedListeners = mutableSetOf<OnObjectRetainedListener>()

/**

* References passed to [watch].

*/

private val watchedObjects = mutableMapOf<String, KeyedWeakReference>()

private val queue = ReferenceQueue<Any>()

/**

* Returns true if there are watched objects that aren't weakly reachable, and

* have been watched for long enough to be considered retained.

*/

val hasRetainedObjects: Boolean

@Synchronized get() {

removeWeaklyReachableObjects()

return watchedObjects.any { it.value.retainedUptimeMillis != -1L }

}

/**

* Returns the number of retained objects, ie the number of watched objects that aren't weakly

* reachable, and have been watched for long enough to be considered retained.

*/

val retainedObjectCount: Int

@Synchronized get() {

removeWeaklyReachableObjects()

return watchedObjects.count { it.value.retainedUptimeMillis != -1L }

}

/**

* Returns true if there are watched objects that aren't weakly reachable, even

* if they haven't been watched for long enough to be considered retained.

*/

val hasWatchedObjects: Boolean

@Synchronized get() {

removeWeaklyReachableObjects()

return watchedObjects.isNotEmpty()

}

/**

* Returns the objects that are currently considered retained. Useful for logging purposes.

* Be careful with those objects and release them ASAP as you may creating longer lived leaks

* then the one that are already there.

*/

val retainedObjects: List<Any>

@Synchronized get() {

removeWeaklyReachableObjects()

val instances = mutableListOf<Any>()

for (weakReference in watchedObjects.values) {

if (weakReference.retainedUptimeMillis != -1L) {

val instance = weakReference.get()

if (instance != null) {

instances.add(instance)

}

}

}

return instances

}

@Synchronized fun addOnObjectRetainedListener(listener: OnObjectRetainedListener) {

onObjectRetainedListeners.add(listener)

}

@Synchronized fun removeOnObjectRetainedListener(listener: OnObjectRetainedListener) {

onObjectRetainedListeners.remove(listener)

}

@Synchronized override fun expectWeaklyReachable(

watchedObject: Any,

description: String

) {

if (!isEnabled()) {

return

}

removeWeaklyReachableObjects()

val key = UUID.randomUUID()

.toString()

val watchUptimeMillis = clock.uptimeMillis()

val reference =

KeyedWeakReference(watchedObject, key, description, watchUptimeMillis, queue)

SharkLog.d {

"Watching " +

(if (watchedObject is Class<*>) watchedObject.toString() else "instance of ${watchedObject.javaClass.name}") +

(if (description.isNotEmpty()) " ($description)" else "") +

" with key $key"

}

watchedObjects[key] = reference

checkRetainedExecutor.execute {

moveToRetained(key)

}

}

/**

* Clears all [KeyedWeakReference] that were created before [heapDumpUptimeMillis] (based on

* [clock] [Clock.uptimeMillis])

*/

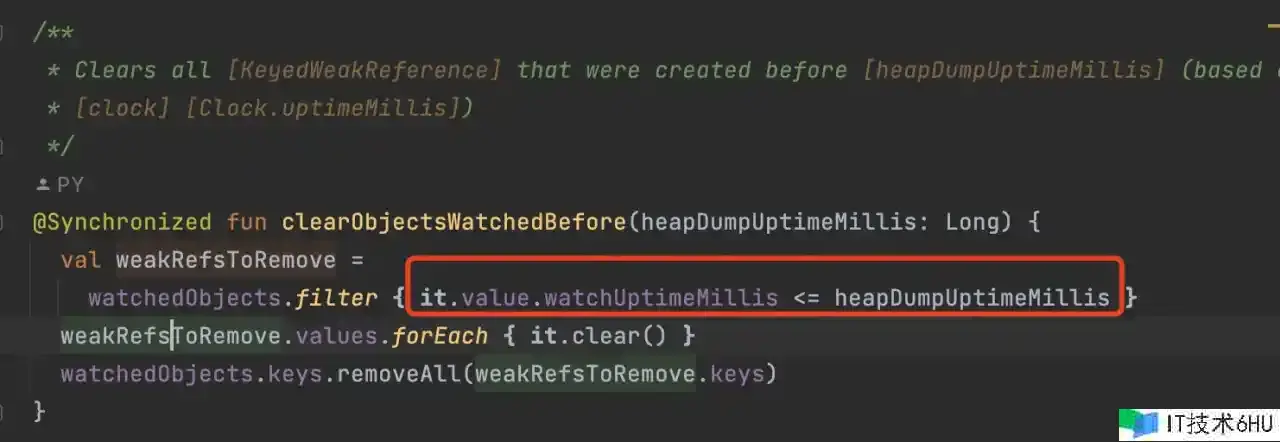

@Synchronized fun clearObjectsWatchedBefore(heapDumpUptimeMillis: Long) {

val weakRefsToRemove =

watchedObjects.filter { it.value.watchUptimeMillis <= heapDumpUptimeMillis }

weakRefsToRemove.values.forEach { it.clear() }

watchedObjects.keys.removeAll(weakRefsToRemove.keys)

}

/**

* Clears all [KeyedWeakReference]

*/

@Synchronized fun clearWatchedObjects() {

watchedObjects.values.forEach { it.clear() }

watchedObjects.clear()

}

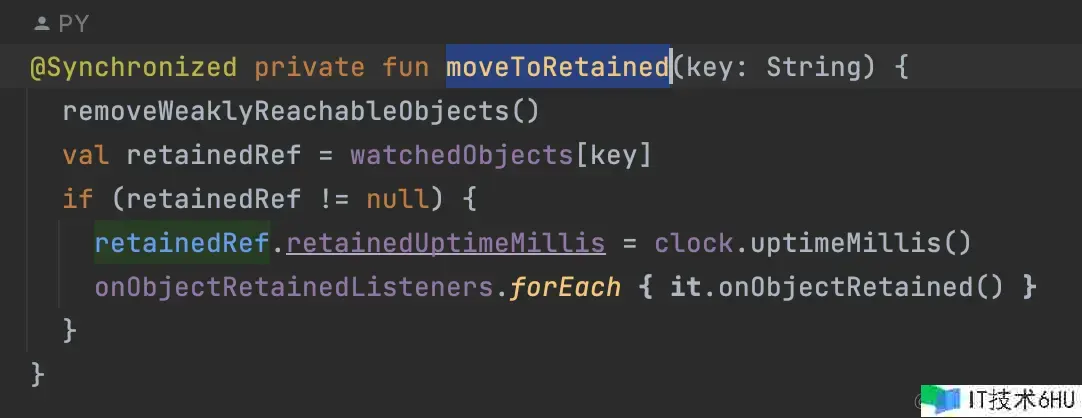

@Synchronized private fun moveToRetained(key: String) {

removeWeaklyReachableObjects()

val retainedRef = watchedObjects[key]

if (retainedRef != null) {

retainedRef.retainedUptimeMillis = clock.uptimeMillis()

onObjectRetainedListeners.forEach { it.onObjectRetained() }

}

}

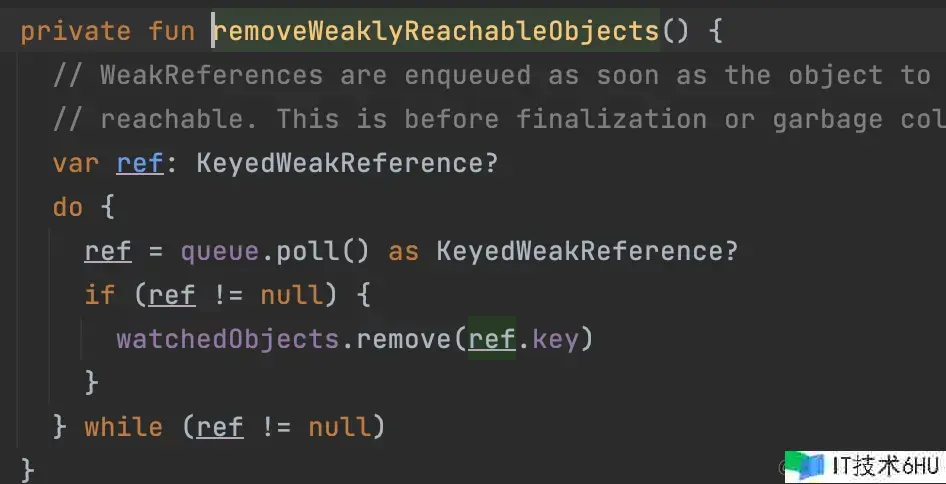

private fun removeWeaklyReachableObjects() {

// WeakReferences are enqueued as soon as the object to which they point to becomes weakly

// reachable. This is before finalization or garbage collection has actually happened.

var ref: KeyedWeakReference?

do {

ref = queue.poll() as KeyedWeakReference?

if (ref != null) {

watchedObjects.remove(ref.key)

}

} while (ref != null)

}

}

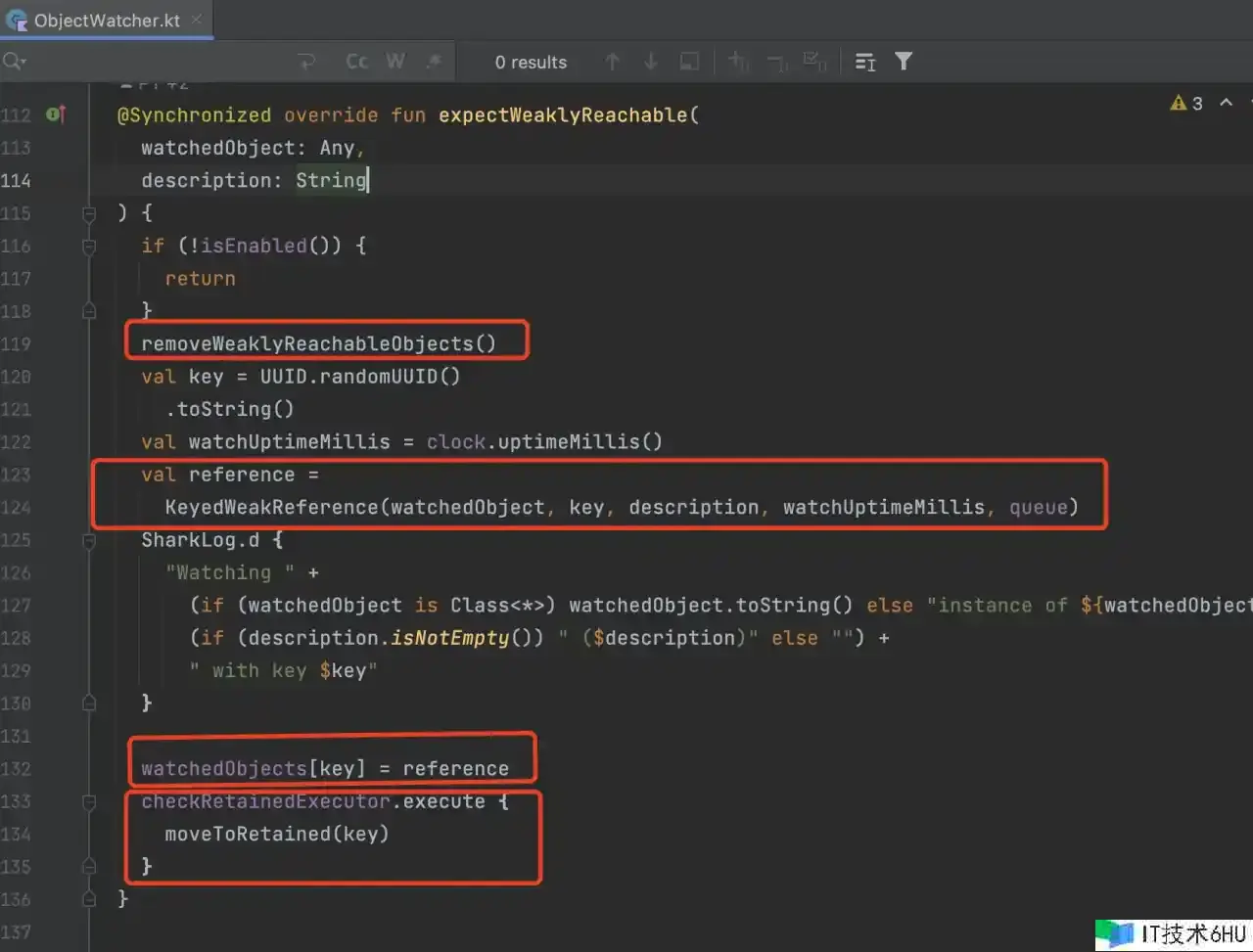

ObjectWatcher#expectWeaklyReachable

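- queue: the reference queue. When a watched object becomes weakly reachable, the GC puts the KeyedWeakReference that pointed to it into this queue (the reference is enqueued, not the object).
- watchedObjects: a mutableMapOf<String, KeyedWeakReference>() that holds every reference currently being watched.
- If a KeyedWeakReference's retainedUptimeMillis is not -1, the object was still alive when the retention check ran, i.e. it is considered retained.
- KeyedWeakReference
  - KeyedWeakReference extends WeakReference. A weak reference does not prevent the GC from reclaiming its referent, and its constructor accepts a ReferenceQueue into which the reference is placed once the referent is reclaimed.
  - A KeyedWeakReference is created for each watched object, with queue passed in. When the object wrapped by the WeakReference is reclaimed, the weak reference itself is added to the reference queue.
  - So whether an instance has been reclaimed can be determined by checking whether its reference appears in the queue. If the object was reclaimed, its key/value entry is removed from watchedObjects.
- checkRetainedExecutor: runs moveToRetained after a delay of 5 seconds.
  - removeWeaklyReachableObjects: removes the objects that have already been garbage-collected from watchedObjects.
  - moveToRetained first calls removeWeaklyReachableObjects() to drop the collected objects; whatever remains can be considered retained (it could not be collected).
  - removeWeaklyReachableObjects polls the reference queue and, for every reclaimed object, removes the matching entry from watchedObjects.
  - It then checks whether watchedObjects still contains the reference. If not, the object has been reclaimed; if it does, a memory leak may have occurred. (Note the distinction: the final decision is made through watchedObjects, not through the queue.)
  - It calls onObjectRetained to notify external listeners, which handle the next steps, for example calling Debug.dumpHprofData() to dump an hprof file from the VM.
  - After a heap snapshot has been dumped, clearObjectsWatchedBefore is called with the dump timestamp to clear the watched objects from watchedObjects, so they are not checked again.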
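The mechanism above fits in a small class. This is a simplified re-implementation written for illustration, not LeakCanary's ObjectWatcher: `KeyedRef`, `MiniObjectWatcher`, the `scheduleCheck` hook (standing in for checkRetainedExecutor) and the `simulateCollected` test hook are all hypothetical. The test hook uses the real JDK method `Reference.enqueue()` so the behavior can be exercised without depending on when the GC actually runs.

```kotlin
import java.lang.ref.ReferenceQueue
import java.lang.ref.WeakReference
import java.util.UUID

// Weak reference carrying the key under which it is stored in watchedObjects.
class KeyedRef(
    referent: Any,
    val key: String,
    queue: ReferenceQueue<Any>,
) : WeakReference<Any>(referent, queue) {
    @Volatile var retainedUptimeMillis = -1L   // -1 means "not (yet) considered retained"
}

class MiniObjectWatcher(
    private val clock: () -> Long,
    private val scheduleCheck: (Runnable) -> Unit,   // stands in for checkRetainedExecutor
    private val onRetained: () -> Unit,
) {
    private val watchedObjects = mutableMapOf<String, KeyedRef>()
    private val queue = ReferenceQueue<Any>()

    @Synchronized fun expectWeaklyReachable(obj: Any): String {
        removeWeaklyReachableObjects()
        val key = UUID.randomUUID().toString()
        watchedObjects[key] = KeyedRef(obj, key, queue)
        scheduleCheck(Runnable { moveToRetained(key) })
        return key
    }

    @Synchronized fun retainedObjectCount(): Int {
        removeWeaklyReachableObjects()
        return watchedObjects.count { it.value.retainedUptimeMillis != -1L }
    }

    @Synchronized private fun moveToRetained(key: String) {
        removeWeaklyReachableObjects()
        val ref = watchedObjects[key] ?: return   // already collected: not a leak
        ref.retainedUptimeMillis = clock()
        onRetained()
    }

    // Drops every entry whose reference has been enqueued, i.e. whose referent was collected.
    private fun removeWeaklyReachableObjects() {
        while (true) {
            val ref = (queue.poll() as KeyedRef?) ?: break
            watchedObjects.remove(ref.key)
        }
    }

    // Test hook: simulate the GC enqueuing the reference for `key`.
    @Synchronized fun simulateCollected(key: String) {
        watchedObjects[key]?.enqueue()
    }
}
```

Usage: watch two objects, mark one as collected, then run the delayed checks. Only the object that is still in `watchedObjects` is reported as retained, which mirrors the note above that the final decision is made through `watchedObjects`.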

Dumping the heap snapshot

HeapDumpTrigger#dumpHeap

```kotlin
private fun dumpHeap(
  retainedReferenceCount: Int,
  retry: Boolean,
  reason: String
) {
  val directoryProvider =
    InternalLeakCanary.createLeakDirectoryProvider(InternalLeakCanary.application)
  val heapDumpFile = directoryProvider.newHeapDumpFile()

  val durationMillis: Long
  if (currentEventUniqueId == null) {
    currentEventUniqueId = UUID.randomUUID().toString()
  }
  try {
    InternalLeakCanary.sendEvent(DumpingHeap(currentEventUniqueId!!))
    if (heapDumpFile == null) {
      throw RuntimeException("Could not create heap dump file")
    }
    saveResourceIdNamesToMemory()
    val heapDumpUptimeMillis = SystemClock.uptimeMillis()
    KeyedWeakReference.heapDumpUptimeMillis = heapDumpUptimeMillis
    durationMillis = measureDurationMillis {
      configProvider().heapDumper.dumpHeap(heapDumpFile)
    }
    if (heapDumpFile.length() == 0L) {
      throw RuntimeException("Dumped heap file is 0 byte length")
    }
    lastDisplayedRetainedObjectCount = 0
    lastHeapDumpUptimeMillis = SystemClock.uptimeMillis()
    // Objects watched before the dump are now captured in the hprof: stop watching them.
    objectWatcher.clearObjectsWatchedBefore(heapDumpUptimeMillis)
    currentEventUniqueId = UUID.randomUUID().toString()
    InternalLeakCanary.sendEvent(HeapDump(currentEventUniqueId!!, heapDumpFile, durationMillis, reason))
  } catch (throwable: Throwable) {
    InternalLeakCanary.sendEvent(HeapDumpFailed(currentEventUniqueId!!, throwable, retry))
    if (retry) {
      scheduleRetainedObjectCheck(
        delayMillis = WAIT_AFTER_DUMP_FAILED_MILLIS
      )
    }
    showRetainedCountNotification(
      objectCount = retainedReferenceCount,
      contentText = application.getString(
        R.string.leak_canary_notification_retained_dump_failed
      )
    )
    return
  }
}
```
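The actual dump is delegated to a pluggable dumper through `configProvider().heapDumper.dumpHeap(heapDumpFile)`. To see the same shape outside Android, here is a sketch that assumes a HotSpot-based desktop JVM: the `HeapDumper` interface is a local stand-in shaped after that call, and `HotSpotHeapDumper` is hypothetical. It writes the file through the JDK's `HotSpotDiagnosticMXBean.dumpHeap` instead of Android's `Debug.dumpHprofData()`.

```kotlin
import com.sun.management.HotSpotDiagnosticMXBean
import java.io.File
import java.lang.management.ManagementFactory

// Local stand-in shaped after the call above: heapDumper.dumpHeap(heapDumpFile).
fun interface HeapDumper {
    fun dumpHeap(heapDumpFile: File)
}

// Assumption: running on a HotSpot-based JVM, not on Android.
object HotSpotHeapDumper : HeapDumper {
    override fun dumpHeap(heapDumpFile: File) {
        val bean = ManagementFactory.getPlatformMXBean(HotSpotDiagnosticMXBean::class.java)
        // live = true: only objects reachable from others are written. The target file must
        // not exist yet, and recent JDKs require the ".hprof" suffix.
        bean.dumpHeap(heapDumpFile.absolutePath, true)
    }
}
```

Keeping the dumper behind a one-method interface is what makes it swappable, which is how a child-process dumper like the KOOM one discussed later can replace the default.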

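- HeapDumper: the implementation that writes the heap snapshot file. It is read from the config (configProvider().heapDumper), so it can be replaced with a custom implementation.
- The default implementation is AndroidDebugHeapDumper, which calls Debug.dumpHprofData() to dump an hprof file from the VM.
- Conditions for calling dumpHeap: while the app is visible, the number of retained objects must reach config.retainedVisibleThreshold (5 by default), and at most one heap dump happens every 60 s.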

```kotlin
private fun checkRetainedObjects() {
  val iCanHasHeap = HeapDumpControl.iCanHasHeap()
  val config = configProvider()

  if (iCanHasHeap is Nope) {
    if (iCanHasHeap is NotifyingNope) {
      // Before notifying that we can't dump heap, let's check if we still have retained object.
      var retainedReferenceCount = objectWatcher.retainedObjectCount
      if (retainedReferenceCount > 0) {
        gcTrigger.runGc()
        retainedReferenceCount = objectWatcher.retainedObjectCount
      }

      val nopeReason = iCanHasHeap.reason()
      val wouldDump = !checkRetainedCount(
        retainedReferenceCount, config.retainedVisibleThreshold, nopeReason
      )

      if (wouldDump) {
        val uppercaseReason = nopeReason[0].toUpperCase() + nopeReason.substring(1)
        onRetainInstanceListener.onEvent(DumpingDisabled(uppercaseReason))
        showRetainedCountNotification(
          objectCount = retainedReferenceCount,
          contentText = uppercaseReason
        )
      }
    } else {
      SharkLog.d {
        application.getString(
          R.string.leak_canary_heap_dump_disabled_text, iCanHasHeap.reason()
        )
      }
    }
    return
  }

  var retainedReferenceCount = objectWatcher.retainedObjectCount
  if (retainedReferenceCount > 0) {
    gcTrigger.runGc()
    retainedReferenceCount = objectWatcher.retainedObjectCount
  }

  if (checkRetainedCount(retainedReferenceCount, config.retainedVisibleThreshold)) return

  val now = SystemClock.uptimeMillis()
  val elapsedSinceLastDumpMillis = now - lastHeapDumpUptimeMillis
  if (elapsedSinceLastDumpMillis < WAIT_BETWEEN_HEAP_DUMPS_MILLIS) {
    onRetainInstanceListener.onEvent(DumpHappenedRecently)
    showRetainedCountNotification(
      objectCount = retainedReferenceCount,
      contentText = application.getString(R.string.leak_canary_notification_retained_dump_wait)
    )
    scheduleRetainedObjectCheck(
      delayMillis = WAIT_BETWEEN_HEAP_DUMPS_MILLIS - elapsedSinceLastDumpMillis
    )
    return
  }

  dismissRetainedCountNotification()
  val visibility = if (applicationVisible) "visible" else "not visible"
  dumpHeap(
    retainedReferenceCount = retainedReferenceCount,
    retry = true,
    reason = "$retainedReferenceCount retained objects, app is $visibility"
  )
}
```

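- First, objectWatcher.retainedObjectCount gives the number of objects still held by objectWatcher. If more than 0 objects remain, a GC is forced and the count is read again.
- checkRetainedCount decides whether to dump right away; it returns false when a dump should happen now.
- When a dump is due, the code checks whether less than one minute has passed since the previous dump. If so, it shows a notification saying "Last heap dump was less than a minute ago" and calls scheduleRetainedObjectCheck to check again once the rest of the minute has elapsed.
- If at least one minute has passed since the previous dump, dumpHeap is called.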
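The decision flow just described can be distilled into a pure function. This is a hypothetical simplification, not LeakCanary's code: `DumpDecision` and `decide` are illustrative names, the defaults 5 and 60 000 ms mirror the threshold and dump interval described above, and any additional waiting the real checkRetainedCount may apply (plus the notifications and rescheduling) is ignored.

```kotlin
// Hypothetical decision table distilled from checkRetainedObjects() / checkRetainedCount().
sealed class DumpDecision {
    object NothingRetained : DumpDecision()
    object BelowThreshold : DumpDecision()
    data class WaitForInterval(val delayMillis: Long) : DumpDecision()
    object Dump : DumpDecision()
}

fun decide(
    retainedCount: Int,                       // count measured after an explicit GC
    appVisible: Boolean,
    nowMillis: Long,
    lastHeapDumpUptimeMillis: Long,
    retainedVisibleThreshold: Int = 5,        // default threshold while the app is visible
    waitBetweenDumpsMillis: Long = 60_000L,   // at most one dump per minute
): DumpDecision {
    if (retainedCount == 0) return DumpDecision.NothingRetained
    // While the user is looking at the app, wait until several objects pile up so a heap dump
    // (which freezes the app) is not triggered for every single retained object.
    if (appVisible && retainedCount < retainedVisibleThreshold) return DumpDecision.BelowThreshold
    val elapsed = nowMillis - lastHeapDumpUptimeMillis
    if (elapsed < waitBetweenDumpsMillis) {
        return DumpDecision.WaitForInterval(waitBetweenDumpsMillis - elapsed)
    }
    return DumpDecision.Dump
}
```

For example, 5 retained objects at t = 70 s with the last dump at t = 30 s yields `WaitForInterval(20000)`, matching the "check again once the rest of the minute has elapsed" step.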

Analyzing the heap snapshot

BackgroundThreadHeapAnalyzer 和 AndroidDebugHeapAnalyzer

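A dedicated background thread analyzes the heap dump through AndroidDebugHeapAnalyzer.runAnalysisBlocking, sending callback events during and after the analysis.

Under the hood it calls the Shark library to analyze the object graph and finds the leaking objects using the leak rules defined in AndroidObjectInspectors.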

```kotlin
object BackgroundThreadHeapAnalyzer : EventListener {

  internal val heapAnalyzerThreadHandler by lazy {
    val handlerThread = HandlerThread("HeapAnalyzer")
    handlerThread.start()
    Handler(handlerThread.looper)
  }

  override fun onEvent(event: Event) {
    if (event is HeapDump) {
      heapAnalyzerThreadHandler.post {
        val doneEvent = AndroidDebugHeapAnalyzer.runAnalysisBlocking(event) { event ->
          InternalLeakCanary.sendEvent(event)
        }
        InternalLeakCanary.sendEvent(doneEvent)
      }
    }
  }
}
```

```kotlin
fun runAnalysisBlocking(
  heapDumped: HeapDump,
  isCanceled: () -> Boolean = { false },
  progressEventListener: (HeapAnalysisProgress) -> Unit
): HeapAnalysisDone<*> {
  ...
  // Heap dump file and the reason it was taken
  val heapDumpFile = heapDumped.file
  val heapDumpReason = heapDumped.reason

  // Analyze the heap only if the dump file exists
  val heapAnalysis = if (heapDumpFile.exists()) {
    analyzeHeap(heapDumpFile, progressListener, isCanceled)
  } else {
    missingFileFailure(heapDumpFile)
  }

  // Post-process the analysis result
  val fullHeapAnalysis = when (heapAnalysis) {
    ...
  }

  // Report progress: the report is being generated
  progressListener.onAnalysisProgress(REPORTING_HEAP_ANALYSIS)

  // Store the analysis result in the database
  val analysisDoneEvent = ScopedLeaksDb.writableDatabase(application) { db ->
    val id = HeapAnalysisTable.insert(db, heapAnalysis)
    when (fullHeapAnalysis) {
      is HeapAnalysisSuccess -> {
        val showIntent = LeakActivity.createSuccessIntent(application, id)
        val leakSignatures = fullHeapAnalysis.allLeaks.map { it.signature }.toSet()
        val leakSignatureStatuses = LeakTable.retrieveLeakReadStatuses(db, leakSignatures)
        val unreadLeakSignatures = leakSignatureStatuses.filter { (_, read) ->
          !read
        }.keys.toSet()
        HeapAnalysisSucceeded(
          heapDumped.uniqueId,
          fullHeapAnalysis,
          unreadLeakSignatures,
          showIntent
        )
      }
      is HeapAnalysisFailure -> {
        val showIntent = LeakActivity.createFailureIntent(application, id)
        HeapAnalysisFailed(heapDumped.uniqueId, fullHeapAnalysis, showIntent)
      }
    }
  }

  // Notify the listener that the heap analysis is done
  LeakCanary.config.onHeapAnalyzedListener.onHeapAnalyzed(fullHeapAnalysis)
  return analysisDoneEvent
}
```
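The threading pattern (a single dedicated analysis thread that reacts to HeapDump events and reports progress plus a final "done" event) can be sketched with `java.util.concurrent` instead of Android's HandlerThread. The `Event` hierarchy and `BackgroundAnalyzer` below are hypothetical and much smaller than LeakCanary's; the `analyze` function stands in for runAnalysisBlocking.

```kotlin
import java.util.concurrent.Executors
import java.util.concurrent.TimeUnit

// Hypothetical event types; LeakCanary's real Event hierarchy is richer.
sealed class Event {
    data class HeapDump(val path: String) : Event()
    data class Progress(val step: String) : Event()
    data class AnalysisDone(val path: String, val leakCount: Int) : Event()
}

// One dedicated analysis thread, in the spirit of BackgroundThreadHeapAnalyzer's HandlerThread.
class BackgroundAnalyzer(
    // Stand-in for runAnalysisBlocking: (file path, progress callback) -> number of leaks.
    private val analyze: (String, (String) -> Unit) -> Int,
    private val sendEvent: (Event) -> Unit,
) {
    private val executor = Executors.newSingleThreadExecutor { r -> Thread(r, "HeapAnalyzer") }

    fun onEvent(event: Event) {
        if (event is Event.HeapDump) {           // every other event is ignored
            executor.execute {
                val leaks = analyze(event.path) { step -> sendEvent(Event.Progress(step)) }
                sendEvent(Event.AnalysisDone(event.path, leaks))
            }
        }
    }

    fun shutdownAndWait() {
        executor.shutdown()
        executor.awaitTermination(5, TimeUnit.SECONDS)
    }
}
```

A single-thread executor keeps analyses strictly sequential, so two large hprof files are never parsed at the same time.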

For example, for Activity leaks: if an Activity's mDestroyed field is true but the activity is still in the heap, the activity is considered leaked.

```kotlin
ACTIVITY {
  override val leakingObjectFilter = { heapObject: HeapObject ->
    heapObject is HeapInstance &&
      heapObject instanceOf "android.app.Activity" &&
      heapObject["android.app.Activity", "mDestroyed"]?.value?.asBoolean == true
  }

  override fun inspect(
    reporter: ObjectReporter
  ) {
    reporter.whenInstanceOf("android.app.Activity") { instance ->
      // Activity.mDestroyed was introduced in 17.
      // https://android.googlesource.com/platform/frameworks/base/+
      // /6d9dcbccec126d9b87ab6587e686e28b87e5a04d
      val field = instance["android.app.Activity", "mDestroyed"]
      if (field != null) {
        if (field.value.asBoolean!!) {
          leakingReasons += field describedWithValue "true"
        } else {
          notLeakingReasons += field describedWithValue "false"
        }
      }
    }
  }
}
```
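The leakingObjectFilter rule is easy to restate over a toy heap model. In this sketch `FakeInstance` and `activityLeakFilter` are hypothetical and much simpler than Shark's HeapObject/HeapInstance; they only show the rule itself: an instance of android.app.Activity (or a subclass) whose mDestroyed field is true.

```kotlin
// Hypothetical, heavily simplified heap instance; Shark's HeapInstance API is richer.
data class FakeInstance(
    val classHierarchy: List<String>,   // own class first, then superclasses
    val fields: Map<String, Any?>,      // "declaringClass.fieldName" -> value
) {
    infix fun instanceOf(className: String) = className in classHierarchy
    operator fun get(declaringClass: String, field: String): Any? = fields["$declaringClass.$field"]
}

// Same rule as the ACTIVITY inspector's leakingObjectFilter: an Activity that is still in the
// heap although its mDestroyed flag is true.
val activityLeakFilter: (FakeInstance) -> Boolean = { instance ->
    instance instanceOf "android.app.Activity" &&
        instance["android.app.Activity", "mDestroyed"] == true
}
```

Note that a missing field (devices older than API 17, per the comment above) makes the filter return false, so such an instance is simply not flagged.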

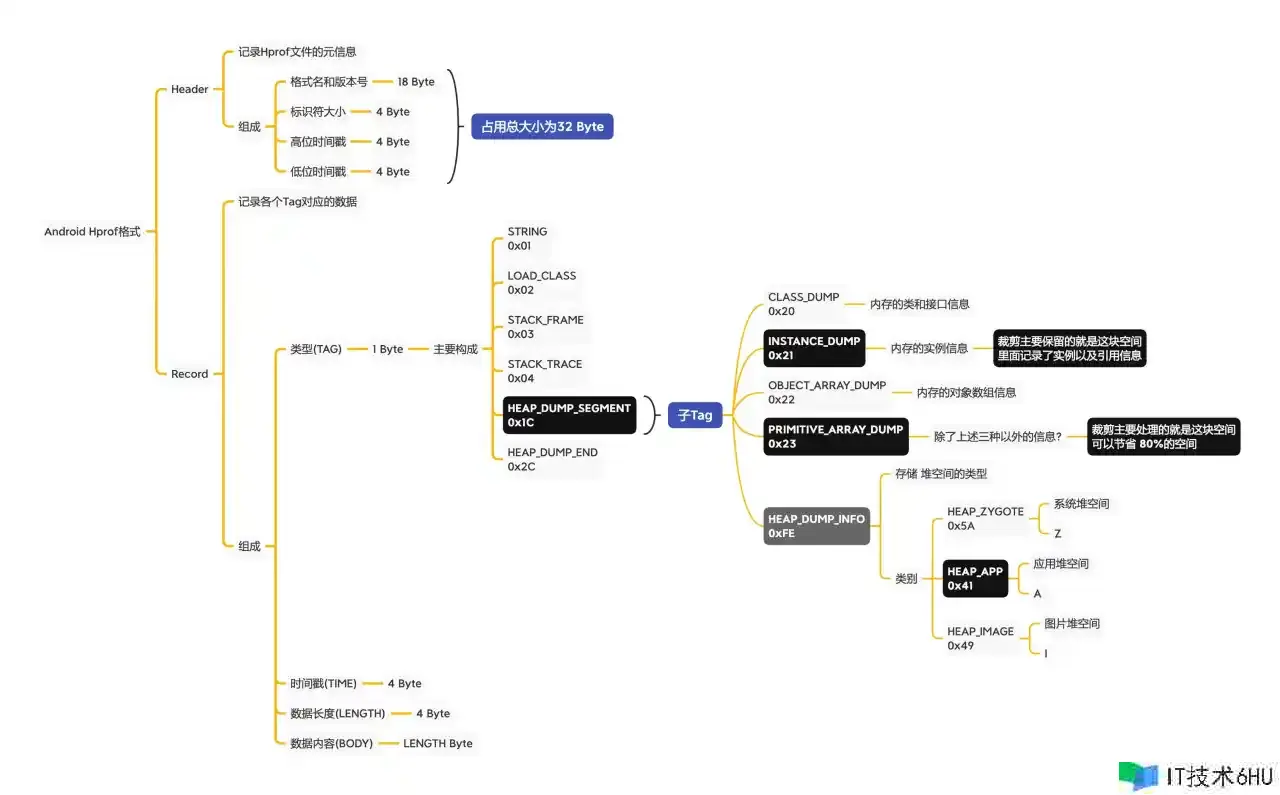

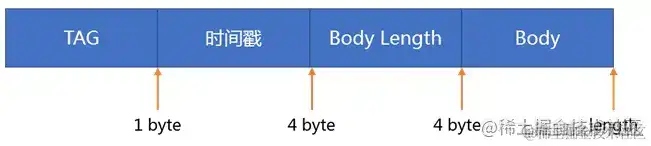

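Understanding the HPROF file structure

File format definition

HPROF is a binary file format produced by the HPROF JVMTI agent. For the exact format, see the official documentation: HPROF file format definition.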

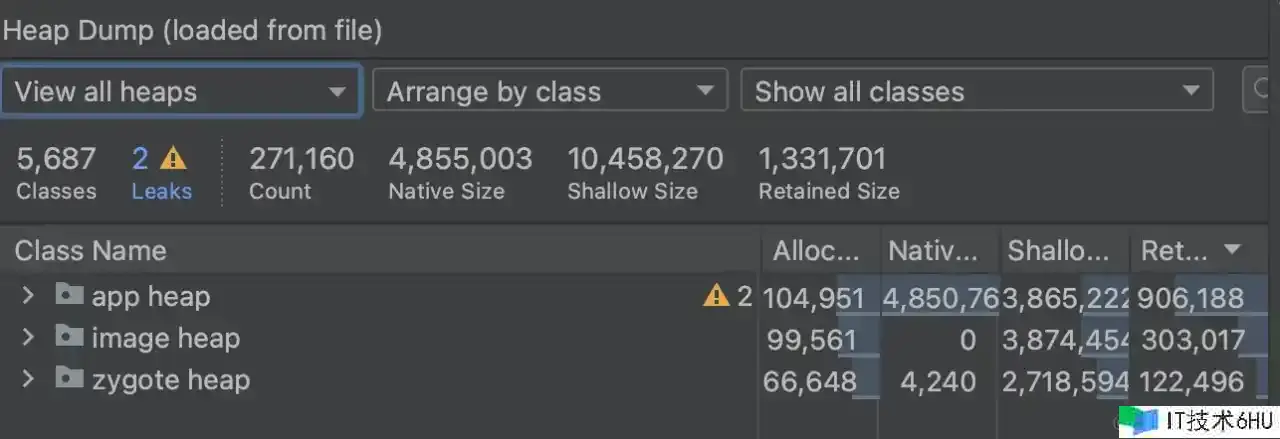

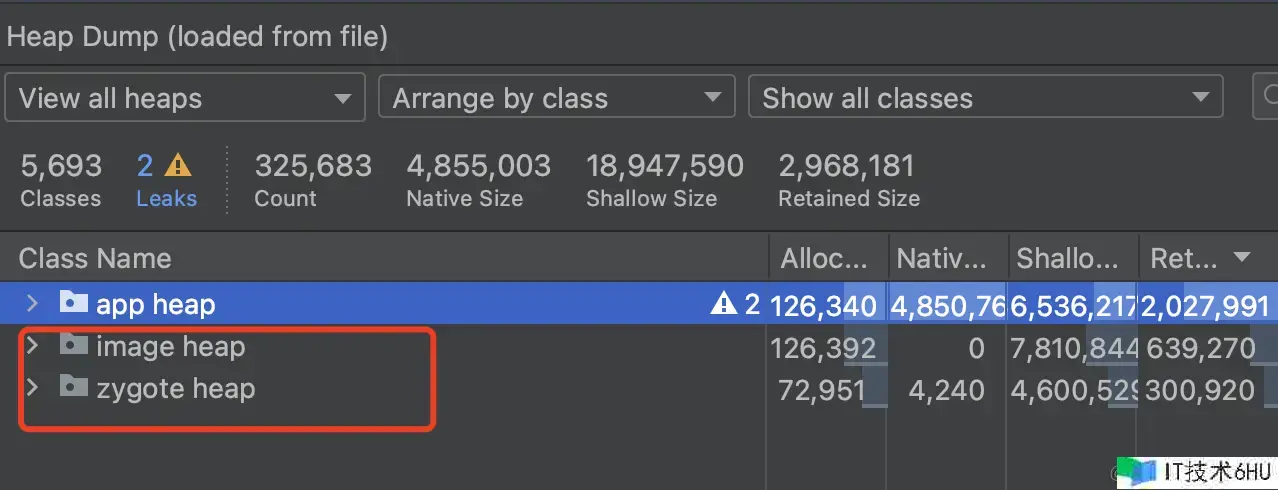

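As shown above, an HPROF file consists of a header and a body. The body is a sequence of records, and each record's type is identified by its TAG.

Corresponding data structures

Header: HprofHeader holds the file's metadata.

Body: each HprofRecord represents one HPROF record. For example, StringRecord represents a string, ClassDumpRecord the information about a class or interface in memory, and InstanceDumpRecord an instance in memory.

Reading an HPROF file

Overall process: read the header first, then the records. Each record's TAG determines its type, and its fields are read in the order defined by the HPROF agent format.

- Parsing the header

Calling HprofHeader#parseHeaderOf parses the header information.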

```kotlin
fun parseHeaderOf(source: BufferedSource): HprofHeader {
  require(!source.exhausted()) {
    throw IllegalArgumentException("Source has no available bytes")
  }
  // Version string
  val endOfVersionString = source.indexOf(0)
  val versionName = source.readUtf8(endOfVersionString)
  val version = supportedVersions[versionName]
  checkNotNull(version) {
    "Unsupported Hprof version [$versionName] not in supported list ${supportedVersions.keys}"
  }
  // Skip the 0 at the end of the version string.
  source.skip(1)
  // Size of identifiers (object IDs) in bytes
  val identifierByteSize = source.readInt()
  // Timestamp of the heap dump
  val heapDumpTimestamp = source.readLong()
  return HprofHeader(heapDumpTimestamp, version, identifierByteSize)
}
```
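The same header layout can be parsed from a plain byte array with `java.nio.ByteBuffer`, whose default byte order (big-endian) matches HPROF. This is a minimal sketch; `Header` and `parseHeader` are hypothetical names, and unlike parseHeaderOf it does not validate the version against a list of supported versions.

```kotlin
import java.nio.ByteBuffer

data class Header(val version: String, val identifierByteSize: Int, val heapDumpTimestamp: Long)

fun parseHeader(bytes: ByteArray): Header {
    val buffer = ByteBuffer.wrap(bytes)                    // big-endian by default, like HPROF
    // 1) Null-terminated version string, e.g. "JAVA PROFILE 1.0.3".
    val end = bytes.indexOf(0.toByte())
    require(end > 0) { "No null-terminated version string" }
    val version = String(bytes, 0, end, Charsets.UTF_8)
    buffer.position(end + 1)                               // skip the trailing 0
    // 2) u4: size of identifiers (object IDs), usually 4 or 8.
    val identifierByteSize = buffer.getInt()
    // 3) u8: timestamp in milliseconds since the epoch.
    val timestamp = buffer.getLong()
    return Header(version, identifierByteSize, timestamp)
}
```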

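- Parsing record contents

Reading starts right where the header ends.

A while loop reads the records one after another.

Each record's TAG and length determine how its body is read; the corresponding Record object is created and kept for later queries. Tags that were not requested are skipped by their length.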

```kotlin
while (!source.exhausted()) {
  // type of the record
  val tag = reader.readUnsignedByte()
  // number of microseconds since the time stamp in the header
  reader.skip(intByteSize)
  // number of bytes that follow and belong to this record
  val length = reader.readUnsignedInt()

  when (tag) {
    // String record
    STRING_IN_UTF8.tag -> {
      if (STRING_IN_UTF8 in recordTags) {
        listener.onHprofRecord(STRING_IN_UTF8, length, reader)
      } else {
        reader.skip(length)
      }
    }
    // ... other record tags omitted
  }
}
```
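The record loop can be reproduced over a byte array. In this sketch `readStrings` is a hypothetical helper: it walks top-level records (u1 tag, u4 time offset, u4 body length, body), decodes only STRING_IN_UTF8 records (tag 0x01: an identifier followed by UTF-8 bytes), and skips every other body by its length, as the `reader.skip(length)` branch above does. It assumes 4-byte identifiers unless told otherwise.

```kotlin
import java.nio.ByteBuffer

// Tag value of STRING_IN_UTF8 records in the HPROF format.
val STRING_IN_UTF8_TAG = 0x01

fun readStrings(records: ByteArray, identifierByteSize: Int = 4): Map<Long, String> {
    val buffer = ByteBuffer.wrap(records)                  // big-endian, like HPROF
    val strings = mutableMapOf<Long, String>()
    while (buffer.hasRemaining()) {
        val tag = buffer.get().toInt() and 0xFF            // u1 record type
        buffer.getInt()                                    // u4 microseconds since header timestamp
        val length = buffer.getInt().toLong() and 0xFFFFFFFFL  // u4 body length (unsigned)
        if (tag == STRING_IN_UTF8_TAG) {
            val id = if (identifierByteSize == 8) buffer.getLong() else (buffer.getInt().toLong() and 0xFFFFFFFFL)
            val utf8 = ByteArray((length - identifierByteSize).toInt())
            buffer.get(utf8)
            strings[id] = String(utf8, Charsets.UTF_8)
        } else {
            // Not requested: skip the body by its length.
            buffer.position(buffer.position() + length.toInt())
        }
    }
    return strings
}
```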

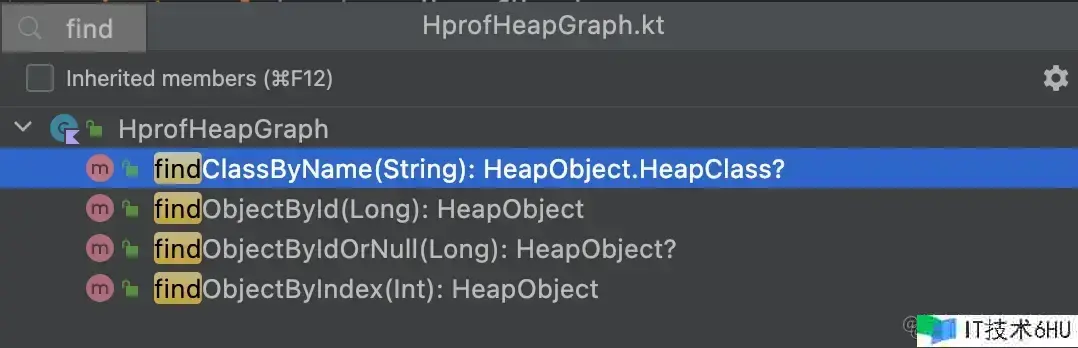

Querying with HprofHeapGraph

The records read above are stored in HprofHeapGraph, which can then be queried for the heap data that matches given conditions.

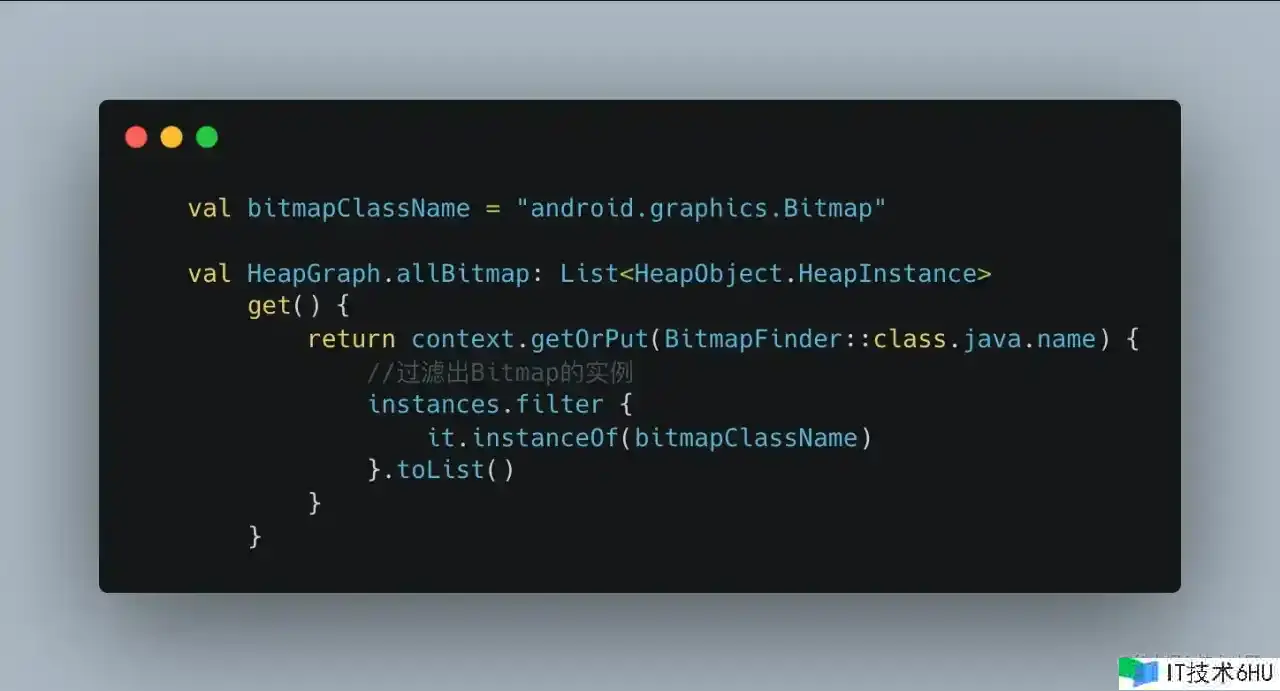

For example, to find all Bitmap objects in memory:

- Find the Class corresponding to Bitmap.
- Go through the instances in memory and keep those whose class is bitmapClassName.
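The two steps can be sketched over a toy graph. `HeapClassRecord`, `HeapInstanceRecord`, `SimpleHeapGraph` and `findBitmaps` are hypothetical stand-ins, not Shark's API; they only model "look up a class by name, then filter instances by class id".

```kotlin
// Hypothetical, simplified stand-ins for what an indexed heap graph exposes.
data class HeapClassRecord(val id: Long, val name: String)
data class HeapInstanceRecord(val id: Long, val classId: Long)

class SimpleHeapGraph(classes: List<HeapClassRecord>, val instances: List<HeapInstanceRecord>) {
    private val classesByName = classes.associateBy { it.name }
    fun findClassByName(name: String): HeapClassRecord? = classesByName[name]
}

fun findBitmaps(graph: SimpleHeapGraph): List<HeapInstanceRecord> {
    // Step 1: find the class record for android.graphics.Bitmap (absent if never loaded).
    val bitmapClass = graph.findClassByName("android.graphics.Bitmap") ?: return emptyList()
    // Step 2: keep the instances of that class. Bitmap is final, so an exact match suffices.
    return graph.instances.filter { it.classId == bitmapClass.id }
}
```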

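Custom Dumper implementation

- By default LeakCanary uses its own AndroidHeapDumper, which freezes the app process while dumping.
- Consider integrating KOOM's koom-fast-dump instead, which dumps the hprof file in a child process.

Dumping the heap in a child process

- The native dump suspends every thread in the process, captures the snapshot file, and then resumes all JVM threads. This takes a while and can cause jank or even ANRs. In other words, the app freezes even when the dump is started from a background thread, which is why LeakCanary cannot be deployed in production.
- Kuaishou instead uses Linux copy-on-write (COW) and forks a child process to perform the dump. To keep the child from getting stuck, the JVM threads are suspended in the parent process first.

KOOM proposes a way to dump hprof without freezing the app: fork a child process. The overall flow is:

- The parent process suspends the JVM.
- The parent process forks a child process.
- The parent process resumes the JVM; a thread then waits for the child to finish and obtains the hprof.
- The child process calls Debug.dumpHprofData to generate the hprof and exits.
- The parent process starts a Service in another process that parses the hprof and builds the GC root paths.

Throughout, the app is frozen only briefly around the fork, which is acceptable. Because fork uses copy-on-write, the child process inherits the parent's memory without copying it up front.

Source: ForkJvmHeapDumper is the Java-layer entry point, and hprof_dump implements the native logic.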

```java
public class ForkJvmHeapDumper implements HeapDumper {

  @Override
  public boolean dump(String path) {
    // ...
    boolean dumpRes = false;
    try {
      int pid = trySuspendVMThenFork();
      if (pid == 0) { // child process
        Debug.dumpHprofData(path);
        KLog.i(TAG, "notifyDumped:" + dumpRes);
        //System.exit(0);
        exitProcess();
      } else { // parent process
        resumeVM();
        dumpRes = waitDumping(pid);
        KLog.i(TAG, "hprof pid:" + pid + " dumped: " + path);
      }
    } catch (IOException e) {
      e.printStackTrace();
      KLog.e(TAG, "dump failed caused by IOException!");
    }
    return dumpRes;
  }
}
```

```cpp
HprofDump::HprofDump() : init_done_(false), android_api_(0) {
  android_api_ = android_get_device_api_level();
}

void HprofDump::Initialize() {
  if (init_done_ || android_api_ < __ANDROID_API_L__) {
    return;
  }

  void *handle = kwai::linker::DlFcn::dlopen("libart.so", RTLD_NOW);
  KCHECKV(handle)

  if (android_api_ < __ANDROID_API_R__) {
    // Android 5.0 to 10: art::Dbg::SuspendVM / ResumeVM
    suspend_vm_fnc_ =
        (void (*)())DlFcn::dlsym(handle, "_ZN3art3Dbg9SuspendVMEv");
    KFINISHV_FNC(suspend_vm_fnc_, DlFcn::dlclose, handle)

    resume_vm_fnc_ = (void (*)())kwai::linker::DlFcn::dlsym(
        handle, "_ZN3art3Dbg8ResumeVMEv");
    KFINISHV_FNC(resume_vm_fnc_, DlFcn::dlclose, handle)
  } else if (android_api_ <= __ANDROID_API_S__) {
    // Android 11 and 12: ScopedSuspendAll, ScopedGCCriticalSection and the mutator lock
    // Over size for device compatibility
    ssa_instance_ = std::make_unique<char[]>(64);
    sgc_instance_ = std::make_unique<char[]>(64);

    ssa_constructor_fnc_ = (void (*)(void *, const char *, bool))DlFcn::dlsym(
        handle, "_ZN3art16ScopedSuspendAllC1EPKcb");
    KFINISHV_FNC(ssa_constructor_fnc_, DlFcn::dlclose, handle)

    ssa_destructor_fnc_ =
        (void (*)(void *))DlFcn::dlsym(handle, "_ZN3art16ScopedSuspendAllD1Ev");
    KFINISHV_FNC(ssa_destructor_fnc_, DlFcn::dlclose, handle)

    sgc_constructor_fnc_ =
        (void (*)(void *, void *, GcCause, CollectorType))DlFcn::dlsym(
            handle,
            "_ZN3art2gc23ScopedGCCriticalSectionC1EPNS_6ThreadENS0_"
            "7GcCauseENS0_13CollectorTypeE");
    KFINISHV_FNC(sgc_constructor_fnc_, DlFcn::dlclose, handle)

    sgc_destructor_fnc_ = (void (*)(void *))DlFcn::dlsym(
        handle, "_ZN3art2gc23ScopedGCCriticalSectionD1Ev");
    KFINISHV_FNC(sgc_destructor_fnc_, DlFcn::dlclose, handle)

    mutator_lock_ptr_ =
        (void **)DlFcn::dlsym(handle, "_ZN3art5Locks13mutator_lock_E");
    KFINISHV_FNC(mutator_lock_ptr_, DlFcn::dlclose, handle)

    exclusive_lock_fnc_ = (void (*)(void *, void *))DlFcn::dlsym(
        handle, "_ZN3art17ReaderWriterMutex13ExclusiveLockEPNS_6ThreadE");
    KFINISHV_FNC(exclusive_lock_fnc_, DlFcn::dlclose, handle)

    exclusive_unlock_fnc_ = (void (*)(void *, void *))DlFcn::dlsym(
        handle, "_ZN3art17ReaderWriterMutex15ExclusiveUnlockEPNS_6ThreadE");
    KFINISHV_FNC(exclusive_unlock_fnc_, DlFcn::dlclose, handle)
  }
  DlFcn::dlclose(handle);
  init_done_ = true;
}

pid_t HprofDump::SuspendAndFork() {
  KCHECKI(init_done_)

  if (android_api_ < __ANDROID_API_R__) {
    suspend_vm_fnc_();
  } else if (android_api_ <= __ANDROID_API_S__) {
    void *self = __get_tls()[TLS_SLOT_ART_THREAD_SELF];
    sgc_constructor_fnc_((void *)sgc_instance_.get(), self, kGcCauseHprof,
                         kCollectorTypeHprof);
    ssa_constructor_fnc_((void *)ssa_instance_.get(), LOG_TAG, true);
    // avoid deadlock with child process
    exclusive_unlock_fnc_(*mutator_lock_ptr_, self);
    sgc_destructor_fnc_((void *)sgc_instance_.get());
  }

  pid_t pid = fork();
  if (pid == 0) {
    // Set timeout for child process
    alarm(60);
    prctl(PR_SET_NAME, "forked-dump-process");
  }
  return pid;
}

bool HprofDump::ResumeAndWait(pid_t pid) {
  KCHECKB(init_done_)

  if (android_api_ < __ANDROID_API_R__) {
    resume_vm_fnc_();
  } else if (android_api_ <= __ANDROID_API_S__) {
    void *self = __get_tls()[TLS_SLOT_ART_THREAD_SELF];
    exclusive_lock_fnc_(*mutator_lock_ptr_, self);
    ssa_destructor_fnc_((void *)ssa_instance_.get());
  }
  int status;
  for (;;) {
    if (waitpid(pid, &status, 0) != -1) {
      if (!WIFEXITED(status)) {
        ALOGE("Child process %d exited with status %d, terminated by signal %d",
              pid, WEXITSTATUS(status), WTERMSIG(status));
        return false;
      }
      return true;
    }
    // if waitpid is interrupted by the signal,just call it again
    if (errno == EINTR){
      continue;
    }
    return false;
  }
}

}  // namespace leak_monitor
}
```

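- HprofDump::Initialize checks the device's Android API level.
- Depending on the API level, it looks up the matching native functions with dlopen and dlsym (through KOOM's kwai::linker::DlFcn wrapper).
- HprofDump::SuspendAndFork: the parent process suspends the JVM, forks a child process, and returns the child's pid.
- The child process generates the hprof file with Debug.dumpHprofData and exits. In HprofDump::ResumeAndWait the parent resumes the JVM and waits for the child with waitpid (retrying on EINTR); it then analyzes the hprof file.

Why suspend the threads before forking?

The VM must suspend all threads before it can dump the heap.

fork() copies only the calling thread into the child; the other threads do not run in the child, so a suspend request issued there would never be acknowledged. The parent therefore suspends all of its threads before forking. In the child those threads are already in the suspended state, so no further suspension is needed and the heap dump can proceed smoothly.

How to call the native thread-suspension functions

Use dlopen and dlsym to look up the corresponding native functions and call them.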

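Trimming HPROF files

Reference: "The two main approaches to trimming HPROF files" – Yorek's Blog

There are two main approaches to trimming:

- Read and trim the file after the dump: for example Shark and WeChat's Matrix.
- Trim the data on the fly during the dump, which requires hooking the write path: for example Meituan's Probe and Kuaishou's KOOM.

Matrix trimming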

```kotlin
object MatrixDump {

    fun doShrinkHprofAndReport(heapDump: HeapDump) {
        val hprofDir = heapDump.hprofFile.parentFile
        val shrinkedHProfFile = File(hprofDir, getShrinkHprofName(heapDump.hprofFile))
        val zipResFile = File(hprofDir, getResultZipName("dump_result_" + Process.myPid()))
        val hprofFile = heapDump.hprofFile
        var zos: ZipOutputStream? = null
        try {
            val startTime = System.currentTimeMillis()
            // Trim the original hprof into shrinkedHProfFile.
            HprofBufferShrinker().shrink(hprofFile, shrinkedHProfFile)
            MatrixLog.i(
                TAG,
                "shrink hprof file %s, size: %dk to %s, size: %dk, use time:%d",
                hprofFile.path,
                hprofFile.length() / 1024,
                shrinkedHProfFile.path,
                shrinkedHProfFile.length() / 1024,
                System.currentTimeMillis() - startTime
            )
            // Pack a result.info metadata entry and the trimmed hprof into one zip file.
            zos = ZipOutputStream(BufferedOutputStream(FileOutputStream(zipResFile)))
            val resultInfoEntry = ZipEntry("result.info")
            val shrinkedHProfEntry = ZipEntry(shrinkedHProfFile.name)
            zos.putNextEntry(resultInfoEntry)
            val pw = PrintWriter(OutputStreamWriter(zos, Charset.forName("UTF-8")))
            pw.println("# Resource Canary Result Infomation. THIS FILE IS IMPORTANT FOR THE ANALYZER !!")
            pw.println("sdkVersion=" + Build.VERSION.SDK_INT)
            // pw.println(
            //     "manufacturer=" + Matrix.with().getPluginByClass<ResourcePlugin>(
            //         ResourcePlugin::class.java
            //     ).config.getManufacture()
            // )
            pw.println("hprofEntry=" + shrinkedHProfEntry.name)
            pw.println("leakedActivityKey=" + heapDump.referenceKey)
            pw.flush()
            zos.closeEntry()
            zos.putNextEntry(shrinkedHProfEntry)
            StreamUtil.copyFileToStream(shrinkedHProfFile, zos)
            zos.closeEntry()
            // shrinkedHProfFile.delete()
            // hprofFile.delete()
            MatrixLog.i(
                TAG, "process hprof file use total time:%d",
                System.currentTimeMillis() - startTime
            )
            // CanaryResultService.reportHprofResult(
            //     app,
            //     zipResFile.absolutePath,
            //     heapDump.activityName
            // )
        } catch (e: IOException) {
            MatrixLog.printErrStackTrace(TAG, e, "")
        } finally {
            StreamUtil.closeQuietly(zos)
        }
    }

    private fun getShrinkHprofName(origHprof: File): String? {
        val origHprofName = origHprof.name
        val extPos = origHprofName.indexOf(".hprof")
        val namePrefix = origHprofName.substring(0, extPos)
        return namePrefix + "_shrink.hprof"
    }

    private fun getResultZipName(prefix: String): String? {
        val sb = StringBuilder()
        sb.append(prefix).append('_')
            .append(SimpleDateFormat("yyyyMMddHHmmss", Locale.ENGLISH).format(Date()))
            .append(".zip")
        return sb.toString()
    }
}
```

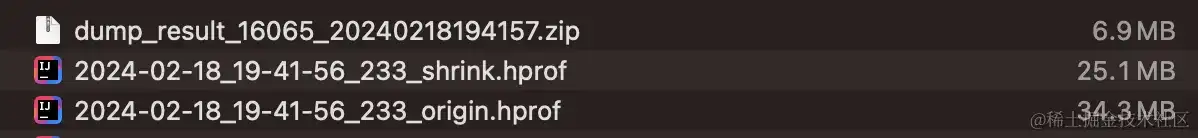

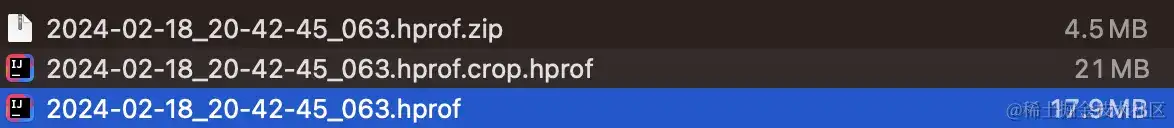

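The files produced by Matrix: the original dump, the stripped hprof file, and its size after zip compression.

The stripped heap dump file:

Approach: read first, then write.

- HprofReader first parses the hprof file. The three visitors HprofInfoCollectVisitor, HprofKeptBufferCollectVisitor and HprofBufferShrinkVisitor then carry out the stripping, and finally HprofWriter writes out the new hprof.

- Matrix strips the PRIMITIVE_ARRAY_DUMP sub-records inside HEAP_DUMP and HEAP_DUMP_SEGMENT. It keeps only string data and a single copy of each duplicated Bitmap buffer; all other primitive arrays are dropped.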
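The idea of keeping only a single copy of duplicated Bitmap buffers can be sketched as content-based deduplication. This is not Matrix's code. `ArrayRecord`, `DedupResult` and `DedupBuffers` are hypothetical, and so is keying on the raw bytes. A real shrinker would more likely key on a hash of each buffer, so it does not have to hold copies in memory.

```cpp
// Hypothetical sketch of buffer deduplication; not Matrix's implementation.
#include <cassert>
#include <cstdint>
#include <string>
#include <unordered_map>
#include <vector>

// A primitive array from the heap dump: its object id and raw element bytes.
struct ArrayRecord {
  uint64_t id;
  std::string bytes;
};

struct DedupResult {
  std::vector<ArrayRecord> kept;                    // one array per distinct content
  std::unordered_map<uint64_t, uint64_t> replaced;  // duplicate id -> kept id
};

// Keeps the first array for each distinct content and maps every duplicate id
// to the id of the kept copy, so references to duplicates can be rewritten.
DedupResult DedupBuffers(const std::vector<ArrayRecord>& arrays) {
  DedupResult result;
  std::unordered_map<std::string, uint64_t> first_id_by_content;
  for (const ArrayRecord& array : arrays) {
    auto [it, inserted] = first_id_by_content.emplace(array.bytes, array.id);
    if (inserted) {
      result.kept.push_back(array);
    } else {
      result.replaced[array.id] = it->second;
    }
  }
  return result;
}
```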

Koom 裁剪

ForkStripHeapDumper:边 dump 边裁剪。

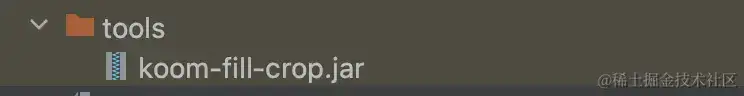

Koom dump出来的文件,需求运用项目下的 jar 包进行复原。

完结思路:hook 了数据的 io 进程,在写入时对数据流进行裁剪。

```java
public class ForkStripHeapDumper implements HeapDumper {
  private static final String TAG = "OOMMonitor_ForkStripHeapDumper";

  private boolean mLoadSuccess;

  private static class Holder {
    private static final ForkStripHeapDumper INSTANCE = new ForkStripHeapDumper();
  }

  public static ForkStripHeapDumper getInstance() {
    return ForkStripHeapDumper.Holder.INSTANCE;
  }

  private ForkStripHeapDumper() {}

  private void init() {
    if (mLoadSuccess) {
      return;
    }
    // Load the native strip library once, then let native code set up the open/write hooks.
    if (loadSoQuietly("koom-strip-dump")) {
      mLoadSuccess = true;
      initStripDump();
    }
  }

  @Override
  public synchronized boolean dump(String path) {
    MonitorLog.i(TAG, "dump " + path);
    if (!sdkVersionMatch()) {
      throw new UnsupportedOperationException("dump failed caused by sdk version not supported!");
    }
    init();
    if (!mLoadSuccess) {
      MonitorLog.e(TAG, "dump failed caused by so not loaded!");
      return false;
    }
    boolean dumpRes = false;
    try {
      // Hand the target path to native code so the open() hook can recognize the file,
      // then reuse the fork-based dumper; stripping happens inside the hooked write().
      hprofName(path);
      dumpRes = ForkJvmHeapDumper.getInstance().dump(path);
      MonitorLog.i(TAG, "dump result " + dumpRes);
    } catch (Exception e) {
      MonitorLog.e(TAG, "dump failed caused by " + e);
      e.printStackTrace();
    }
    return dumpRes;
  }

  public native void initStripDump();

  public native void hprofName(String name);
}
```

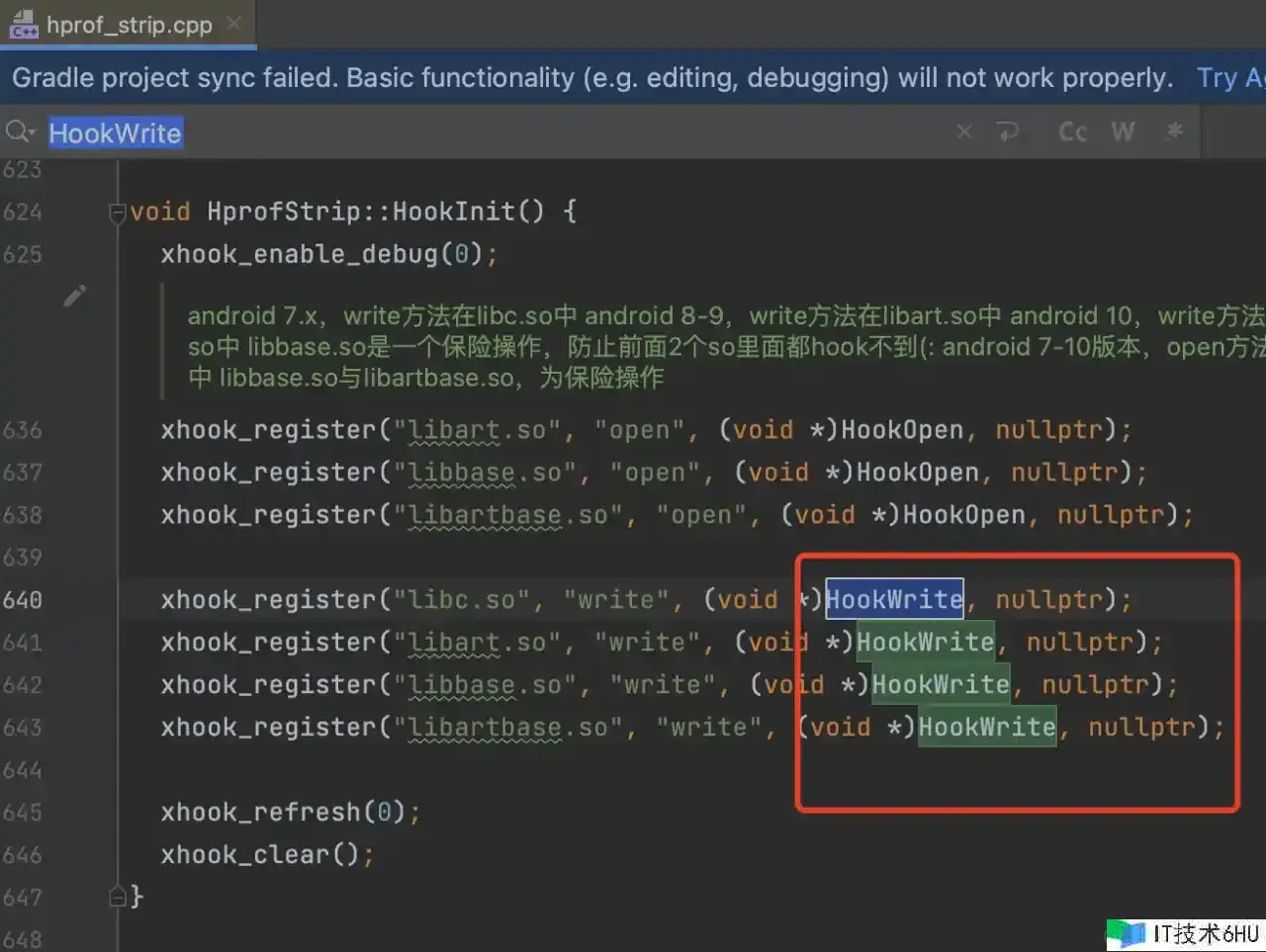

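- The file path is passed in when the dump starts.

- The open hook matches each opened path against that file path and, on a match, records the file's fd.

- The write hook matches each write against that fd, so it intercepts exactly the hprof content being written.

- All stripping logic lives in ProcessHeap. A one-dimensional array records the ranges to strip: even indices hold the start of a range and odd indices its end.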
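Once the even/odd pairs are filled in, producing the bytes that actually reach the file means copying everything outside those ranges. In KOOM this presumably happens in the part of `HookWriteInternal` that is elided below (`//......`). The function below is therefore a hypothetical sketch, not KOOM's implementation. It assumes the pairs are sorted, non-overlapping, half-open `[start, end)` offsets into the buffer, which matches how the end offsets are computed in the code that follows.

```cpp
// Hypothetical sketch: apply recorded strip ranges to a write buffer.
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Copies `buf` while skipping the ranges recorded in `pairs`, laid out as
// [start0, end0, start1, end1, ...]: even slots are range starts, odd slots are
// (exclusive) range ends. Pairs are assumed sorted and non-overlapping.
std::string ApplyStripRanges(const std::string& buf, const std::vector<size_t>& pairs) {
  std::string out;
  out.reserve(buf.size());
  size_t cursor = 0;  // first byte not yet copied
  for (size_t i = 0; i + 1 < pairs.size(); i += 2) {
    size_t start = pairs[i];
    size_t end = pairs[i + 1];
    if (start > cursor) {
      out.append(buf, cursor, start - cursor);  // keep bytes before the range
    }
    if (end > cursor) {
      cursor = end;  // skip the stripped range
    }
  }
  if (cursor < buf.size()) {
    out.append(buf, cursor, std::string::npos);  // keep the tail
  }
  return out;
}
```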

```cpp
// Set the heap dump file name (hprof_name_)
void HprofStrip::SetHprofName(const char *hprof_name) {
  hprof_name_ = hprof_name;
}

// Hook for open(): record the fd of the heap dump file in hprof_fd_
int HprofStrip::HookOpenInternal(const char *path_name, int flags, ...) {
  // open() only receives a third (mode) argument when O_CREAT is set; read it
  // with va_arg instead of forwarding the va_list itself as the mode.
  mode_t mode = 0;
  if (flags & O_CREAT) {
    va_list ap;
    va_start(ap, flags);
    mode = static_cast<mode_t>(va_arg(ap, int));
    va_end(ap);
  }
  int fd = open(path_name, flags, mode);
  if (hprof_name_.empty()) {
    return fd;
  }
  if (path_name != nullptr && strstr(path_name, hprof_name_.c_str())) {
    hprof_fd_ = fd;
    is_hook_success_ = true;
  }
  return fd;
}

// Hook for write(): only intercept writes to hprof_fd_
ssize_t HprofStrip::HookWriteInternal(int fd, const void *buf, ssize_t count) {
  if (fd != hprof_fd_) {
    return write(fd, buf, count);
  }
  // Reset the strip state on every hooked write
  reset();
  // Process the heap records
  const unsigned char tag = ((unsigned char *)buf)[0];
  // Skip unrelated record tags and only match heap-related ones, for performance
  switch (tag) {
    case HPROF_TAG_HEAP_DUMP:
    case HPROF_TAG_HEAP_DUMP_SEGMENT: {
      ProcessHeap(
          buf,
          HEAP_TAG_BYTE_SIZE + RECORD_TIME_BYTE_SIZE + RECORD_LENGTH_BYTE_SIZE,
          count, heap_serial_num_, 0);
      heap_serial_num_++;
    } break;
    default:
      break;
  }
  //......
}
```

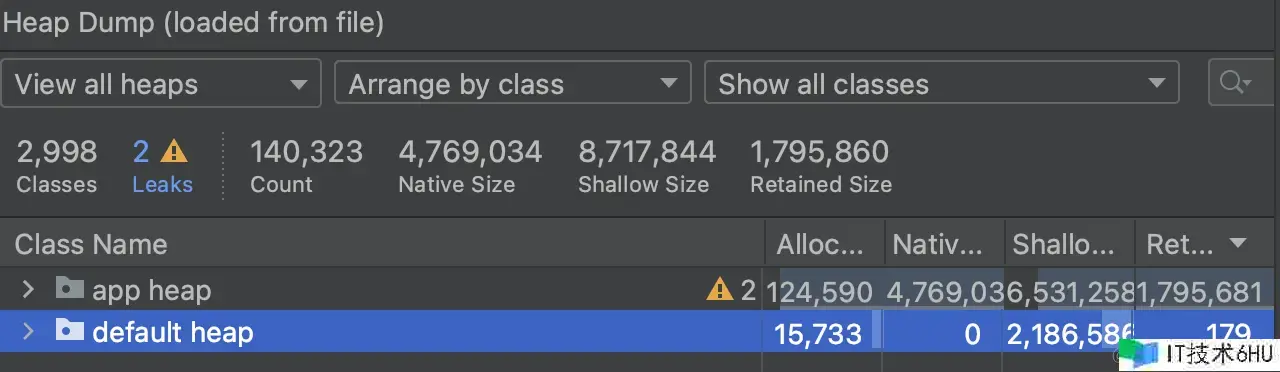

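KOOM strips the system spaces (Zygote, Image) entirely and only partially strips the app heap:

- For system space (Zygote Space, Image Space): the four sub-tags PRIMITIVE_ARRAY_DUMP, HEAP_DUMP_INFO, INSTANCE_DUMP and OBJECT_ARRAY_DUMP are stripped completely, including the sub-tag bytes themselves.

- For app space: only PRIMITIVE_ARRAY_DUMP is processed. The element data of primitive arrays is removed, while each array's type and length are kept so it can be refilled later.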
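To make the system/app distinction concrete, here is a hypothetical sketch of the two decisions the code below makes. The first is reading the heap type from a `HEAP_DUMP_INFO` sub-record. The second is choosing the strip range of a `PRIMITIVE_ARRAY_DUMP` sub-record. The sketch rests on three assumptions: 4-byte object IDs (as on Android), ART's heap-type values ('Z' for Zygote, 'I' for Image), and the standard hprof primitive type codes. `IsSystemHeap`, `PrimitiveArrayStripRange` and the other names are illustrative, not KOOM's API.

```cpp
// Hypothetical sketch of KOOM-style strip decisions; not KOOM's code.
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <utility>

constexpr size_t kTagSize = 1;     // sub-record tag
constexpr size_t kIdSize = 4;      // object id size, assumed 4 bytes as on Android
constexpr size_t kSerialSize = 4;  // stack trace serial number
constexpr size_t kU4 = 4;

// Heap types assumed to be written by ART in HEAP_DUMP_INFO.
constexpr uint8_t kHeapZygote = 'Z';
constexpr uint8_t kHeapImage = 'I';

// Reads a big-endian u4, the byte order used throughout hprof.
uint32_t ReadU4(const uint8_t* buf, size_t index) {
  return (uint32_t{buf[index]} << 24) | (uint32_t{buf[index + 1]} << 16) |
         (uint32_t{buf[index + 2]} << 8) | uint32_t{buf[index + 3]};
}

// HEAP_DUMP_INFO layout: tag, u4 heap type, heap-name string id.
bool IsSystemHeap(const uint8_t* buf, size_t first_index) {
  uint32_t heap_type = ReadU4(buf, first_index + kTagSize);
  return heap_type == kHeapZygote || heap_type == kHeapImage;
}

// Size in bytes of one element of an hprof primitive type code.
size_t ElementSize(uint8_t type) {
  switch (type) {
    case 4: case 8: return 1;   // boolean, byte
    case 5: case 9: return 2;   // char, short
    case 6: case 10: return 4;  // float, int
    case 7: case 11: return 8;  // double, long
    default: return 0;          // unknown type
  }
}

// PRIMITIVE_ARRAY_DUMP layout: tag, array id, stack serial, u4 length,
// u1 element type, elements. Returns the [start, end) range to strip: the whole
// record in a system heap, only the element bytes in the app heap (keeping id,
// length and type so the array can be refilled later).
std::pair<size_t, size_t> PrimitiveArrayStripRange(const uint8_t* buf, size_t first_index,
                                                   bool system_heap) {
  size_t length_index = first_index + kTagSize + kIdSize + kSerialSize;
  uint32_t length = ReadU4(buf, length_index);
  size_t type_index = length_index + kU4;
  size_t data_start = type_index + 1;
  size_t end = data_start + ElementSize(buf[type_index]) * length;
  return {system_heap ? first_index : data_start, end};
}
```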

```cpp
// Android.
case HPROF_HEAP_DUMP_INFO: {
  const unsigned char heap_type =
      ((unsigned char *)buf)[first_index + HEAP_TAG_BYTE_SIZE + 3];
  is_current_system_heap_ =
      (heap_type == HPROF_HEAP_ZYGOTE || heap_type == HPROF_HEAP_IMAGE);
  if (is_current_system_heap_) {
    strip_index_list_pair_[strip_index_ * 2] = first_index;
    strip_index_list_pair_[strip_index_ * 2 + 1] =
        first_index + HEAP_TAG_BYTE_SIZE /*TAG*/
        + HEAP_TYPE_BYTE_SIZE /*heap type*/
        + STRING_ID_BYTE_SIZE /*string id*/;
    strip_index_++;
    strip_bytes_sum_ += HEAP_TAG_BYTE_SIZE /*TAG*/
                        + HEAP_TYPE_BYTE_SIZE /*heap type*/
                        + STRING_ID_BYTE_SIZE /*string id*/;
  }
  array_serial_no = ProcessHeap(buf,
                                first_index + HEAP_TAG_BYTE_SIZE /*TAG*/
                                    + HEAP_TYPE_BYTE_SIZE /*heap type*/
                                    + STRING_ID_BYTE_SIZE /*string id*/,
                                max_len, heap_serial_no, array_serial_no);
} break;
case HPROF_INSTANCE_DUMP: {
  int instance_dump_index =
      first_index + HEAP_TAG_BYTE_SIZE + OBJECT_ID_BYTE_SIZE +
      STACK_TRACE_SERIAL_NUMBER_BYTE_SIZE + CLASS_ID_BYTE_SIZE;
  int instance_size =
      GetIntFromBytes((unsigned char *)buf, instance_dump_index);
  // Strip the whole record in system space
  if (is_current_system_heap_) {
    strip_index_list_pair_[strip_index_ * 2] = first_index;
    strip_index_list_pair_[strip_index_ * 2 + 1] =
        instance_dump_index + U4 /*size field*/ + instance_size;
    strip_index_++;
    strip_bytes_sum_ +=
        instance_dump_index + U4 /*size field*/ + instance_size - first_index;
  }
  array_serial_no =
      ProcessHeap(buf, instance_dump_index + U4 /*size field*/ + instance_size,
                  max_len, heap_serial_no, array_serial_no);
} break;  // without break, control would fall through into the next case
case HPROF_OBJECT_ARRAY_DUMP: {
  int length = GetIntFromBytes((unsigned char *)buf,
                               first_index + HEAP_TAG_BYTE_SIZE +
                                   OBJECT_ID_BYTE_SIZE +
                                   STACK_TRACE_SERIAL_NUMBER_BYTE_SIZE);
  // Strip the whole record in system space
  if (is_current_system_heap_) {
    strip_index_list_pair_[strip_index_ * 2] = first_index;
    strip_index_list_pair_[strip_index_ * 2 + 1] =
        first_index + HEAP_TAG_BYTE_SIZE + OBJECT_ID_BYTE_SIZE +
        STACK_TRACE_SERIAL_NUMBER_BYTE_SIZE + U4 /*Length*/
        + CLASS_ID_BYTE_SIZE + U4 /*Id*/ * length;
    strip_index_++;
    strip_bytes_sum_ += HEAP_TAG_BYTE_SIZE + OBJECT_ID_BYTE_SIZE +
                        STACK_TRACE_SERIAL_NUMBER_BYTE_SIZE + U4 /*Length*/
                        + CLASS_ID_BYTE_SIZE + U4 /*Id*/ * length;
  }
  array_serial_no =
      ProcessHeap(buf,
                  first_index + HEAP_TAG_BYTE_SIZE + OBJECT_ID_BYTE_SIZE +
                      STACK_TRACE_SERIAL_NUMBER_BYTE_SIZE + U4 /*Length*/
                      + CLASS_ID_BYTE_SIZE + U4 /*Id*/ * length,
                  max_len, heap_serial_no, array_serial_no);
} break;
case HPROF_PRIMITIVE_ARRAY_DUMP: {
  int primitive_array_dump_index = first_index + HEAP_TAG_BYTE_SIZE /*tag*/
                                   + OBJECT_ID_BYTE_SIZE +
                                   STACK_TRACE_SERIAL_NUMBER_BYTE_SIZE;
  int length =
      GetIntFromBytes((unsigned char *)buf, primitive_array_dump_index);
  primitive_array_dump_index += U4 /*Length*/;
  // Strip primitive arrays whether or not they are in system space.
  // The difference is the left bound: in app space the array metadata
  // (type, length) is kept so the array can be refilled later.
  if (is_current_system_heap_) {
    strip_index_list_pair_[strip_index_ * 2] = first_index;
  } else {
    strip_index_list_pair_[strip_index_ * 2] =
        primitive_array_dump_index + BASIC_TYPE_BYTE_SIZE /*value type*/;
  }
  array_serial_no++;
  int value_size = GetByteSizeFromType(
      ((unsigned char *)buf)[primitive_array_dump_index]);
  primitive_array_dump_index +=
      BASIC_TYPE_BYTE_SIZE /*value type*/ + value_size * length;
  // Right bound of the array
  strip_index_list_pair_[strip_index_ * 2 + 1] = primitive_array_dump_index;
  // In app space, don't adjust the length: it is restored when the array is refilled
  if (is_current_system_heap_) {
    strip_bytes_sum_ += primitive_array_dump_index - first_index;
  }
  strip_index_++;
  array_serial_no = ProcessHeap(buf, primitive_array_dump_index, max_len,
                                heap_serial_no, array_serial_no);
} break;
```

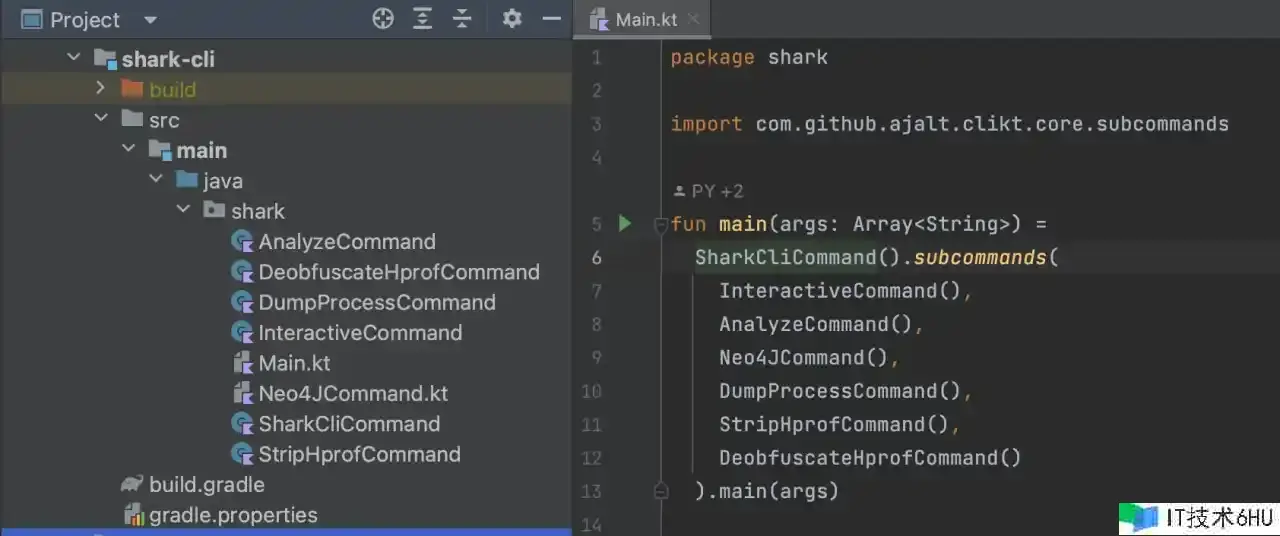

自界说 Hprof 分析脚本完结

例如 LeakCanary、Matrix,都有相关的指令行脚本完结,调用指令去分析 hprof 文件。

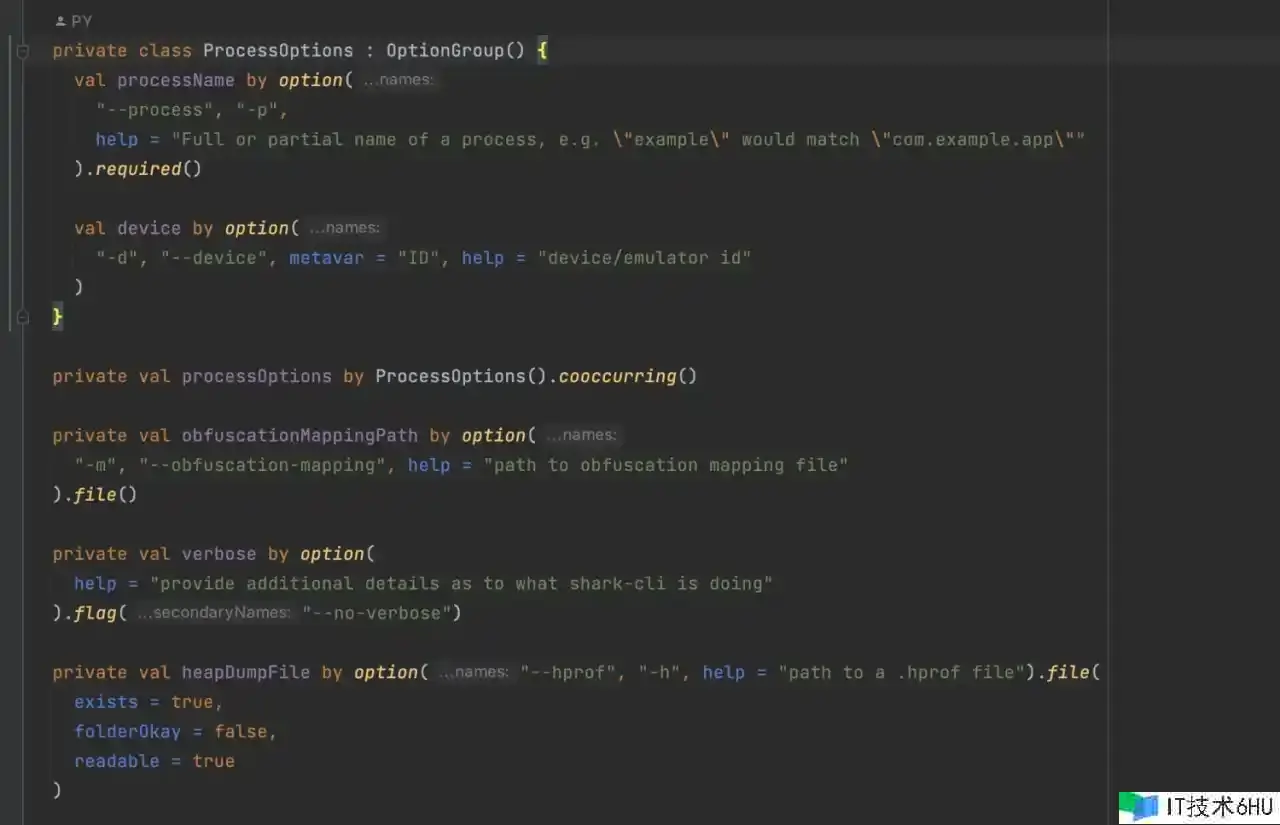

咱们也能够自界说一些分析脚本,能够运用 Clikt 去完结自界说的指令行功用。

这里运用 LeakCanary 项目里边的 shark-cli 代码进行分析。

LeakCanary 底层是基于 shark库,对 hprof 文件进行解析和分析。

主进口:SharkCliCommand,界说指令行所需求的参数。

一系列的子指令,完结相应的功用。

- DumpProcessCommand:经过 adb dump 出 hprof 文件,并 pull 到电脑上。

- AnalyzeCommand:对 hprof 文件进行分析。

例如 AnalyzeCommand,便是调用 shark 库的相关办法,创立 HeapAnalyzer 进行分析 hprof 文件。

```kotlin
import com.github.ajalt.clikt.core.CliktCommand
import java.io.File
import shark.AndroidMetadataExtractor
import shark.AndroidObjectInspectors
import shark.AndroidReferenceMatchers
import shark.FilteringLeakingObjectFinder
import shark.HeapAnalyzer
import shark.OnAnalysisProgressListener
import shark.ProguardMappingReader
import shark.SharkLog
// context.sharkCliParams and retrieveHeapDumpFile() are provided alongside SharkCliCommand in shark-cli.

class AnalyzeCommand : CliktCommand(
    name = "analyze",
    help = "Analyze a heap dump."
) {

    override fun run() {
        val params = context.sharkCliParams
        analyze(retrieveHeapDumpFile(params), params.obfuscationMappingPath)
    }

    companion object {
        fun CliktCommand.analyze(
            heapDumpFile: File,
            proguardMappingFile: File?
        ) {
            // Optional ProGuard/R8 mapping, used to deobfuscate class and field names.
            val proguardMapping = proguardMappingFile?.let {
                ProguardMappingReader(it.inputStream()).readProguardMapping()
            }
            val objectInspectors = AndroidObjectInspectors.appDefaults.toMutableList()
            val listener = OnAnalysisProgressListener { step ->
                SharkLog.d { "Analysis in progress, working on: ${step.name}" }
            }
            val heapAnalyzer = HeapAnalyzer(listener)
            SharkLog.d { "Analyzing heap dump $heapDumpFile" }
            val heapAnalysis = heapAnalyzer.analyze(
                heapDumpFile = heapDumpFile,
                leakingObjectFinder = FilteringLeakingObjectFinder(
                    AndroidObjectInspectors.appLeakingObjectFilters
                ),
                referenceMatchers = AndroidReferenceMatchers.appDefaults,
                computeRetainedHeapSize = true,
                objectInspectors = objectInspectors,
                proguardMapping = proguardMapping,
                metadataExtractor = AndroidMetadataExtractor
            )
            echo(heapAnalysis)
        }
    }
}
```
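A hand-written script has to start from the raw format before any of this. A possible first step (a hypothetical sketch, not part of shark-cli) is to parse the hprof file header, then walk the top-level records and count them by tag. The header is a NUL-terminated version string such as `JAVA PROFILE 1.0.3`, a u4 identifier size, and an 8-byte timestamp. Each top-level record starts with a u1 tag, a u4 time offset and a u4 body length. That 9-byte header is the same `HEAP_TAG_BYTE_SIZE + RECORD_TIME_BYTE_SIZE + RECORD_LENGTH_BYTE_SIZE` offset used in `HookWriteInternal` above. `HprofSummary` and `SummarizeHprof` are illustrative names.

```cpp
// Hypothetical hprof header/record walker; not part of shark or shark-cli.
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <map>
#include <optional>
#include <string>
#include <vector>

struct HprofSummary {
  std::string version;             // e.g. "JAVA PROFILE 1.0.3"
  uint32_t id_size = 0;            // size of object ids in bytes
  std::map<uint8_t, int> records;  // top-level record tag -> count
};

static uint32_t ReadBigEndianU4(const std::vector<uint8_t>& data, size_t pos) {
  return (uint32_t{data[pos]} << 24) | (uint32_t{data[pos + 1]} << 16) |
         (uint32_t{data[pos + 2]} << 8) | uint32_t{data[pos + 3]};
}

// Returns std::nullopt if the header or any record is truncated.
std::optional<HprofSummary> SummarizeHprof(const std::vector<uint8_t>& data) {
  HprofSummary summary;
  size_t pos = 0;
  while (pos < data.size() && data[pos] != 0) {
    summary.version.push_back(static_cast<char>(data[pos++]));
  }
  // NUL terminator + u4 id size + 8-byte timestamp
  if (pos + 1 + 4 + 8 > data.size()) {
    return std::nullopt;
  }
  pos += 1;
  summary.id_size = ReadBigEndianU4(data, pos);
  pos += 4 + 8;  // skip id size and timestamp
  // Each top-level record: u1 tag, u4 time offset, u4 body length, body.
  while (pos < data.size()) {
    if (pos + 9 > data.size()) {
      return std::nullopt;
    }
    uint8_t tag = data[pos];
    uint32_t length = ReadBigEndianU4(data, pos + 5);
    pos += 9;
    if (length > data.size() - pos) {
      return std::nullopt;
    }
    pos += length;
    summary.records[tag]++;
  }
  return summary;
}
```

On a real dump, most of the bytes sit in the HEAP_DUMP_SEGMENT (0x1C) records, which are exactly the ones both stripping approaches target.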

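References

- [Advanced Android] LeakCanary source code analysis, understood from start to finish

- JVM Series (5): Ace the interviewer – the four reference types in Java

- Why do the in-house memory-leak detection frameworks of major companies all borrow from LeakCanary? Because it really is that good!

- The two main schools of hprof stripping – Yorek’s Blog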