Preface

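This chapter centers on ServiceManager. By walking through how it is registered, how it starts, and how clients obtain it, we will get a solid grip on how ServiceManager works.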

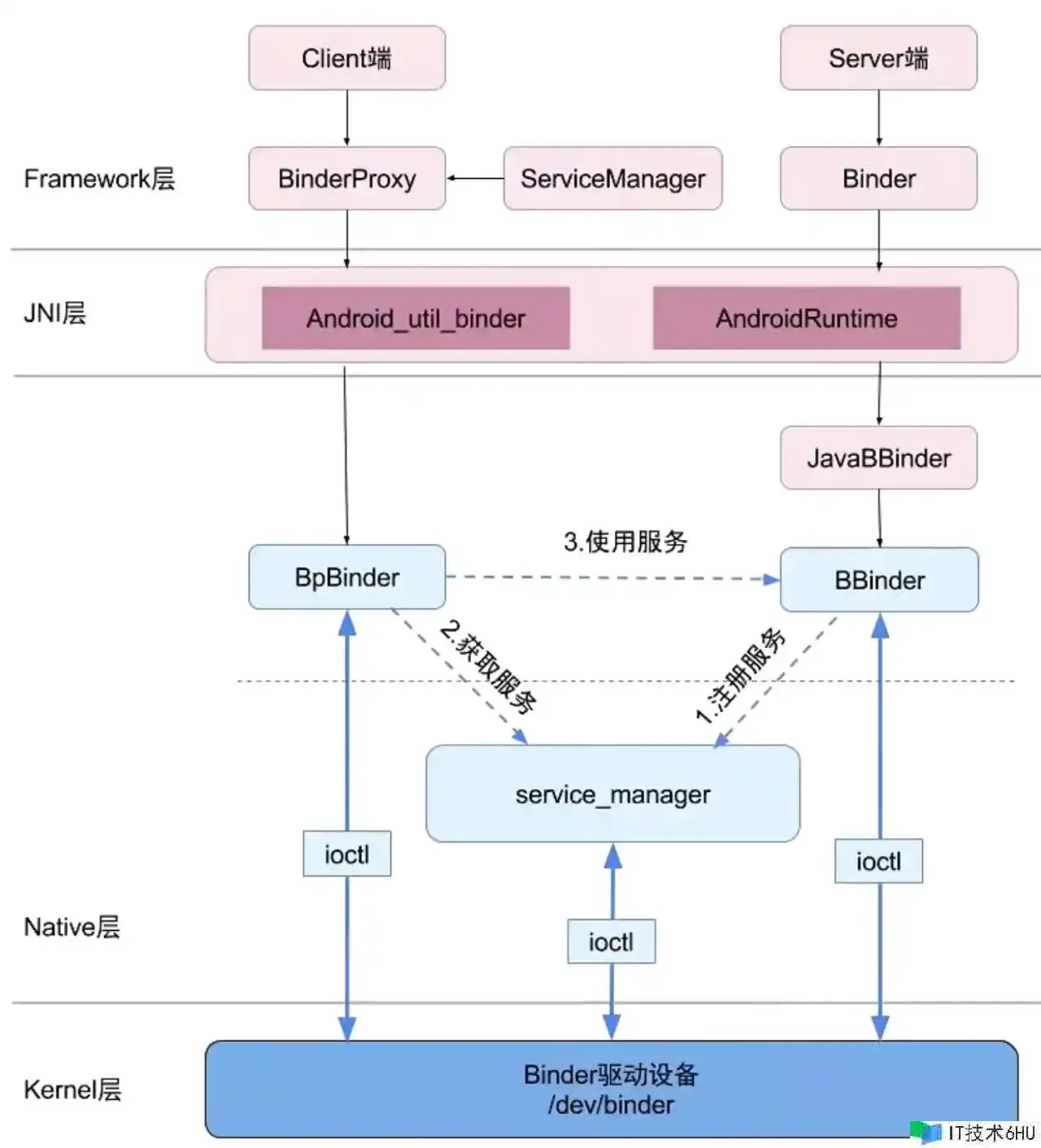

What is ServiceManager?

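A client that wants to call a system service such as AMS or PMS cannot call it directly. All it can get hold of is AMS's IBinder object. When AMS starts, it registers that IBinder with ServiceManager. Once the client has found ServiceManager, ServiceManager can hand the AMS IBinder back to it, and the client can then communicate with AMS through that IBinder.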

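ServiceManager is itself a service, but its handle is fixed: handle = 0. When a client wants a system service, it only needs to address this well-known handle 0 to obtain the ServiceManager proxy. Through that proxy it can then reach the other system services.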
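The "well-known handle 0" idea can be made concrete with a toy, single-process model. This is only a sketch under stated assumptions. ToyServiceManager, addService, getService and kNoSuchService are hypothetical names that mimic the shape of the lookup, and the handle values are made up. In a real system the binder driver assigns every handle except 0 per process, and the registry lives in a separate process reached through the driver.

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <string>

// Hypothetical toy model. Only handle 0 is fixed; every other handle is
// assigned by the binder driver in a real system.
constexpr int32_t kContextManagerHandle = 0;
constexpr int32_t kNoSuchService = -1;  // hypothetical "not found" marker

class ToyServiceManager {
public:
    // A system service (e.g. AMS) registers its binder reference under a name.
    void addService(const std::string& name, int32_t handle) {
        services_[name] = handle;
    }

    // A client that only knows handle 0 asks ServiceManager for another service.
    int32_t getService(const std::string& name) const {
        auto it = services_.find(name);
        return it == services_.end() ? kNoSuchService : it->second;
    }

private:
    std::map<std::string, int32_t> services_;
};
```

In this model a client would first talk to kContextManagerHandle, ask it for a service by name (for example "activity" for AMS), and then use the returned handle for every further call.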

How is the ServiceManager service registered?

So how does ServiceManager get registered? Let's look at the source. It is declared in init.rc:

```
# Starting the servicemanager program invokes its main() function
service servicemanager /system/bin/servicemanager
    class core
    user system
    group system
    critical
    onrestart restart healthd
    onrestart restart zygote
    onrestart restart media
    onrestart restart surfaceflinger
    onrestart restart drm
```

Starting the servicemanager program runs the main function in servicemanager.c. Let's look at main:

```c
int main(int argc, char **argv)
{
    struct binder_state *bs;

    // Does two things: open() opens the binder driver, and mmap() sets up the memory mapping
    bs = binder_open(128*1024);
    if (!bs) {
        ALOGE("failed to open binder driver\n");
        return -1;
    }

    // Make ServiceManager the context manager (the "steward" of all services)
    if (binder_become_context_manager(bs)) {
        ALOGE("cannot become context manager (%s)\n", strerror(errno));
        return -1;
    }

    selinux_enabled = is_selinux_enabled();
    sehandle = selinux_android_service_context_handle();
    selinux_status_open(true);

    if (selinux_enabled > 0) {
        if (sehandle == NULL) {
            ALOGE("SELinux: Failed to acquire sehandle. Aborting.\n");
            abort();
        }
        if (getcon(&service_manager_context) != 0) {
            ALOGE("SELinux: Failed to acquire service_manager context. Aborting.\n");
            abort();
        }
    }

    union selinux_callback cb;
    cb.func_audit = audit_callback;
    selinux_set_callback(SELINUX_CB_AUDIT, cb);
    cb.func_log = selinux_log_callback;
    selinux_set_callback(SELINUX_CB_LOG, cb);

    // Loop forever, waiting for requests from other processes
    binder_loop(bs, svcmgr_handler);

    return 0;
}
```

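binder_open does two things. open() opens the binder driver, and mmap() establishes the memory mapping. The 128*1024 argument (128 KB) is the mapping size, not an argument to open().

binder_become_context_manager makes ServiceManager the context manager, the "steward" of all services.

binder_loop loops, listening for requests from other processes.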

Let's step into binder_become_context_manager to see how it becomes the context manager:

```c
int binder_become_context_manager(struct binder_state *bs)
{
    return ioctl(bs->fd, BINDER_SET_CONTEXT_MGR, 0);
}
```

It simply calls ioctl with BINDER_SET_CONTEXT_MGR, and that call ends up in the driver's binder_ioctl. Let's look at the BINDER_SET_CONTEXT_MGR case there:

```c
case BINDER_SET_CONTEXT_MGR:
    ret = binder_ioctl_set_ctx_mgr(filp);
    if (ret)
        goto err;
    break;
```

This case calls binder_ioctl_set_ctx_mgr. Let's step into it:

```c
static int binder_ioctl_set_ctx_mgr(struct file *filp)
{
    int ret = 0;
    struct binder_proc *proc = filp->private_data;
    struct binder_context *context = proc->context;
    kuid_t curr_euid = current_euid();

    // First check whether a context manager already exists; if so, fail with -EBUSY
    if (context->binder_context_mgr_node) {
        pr_err("BINDER_SET_CONTEXT_MGR already set\n");
        ret = -EBUSY;
        goto out;
    }
    ret = security_binder_set_context_mgr(proc->tsk);
    if (ret < 0)
        goto out;
    // Check whether the recorded SM uid is valid; on the very first call it is not, so we take the else branch
    if (uid_valid(context->binder_context_mgr_uid)) {
        if (!uid_eq(context->binder_context_mgr_uid, curr_euid)) {
            pr_err("BINDER_SET_CONTEXT_MGR bad uid %d != %d\n",
                   from_kuid(&init_user_ns, curr_euid),
                   from_kuid(&init_user_ns,
                             context->binder_context_mgr_uid));
            ret = -EPERM;
            goto out;
        }
    } else {
        // Record the caller's uid
        context->binder_context_mgr_uid = curr_euid;
    }
    // Create SM's node, binder_context_mgr_node, via binder_new_node
    context->binder_context_mgr_node = binder_new_node(proc, 0, 0);
    if (!context->binder_context_mgr_node) {
        ret = -ENOMEM;
        goto out;
    }
    context->binder_context_mgr_node->local_weak_refs++;
    context->binder_context_mgr_node->local_strong_refs++;
    context->binder_context_mgr_node->has_strong_ref = 1;
    context->binder_context_mgr_node->has_weak_ref = 1;
out:
    return ret;
}
```

At this point, ServiceManager has become the context manager.
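The rules enforced by binder_ioctl_set_ctx_mgr can be replayed in user space. This is a hypothetical model, not kernel code. ToyBinderContext and toy_set_ctx_mgr are invented names, and kInvalidUid stands in for the kernel's INVALID_UID. The SELinux hook security_binder_set_context_mgr is left out, and setting has_mgr_node replaces binder_new_node(proc, 0, 0).

```cpp
#include <cassert>
#include <cerrno>

// Hypothetical stand-in for the kernel's INVALID_UID.
constexpr long kInvalidUid = -1;

// Models the two fields of struct binder_context that the ioctl touches.
struct ToyBinderContext {
    bool has_mgr_node = false;   // context->binder_context_mgr_node != NULL
    long mgr_uid = kInvalidUid;  // context->binder_context_mgr_uid
};

// Returns 0 on success or a negative errno, like binder_ioctl_set_ctx_mgr.
// The SELinux check (security_binder_set_context_mgr) is deliberately omitted.
int toy_set_ctx_mgr(ToyBinderContext& ctx, long caller_euid) {
    if (ctx.has_mgr_node) {
        return -EBUSY;                   // a context manager already exists
    }
    if (ctx.mgr_uid != kInvalidUid) {
        if (ctx.mgr_uid != caller_euid) {
            return -EPERM;               // the uid is pinned to someone else
        }
    } else {
        ctx.mgr_uid = caller_euid;       // first caller: record its euid
    }
    ctx.has_mgr_node = true;             // stands in for binder_new_node(proc, 0, 0)
    return 0;
}
```

The model shows why there can only be one context manager. While the node exists, a second call fails with -EBUSY. Once a uid has been recorded, a caller with a different euid gets -EPERM.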

Next, let's look at binder_loop:

```c
void binder_loop(struct binder_state *bs, binder_handler func)
{
    int res;
    // Holds the write and read sizes, consumed counts, and buffer pointers
    struct binder_write_read bwr;
    uint32_t readbuf[32];

    bwr.write_size = 0;
    bwr.write_consumed = 0;
    bwr.write_buffer = 0;

    // BC_ENTER_LOOPER is the command announcing that this thread is entering its loop
    readbuf[0] = BC_ENTER_LOOPER;
    // binder_write hands the buffer to the driver; internally it calls ioctl,
    // which ends up in binder_ioctl_write_read
    binder_write(bs, readbuf, sizeof(uint32_t));

    for (;;) {
        bwr.read_size = sizeof(readbuf);
        bwr.read_consumed = 0;
        bwr.read_buffer = (uintptr_t) readbuf;

        res = ioctl(bs->fd, BINDER_WRITE_READ, &bwr);
        if (res < 0) {
            ALOGE("binder_loop: ioctl failed (%s)\n", strerror(errno));
            break;
        }

        res = binder_parse(bs, 0, (uintptr_t) readbuf, bwr.read_consumed, func);
        if (res == 0) {
            ALOGE("binder_loop: unexpected reply?!\n");
            break;
        }
        if (res < 0) {
            ALOGE("binder_loop: io error %d %s\n", res, strerror(errno));
            break;
        }
    }
}
```

binder_write hands the buffer to the driver. It calls ioctl internally, and that call ends up in the kernel's binder_ioctl_write_read. Let's step into that function:

```c
static int binder_ioctl_write_read(struct file *filp,
                                   unsigned int cmd, unsigned long arg,
                                   struct binder_thread *thread)
{
    int ret = 0;
    struct binder_proc *proc = filp->private_data;
    unsigned int size = _IOC_SIZE(cmd);
    void __user *ubuf = (void __user *)arg;
    struct binder_write_read bwr;

    if (size != sizeof(struct binder_write_read)) {
        ret = -EINVAL;
        goto out;
    }
    if (copy_from_user(&bwr, ubuf, sizeof(bwr))) {
        ret = -EFAULT;
        goto out;
    }
    binder_debug(BINDER_DEBUG_READ_WRITE,
                 "%d:%d write %lld at %016llx, read %lld at %016llx\n",
                 proc->pid, thread->pid,
                 (u64)bwr.write_size, (u64)bwr.write_buffer,
                 (u64)bwr.read_size, (u64)bwr.read_buffer);

    // Two independent ifs: run the write path, then the read path, as requested
    if (bwr.write_size > 0) {
        ret = binder_thread_write(proc, thread,
                                  bwr.write_buffer,
                                  bwr.write_size,
                                  &bwr.write_consumed);
        trace_binder_write_done(ret);
        if (ret < 0) {
            bwr.read_consumed = 0;
            if (copy_to_user(ubuf, &bwr, sizeof(bwr)))
                ret = -EFAULT;
            goto out;
        }
    }
    if (bwr.read_size > 0) {
        ret = binder_thread_read(proc, thread, bwr.read_buffer,
                                 bwr.read_size,
                                 &bwr.read_consumed,
                                 filp->f_flags & O_NONBLOCK);
        trace_binder_read_done(ret);
        if (!list_empty(&proc->todo))
            wake_up_interruptible(&proc->wait);
        if (ret < 0) {
            if (copy_to_user(ubuf, &bwr, sizeof(bwr)))
                ret = -EFAULT;
            goto out;
        }
    }
    binder_debug(BINDER_DEBUG_READ_WRITE,
                 "%d:%d wrote %lld of %lld, read return %lld of %lld\n",
                 proc->pid, thread->pid,
                 (u64)bwr.write_consumed, (u64)bwr.write_size,
                 (u64)bwr.read_consumed, (u64)bwr.read_size);
    if (copy_to_user(ubuf, &bwr, sizeof(bwr))) {
        ret = -EFAULT;
        goto out;
    }
out:
    return ret;
}
```
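binder_ioctl_write_read handles the write half first and the read half second, and each runs only when its size is non-zero. That dispatch order can be modelled in a few lines. This is a hypothetical sketch. ToyWriteRead and toy_ioctl_write_read are invented names, no real commands are parsed, and the early return when the write half fails is left out.

```cpp
#include <cassert>
#include <cstddef>
#include <string>

// Hypothetical mirror of the size/consumed fields of struct binder_write_read.
struct ToyWriteRead {
    std::size_t write_size = 0;
    std::size_t write_consumed = 0;
    std::size_t read_size = 0;
    std::size_t read_consumed = 0;
};

// Returns a trace of which halves ran, in order: "W" = write half, "R" = read half.
std::string toy_ioctl_write_read(ToyWriteRead& bwr) {
    std::string trace;
    if (bwr.write_size > 0) {                 // like binder_thread_write(...)
        bwr.write_consumed = bwr.write_size;  // pretend every command was consumed
        trace += "W";
    }
    if (bwr.read_size > 0) {                  // like binder_thread_read(...), which may block
        bwr.read_consumed = 0;                // nothing is delivered in this toy
        trace += "R";
    }
    return trace;
}
```

The first two cases in the test correspond to the two kinds of ioctl ServiceManager issues. The first is binder_write's BC_ENTER_LOOPER, which is write only: one uint32_t, with read_size left at 0 as in the servicemanager source. The second is each iteration of binder_loop, which is read only with a 128-byte readbuf.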

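If bwr.write_size > 0 it runs the write path, and if bwr.read_size > 0 it runs the read path. Let's follow the write path, binder_thread_write, first. The command it processes here is the BC_ENTER_LOOPER we saw earlier, so let's jump straight to that case: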

```c
case BC_ENTER_LOOPER:
    binder_debug(BINDER_DEBUG_THREADS,
                 "%d:%d BC_ENTER_LOOPER\n",
                 proc->pid, thread->pid);
    if (thread->looper & BINDER_LOOPER_STATE_REGISTERED) {
        thread->looper |= BINDER_LOOPER_STATE_INVALID;
        binder_user_error("%d:%d ERROR: BC_ENTER_LOOPER called after BC_REGISTER_LOOPER\n",
                          proc->pid, thread->pid);
    }
    // All this really does is set a state flag on the current thread's looper
    thread->looper |= BINDER_LOOPER_STATE_ENTERED;
    break;
```

In short, it does just one thing: it sets a state flag on the current thread's looper.
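The looper field is a plain bit set, so the BC_ENTER_LOOPER case and the later check in binder_thread_read reduce to bitwise OR and AND. In this sketch the flag names are shortened, and the numeric values are illustrative assumptions rather than a copy of the kernel's enum. toy_enter_looper and toy_may_wait_for_proc_work are hypothetical helpers.

```cpp
#include <cassert>
#include <cstdint>

// Illustrative flag values (an assumption, not copied from the kernel).
enum : uint32_t {
    kLooperRegistered = 0x01,  // like BINDER_LOOPER_STATE_REGISTERED
    kLooperEntered    = 0x02,  // like BINDER_LOOPER_STATE_ENTERED
    kLooperInvalid    = 0x08,  // like BINDER_LOOPER_STATE_INVALID
};

// Mirrors the BC_ENTER_LOOPER case: mark misuse as invalid, then set ENTERED.
uint32_t toy_enter_looper(uint32_t looper) {
    if (looper & kLooperRegistered) {
        looper |= kLooperInvalid;  // the kernel also reports a binder_user_error here
    }
    return looper | kLooperEntered;
}

// Mirrors the check binder_thread_read makes before waiting for process work.
bool toy_may_wait_for_proc_work(uint32_t looper) {
    return (looper & (kLooperRegistered | kLooperEntered)) != 0;
}
```

The second helper explains why BC_ENTER_LOOPER has to come first. A thread with neither flag set that waits for process work trips the "Thread waiting for process work before calling BC_REGISTER_LOOPER or BC_ENTER_LOOPER" error in binder_thread_read.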

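Back in binder_loop, next comes the for(;;) infinite loop. It again calls res = ioctl(bs->fd, BINDER_WRITE_READ, &bwr); with the same BINDER_WRITE_READ command. This time, though, write_size is 0 and read_size is sizeof(readbuf), so only the read path runs and we end up in binder_thread_read. With no work queued yet, that function calls ret = wait_event_freezable_exclusive(proc->wait, binder_has_proc_work(proc, thread)); and the thread goes to sleep: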

```c
if (wait_for_proc_work) {
    if (!(thread->looper & (BINDER_LOOPER_STATE_REGISTERED |
                            BINDER_LOOPER_STATE_ENTERED))) {
        binder_user_error("%d:%d ERROR: Thread waiting for process work before calling BC_REGISTER_LOOPER or BC_ENTER_LOOPER (state %x)\n",
                          proc->pid, thread->pid, thread->looper);
        wait_event_interruptible(binder_user_error_wait,
                                 binder_stop_on_user_error < 2);
    }
    binder_set_nice(proc->default_priority);
    if (non_block) {
        if (!binder_has_proc_work(proc, thread))
            ret = -EAGAIN;
    } else {
        // ServiceManager's fd is not O_NONBLOCK, so the read blocks here until work arrives
        ret = wait_event_freezable_exclusive(proc->wait, binder_has_proc_work(proc, thread));
    }
}
```
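wait_event_freezable_exclusive(proc->wait, binder_has_proc_work(...)) sleeps until the condition becomes true. Because the wait is exclusive, a wake-up releases only one such waiter. A rough user-space analogue is a condition variable waited on with a predicate. This is a hypothetical sketch. ToyProcWork stands in for proc->todo plus proc->wait, notify_one plays the role of an exclusive wake-up, and freezer interaction is ignored.

```cpp
#include <cassert>
#include <cerrno>
#include <condition_variable>
#include <deque>
#include <mutex>

// Hypothetical analogue of proc->todo (the queue) plus proc->wait (the wait queue).
class ToyProcWork {
public:
    void post(int work) {
        {
            std::lock_guard<std::mutex> lock(mutex_);
            todo_.push_back(work);
        }
        cv_.notify_one();  // wake a single waiter, like an exclusive wait
    }

    // Returns 0 and stores one work item in *out, or -EAGAIN if non_block
    // is set and nothing is queued (the O_NONBLOCK branch).
    int take(bool non_block, int* out) {
        std::unique_lock<std::mutex> lock(mutex_);
        if (non_block && todo_.empty()) {
            return -EAGAIN;
        }
        // Blocking branch: sleep until the predicate (like binder_has_proc_work) holds.
        cv_.wait(lock, [this] { return !todo_.empty(); });
        *out = todo_.front();
        todo_.pop_front();
        return 0;
    }

private:
    std::mutex mutex_;
    std::condition_variable cv_;
    std::deque<int> todo_;
};
```

In a real program, another thread calling post() would unblock a thread parked in take(false, ...). The kernel case is analogous: a transaction arriving for ServiceManager wakes the thread sleeping inside binder_loop.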

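Registering SM is fairly simple and takes just three steps: first binder_open, second binder_become_context_manager (becoming the context manager), and third binder_loop.

Together these are steps 1, 2 and 3 in the figure above.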

How is the ServiceManager service obtained?

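Next, let's see how SM is obtained. Two situations typically need it: registering a service with SM, and looking up a service through SM. Both require getting hold of SM first. On the native side, SM is normally obtained in IServiceManager.cpp, so let's open that file: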

```cpp
sp<IServiceManager> defaultServiceManager()
{
    if (gDefaultServiceManager != NULL) return gDefaultServiceManager;

    {
        AutoMutex _l(gDefaultServiceManagerLock);
        while (gDefaultServiceManager == NULL) {
            gDefaultServiceManager = interface_cast<IServiceManager>(
                ProcessState::self()->getContextObject(NULL));
            if (gDefaultServiceManager == NULL)
                sleep(1);
        }
    }

    return gDefaultServiceManager;
}
```

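All native code obtains SM from here. The function is a singleton. If SM is not cached yet, a while loop keeps trying to obtain it and sleeps for one second after each failed attempt. The core of the lookup is this line: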

```cpp
gDefaultServiceManager = interface_cast<IServiceManager>(
    ProcessState::self()->getContextObject(NULL));
```
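The pattern in defaultServiceManager() is a lazily initialised singleton that retries until ServiceManager is reachable. Below is a portable C++ sketch under stated assumptions. ToyDefaultSm and ToyServiceManagerProxy are hypothetical. The lookup is injected as a function so the retry can be exercised, and the delay is configurable instead of a fixed sleep(1).

```cpp
#include <cassert>
#include <chrono>
#include <functional>
#include <memory>
#include <mutex>
#include <thread>
#include <utility>

// Hypothetical stand-in for the BpServiceManager returned by interface_cast.
struct ToyServiceManagerProxy {};

// Sketch of defaultServiceManager(): cache the proxy once found, and retry
// (sleeping between attempts) while ServiceManager is not reachable yet.
class ToyDefaultSm {
public:
    using Lookup = std::function<std::shared_ptr<ToyServiceManagerProxy>()>;

    ToyDefaultSm(Lookup lookup, std::chrono::milliseconds retryDelay)
        : lookup_(std::move(lookup)), retryDelay_(retryDelay) {}

    std::shared_ptr<ToyServiceManagerProxy> get() {
        std::lock_guard<std::mutex> lock(mutex_);  // like AutoMutex _l(...)
        while (!cached_) {
            cached_ = lookup_();  // like interface_cast(getContextObject(NULL))
            if (!cached_) {
                std::this_thread::sleep_for(retryDelay_);  // like sleep(1)
            }
        }
        return cached_;
    }

private:
    std::mutex mutex_;
    Lookup lookup_;
    std::chrono::milliseconds retryDelay_;
    std::shared_ptr<ToyServiceManagerProxy> cached_;
};
```

Unlike the original, this sketch takes the lock on every call. The original reads gDefaultServiceManager without the lock on its fast path. In standard C++, that unlocked read racing a write is a data race, and doing the fast path safely would need an atomic or std::call_once, which would lengthen the sketch.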

self()

Let's take this apart step by step, starting with what the argument ProcessState::self()->getContextObject(NULL) does.

First, the self method:

```cpp
sp<ProcessState> ProcessState::self()
{
    Mutex::Autolock _l(gProcessMutex);
    if (gProcess != NULL) {
        return gProcess;
    }
    gProcess = new ProcessState;
    return gProcess;
}
```

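Very simple. On the first call it does new ProcessState, and afterwards it returns the cached instance, so there is one ProcessState per process. What happens when ProcessState is constructed? Let's look at the constructor: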

```cpp
ProcessState::ProcessState()
    : mDriverFD(open_driver())
    , mVMStart(MAP_FAILED)
    , mThreadCountLock(PTHREAD_MUTEX_INITIALIZER)
    , mThreadCountDecrement(PTHREAD_COND_INITIALIZER)
    , mExecutingThreadsCount(0)
    , mMaxThreads(DEFAULT_MAX_BINDER_THREADS)
    , mManagesContexts(false)
    , mBinderContextCheckFunc(NULL)
    , mBinderContextUserData(NULL)
    , mThreadPoolStarted(false)
{
    if (mDriverFD >= 0) {
        // XXX Ideally, there should be a specific define for whether we
        // have mmap (or whether we could possibly have the kernel module
        // available).
#if !defined(HAVE_WIN32_IPC)
        // mmap the binder, providing a chunk of virtual address space to receive transactions.
        mVMStart = mmap(0, BINDER_VM_SIZE, PROT_READ, MAP_PRIVATE | MAP_NORESERVE, mDriverFD, 0);
        if (mVMStart == MAP_FAILED) {
            // *sigh*
            ALOGE("Using /dev/binder failed: unable to mmap transaction memory.\n");
            close(mDriverFD);
            mDriverFD = -1;
        }
#else
        mDriverFD = -1;
#endif
    }

    LOG_ALWAYS_FATAL_IF(mDriverFD < 0, "Binder driver could not be opened. Terminating.");
}
```

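Step one: open_driver() opens the binder device (driver) with open(), checks that the driver's protocol version matches, and sets the maximum number of binder threads with BINDER_SET_MAX_THREADS (maxThreads):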

```cpp
static int open_driver()
{
    int fd = open("/dev/binder", O_RDWR);
    if (fd >= 0) {
        fcntl(fd, F_SETFD, FD_CLOEXEC);
        int vers = 0;
        status_t result = ioctl(fd, BINDER_VERSION, &vers);
        if (result == -1) {
            ALOGE("Binder ioctl to obtain version failed: %s", strerror(errno));
            close(fd);
            fd = -1;
        }
        if (result != 0 || vers != BINDER_CURRENT_PROTOCOL_VERSION) {
            ALOGE("Binder driver protocol does not match user space protocol!");
            close(fd);
            fd = -1;
        }
        size_t maxThreads = DEFAULT_MAX_BINDER_THREADS;
        result = ioctl(fd, BINDER_SET_MAX_THREADS, &maxThreads);
        if (result == -1) {
            ALOGE("Binder ioctl to set max threads failed: %s", strerror(errno));
        }
    } else {
        ALOGW("Opening '/dev/binder' failed: %s\n", strerror(errno));
    }
    return fd;
}
```

Now back to the rest of the constructor:

```cpp
mVMStart = mmap(0, BINDER_VM_SIZE, PROT_READ, MAP_PRIVATE | MAP_NORESERVE, mDriverFD, 0);
```

mmap maps the shared memory, 1M-8K in size (the size used by an ordinary service process). The constant BINDER_VM_SIZE behind it is defined as #define BINDER_VM_SIZE ((1 * 1024 * 1024) - (4096 * 2));

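So self(), through the ProcessState constructor, does three things:

- opens the driver, via open_driver();

- sets the maximum number of binder threads to 15 (DEFAULT_MAX_BINDER_THREADS);

- mmaps the shared memory, 1M-8K in size.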
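The 1M-8K figure is plain arithmetic on the BINDER_VM_SIZE expression: one MiB minus two 4 KiB pages. A compile-time check confirms the number. kBinderVmSize and kServiceManagerMapSize are constant names chosen for this sketch. The second constant records, for comparison, the 128*1024 bytes that ServiceManager itself passes to binder_open in its main function.

```cpp
#include <cassert>
#include <cstddef>

// Same expression as the BINDER_VM_SIZE macro, evaluated at compile time.
constexpr std::size_t kBinderVmSize = (1 * 1024 * 1024) - (4096 * 2);

// ServiceManager's own mapping size, as passed to binder_open() in main().
constexpr std::size_t kServiceManagerMapSize = 128 * 1024;

static_assert(kBinderVmSize == 1040384, "1 MiB minus two 4 KiB pages");
static_assert(kServiceManagerMapSize == 131072, "128 KiB");
```

1048576 - 8192 = 1040384 bytes for an ordinary process, versus 131072 bytes for ServiceManager.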

getContextObject(NULL)

Next, what does getContextObject(NULL) do? Its body is a single call:

```cpp
sp<IBinder> ProcessState::getContextObject(const sp<IBinder>& /*caller*/)
{
    return getStrongProxyForHandle(0);
}
```

It simply calls getStrongProxyForHandle(0), passing handle 0. Let's see what happens inside:

```cpp
sp<IBinder> ProcessState::getStrongProxyForHandle(int32_t handle)
{
    sp<IBinder> result;

    AutoMutex _l(mLock);

    // Look up (or create) the handle_entry for this handle
    handle_entry* e = lookupHandleLocked(handle);

    if (e != NULL) {
        IBinder* b = e->binder;
        if (b == NULL || !e->refs->attemptIncWeak(this)) {
            if (handle == 0) {
                Parcel data;
                // Ping the context manager to check that it is ready
                status_t status = IPCThreadState::self()->transact(
                        0, IBinder::PING_TRANSACTION, data, NULL, 0);
                if (status == DEAD_OBJECT)
                    return NULL;
            }
            // Create a BpBinder for this handle and return it
            b = new BpBinder(handle);
            e->binder = b;
            if (b) e->refs = b->getWeakRefs();
            result = b;
        } else {
            result.force_set(b);
            e->refs->decWeak(this);
        }
    }

    return result;
}
```
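getStrongProxyForHandle caches proxies. It looks up the entry for a handle, reuses the proxy if it is still alive, and otherwise creates a new BpBinder and remembers it. That behaviour maps naturally onto std::weak_ptr. This sketch is an analogy, not the libbinder code. ToyBpBinder and ToyHandleTable are hypothetical, weak_ptr::lock() plays the role of attemptIncWeak(), and the PING_TRANSACTION check for handle 0 is left out.

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <memory>
#include <mutex>

// Hypothetical stand-in for BpBinder: it only remembers its handle.
struct ToyBpBinder {
    explicit ToyBpBinder(int32_t h) : handle(h) {}
    int32_t handle;
};

// Sketch of the handle -> proxy cache behind getStrongProxyForHandle().
class ToyHandleTable {
public:
    std::shared_ptr<ToyBpBinder> getStrongProxyForHandle(int32_t handle) {
        std::lock_guard<std::mutex> lock(mutex_);              // like AutoMutex _l(mLock)
        std::weak_ptr<ToyBpBinder>& slot = entries_[handle];   // like lookupHandleLocked()
        if (auto existing = slot.lock()) {                     // like attemptIncWeak() succeeding
            return existing;
        }
        auto created = std::make_shared<ToyBpBinder>(handle);  // like new BpBinder(handle)
        slot = created;
        return created;
    }

private:
    std::mutex mutex_;
    std::map<int32_t, std::weak_ptr<ToyBpBinder>> entries_;
};
```

Because the table holds only weak references, two callers asking for handle 0 share one proxy. The proxy can still be freed once nobody uses it, and the next lookup then builds a new one.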

In essence this method does one thing: it creates a BpBinder for the handle, or reuses a live one. Let's see what happens when the BpBinder is constructed:

BpBinder

```cpp
BpBinder::BpBinder(int32_t handle)
    : mHandle(handle)
    , mAlive(1)
    , mObitsSent(0)
    , mObituaries(NULL)
{
    ALOGV("Creating BpBinder %p handle %d\n", this, mHandle);

    extendObjectLifetime(OBJECT_LIFETIME_WEAK);
    // Mainly: call incWeakHandle to take a weak reference on this handle
    IPCThreadState::self()->incWeakHandle(handle);
}
```

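So ProcessState::self()->getContextObject(NULL) essentially returns a BpBinder object. This BpBinder is the proxy pointing at the server side; here we can think of it as ServiceManager.

BpBinder is the client-side proxy for the server-side BBinder. Conceptually, both stand for the same service object.

When the Java layer communicates with the native layer, it needs a BinderProxy and a ServiceManagerProxy. ServiceManagerProxy holds a BinderProxy, and that BinderProxy points to a BpBinder. In other words, the Java layer must reach this BpBinder to communicate. Java code operates on ServiceManagerProxy, which operates on its remote (the BinderProxy). That BinderProxy lives in the Java layer and has to point to a native object in order to communicate. That native object is the BpBinder, which is the proxy.

The native layer also needs the BpBinder, but it obtains it differently. It operates on BpServiceManager, which drives the BpBinder directly, so one step is skipped. Either way, both the Java and native layers end up at the BpBinder, and the BpBinder performs transact to communicate.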
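The two call paths described above differ only in how many wrappers sit in front of the BpBinder. The sketch below models that delegation with hypothetical Toy* types and a made-up call(code) method. It ignores JNI, Parcels and the driver, and only counts how many calls reach the shared BpBinder stand-in.

```cpp
#include <cassert>
#include <cstdint>
#include <memory>

// Hypothetical stand-in for the native BpBinder: the only place a call would
// actually be handed to the driver. Here it just counts calls.
struct ToyBpBinder {
    int32_t handle = 0;
    int transacts = 0;
    int transact(uint32_t code) {
        ++transacts;
        return static_cast<int>(code);
    }
};

// Native path: BpServiceManager -> BpBinder (one hop).
struct ToyBpServiceManager {
    std::shared_ptr<ToyBpBinder> remote;
    int call(uint32_t code) { return remote->transact(code); }
};

// Java path, modelled in C++: ServiceManagerProxy -> BinderProxy -> BpBinder.
struct ToyBinderProxy {
    std::shared_ptr<ToyBpBinder> nativeBinder;  // stands in for the JNI link
    int transact(uint32_t code) { return nativeBinder->transact(code); }
};

struct ToyServiceManagerProxy {
    ToyBinderProxy mRemote;
    int call(uint32_t code) { return mRemote.transact(code); }
};
```

Both wrappers share one ToyBpBinder, so a call made through either path shows up in the same counter. That mirrors the point above that Java and native callers converge on the same BpBinder.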

interface_cast

Next, let's look at interface_cast. The argument passed to it is the BpBinder:

```cpp
template<typename INTERFACE>
inline sp<INTERFACE> interface_cast(const sp<IBinder>& obj)
{
    return INTERFACE::asInterface(obj);
}
```

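It calls INTERFACE::asInterface(obj). This is a template, so with INTERFACE = IServiceManager the call is IServiceManager::asInterface(obj). You won't find that method written out in IServiceManager's source, though, because it is generated by a macro. Let's look at that macro: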

```cpp
#define DECLARE_META_INTERFACE(INTERFACE)                               \
    static const android::String16 descriptor;                          \
    static android::sp<I##INTERFACE> asInterface(                       \
            const android::sp<android::IBinder>& obj);                  \
    virtual const android::String16& getInterfaceDescriptor() const;    \
    I##INTERFACE();                                                     \
    virtual ~I##INTERFACE();
```

The preprocessor substitutes the macro. With INTERFACE = ServiceManager, the resulting C++ is:

```cpp
static const android::String16 descriptor;
static android::sp<IServiceManager> asInterface(
        const android::sp<android::IBinder>& obj);
virtual const android::String16& getInterfaceDescriptor() const;
IServiceManager();
virtual ~IServiceManager();
```

This part only declares asInterface() and getInterfaceDescriptor(), plus the descriptor, constructor and destructor, so we don't need to dwell on it.
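The ## operator in these macros pastes the prefix I onto the macro argument, which is how one macro produces names like IServiceManager. Here is a self-contained miniature under stated assumptions. TOY_DECLARE_META_INTERFACE, TOY_IMPLEMENT_META_INTERFACE and IFoo are hypothetical, std::string replaces android::String16, and asInterface is omitted.

```cpp
#include <cassert>
#include <string>

// Hypothetical miniature of DECLARE_META_INTERFACE: ## pastes "I" onto the
// argument, so TOY_DECLARE_META_INTERFACE(Foo) declares members of IFoo.
#define TOY_DECLARE_META_INTERFACE(INTERFACE)                         \
    static const std::string descriptor;                              \
    const std::string& getInterfaceDescriptor() const;                \
    I##INTERFACE();

// Hypothetical miniature of IMPLEMENT_META_INTERFACE: defines what the
// declaration above promised, again building the class name with ##.
#define TOY_IMPLEMENT_META_INTERFACE(INTERFACE, NAME)                 \
    const std::string I##INTERFACE::descriptor(NAME);                 \
    const std::string& I##INTERFACE::getInterfaceDescriptor() const { \
        return I##INTERFACE::descriptor;                              \
    }                                                                 \
    I##INTERFACE::I##INTERFACE() {}

class IFoo {
public:
    TOY_DECLARE_META_INTERFACE(Foo)
};

TOY_IMPLEMENT_META_INTERFACE(Foo, "example.IFoo")
```

The macros are split across two places. The declare macro goes inside the class body and the implement macro goes at namespace scope, the same split IServiceManager follows between its header and its .cpp file with the real macros.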

Next, the implementation macro and what it expands to:

```cpp
#define IMPLEMENT_META_INTERFACE(INTERFACE, NAME)                       \
    const android::String16 I##INTERFACE::descriptor(NAME);             \
    const android::String16&                                            \
            I##INTERFACE::getInterfaceDescriptor() const {              \
        return I##INTERFACE::descriptor;                                \
    }                                                                   \
    android::sp<I##INTERFACE> I##INTERFACE::asInterface(                \
            const android::sp<android::IBinder>& obj)                   \
    {                                                                   \
        android::sp<I##INTERFACE> intr;                                 \
        if (obj != NULL) {                                              \
            intr = static_cast<I##INTERFACE*>(                          \
                obj->queryLocalInterface(                               \
                        I##INTERFACE::descriptor).get());               \
            if (intr == NULL) {                                         \
                intr = new Bp##INTERFACE(obj);                          \
            }                                                           \
        }                                                               \
        return intr;                                                    \
    }                                                                   \
    I##INTERFACE::I##INTERFACE() { }                                    \
    I##INTERFACE::~I##INTERFACE() { }
```

After substitution:

```cpp
const android::String16
        IServiceManager::descriptor("android.os.IServiceManager");

const android::String16& IServiceManager::getInterfaceDescriptor() const
{
    return IServiceManager::descriptor;
}

android::sp<IServiceManager> IServiceManager::asInterface(
        const android::sp<android::IBinder>& obj)
{
    android::sp<IServiceManager> intr;
    if (obj != NULL) {
        intr = static_cast<IServiceManager*>(
            obj->queryLocalInterface(IServiceManager::descriptor).get());
        if (intr == NULL) {
            // Equivalent to new BpServiceManager(BpBinder)
            intr = new BpServiceManager(obj);
        }
    }
    return intr;
}

IServiceManager::IServiceManager() {}
IServiceManager::~IServiceManager() {}
```
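The expanded asInterface makes a single decision. If queryLocalInterface finds a local object, it returns that object directly; otherwise it wraps the binder in a new BpServiceManager. The same decision can be reproduced with ordinary virtual calls. In this sketch all Toy* types and isProxy() are hypothetical, std::shared_ptr replaces sp<>, and the descriptor string is the one used in the expansion.

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>

struct ToyIServiceManager;  // forward declaration for queryLocalInterface()

// Hypothetical stand-in for IBinder.
struct ToyIBinder {
    virtual ~ToyIBinder() = default;
    // Default: not a local object, which is how a remote proxy (BpBinder) answers.
    virtual ToyIServiceManager* queryLocalInterface(const std::string& descriptor) {
        (void)descriptor;
        return nullptr;
    }
};

// Hypothetical stand-in for IServiceManager.
struct ToyIServiceManager {
    virtual ~ToyIServiceManager() = default;
    virtual bool isProxy() const = 0;
};

// Same-process implementation: it is both the binder and the interface.
struct ToyLocalServiceManager : ToyIBinder, ToyIServiceManager {
    ToyIServiceManager* queryLocalInterface(const std::string& descriptor) override {
        return descriptor == "android.os.IServiceManager" ? this : nullptr;
    }
    bool isProxy() const override { return false; }
};

// Cross-process proxy: wraps the remote binder, like BpServiceManager(BpBinder).
struct ToyBpServiceManager : ToyIServiceManager {
    explicit ToyBpServiceManager(std::shared_ptr<ToyIBinder> r) : remote(std::move(r)) {}
    bool isProxy() const override { return true; }
    std::shared_ptr<ToyIBinder> remote;
};

// Mirrors the expanded IServiceManager::asInterface().
std::shared_ptr<ToyIServiceManager> toyAsInterface(const std::shared_ptr<ToyIBinder>& obj) {
    if (!obj) {
        return nullptr;
    }
    if (ToyIServiceManager* local = obj->queryLocalInterface("android.os.IServiceManager")) {
        // Aliasing constructor: share obj's ownership instead of owning `local` twice.
        return std::shared_ptr<ToyIServiceManager>(obj, local);
    }
    return std::make_shared<ToyBpServiceManager>(obj);
}
```

This is why a caller living in the same process as the service talks to the object directly, while every other process gets a proxy wrapped around its BpBinder.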

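After expansion, the part we care about is asInterface. What exactly does it do?

If queryLocalInterface finds a local object in the same process, asInterface returns it directly. Otherwise it creates a BpServiceManager and passes the BpBinder to its constructor. This is the case for any client of ServiceManager, whose obj is a BpBinder. Let's see how BpServiceManager uses that BpBinder: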

BpServiceManager

```cpp
BpServiceManager(const sp<IBinder>& impl)
    : BpInterface<IServiceManager>(impl)
{
}
```

Now let's look at BpInterface:

```cpp
template<typename INTERFACE>
class BpInterface : public INTERFACE, public BpRefBase
{
public:
    BpInterface(const sp<IBinder>& remote);
protected:
    virtual IBinder* onAsBinder();
};
```

Here we go straight to BpRefBase, the base class BpInterface inherits from:

```cpp
BpRefBase::BpRefBase(const sp<IBinder>& o)
    : mRemote(o.get()), mRefs(NULL), mState(0)
{
    extendObjectLifetime(OBJECT_LIFETIME_WEAK);

    if (mRemote) {
        mRemote->incStrong(this);           // Removed on first IncStrong().
        mRefs = mRemote->createWeak(this);  // Held for our entire lifetime.
    }
}
```

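And there it is: mRemote holds the BpBinder and is what drives it. Correspondingly, when the Java layer calls mRemote.transact(), the call ends up in BpBinder's transact(), which does the actual communication.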

So this part also comes down to three things:

- new BpServiceManager(new BpBinder)

- remote.transact() makes the remote call

- remote = BpBinder

That wraps up how ServiceManager is registered and obtained.

Coming up in the next chapter

Registering and obtaining services over IPC, and the binder thread pool

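Likes, comments and shares welcome

Since you've read this far, please follow and leave a like. Your support is my biggest motivation~~~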