Preface

Hi everyone, I'm 老A.

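This chapter continues from the previous one. It mainly covers how Java-layer services are registered and obtained, plus the thread pool. We will use AMS (ActivityManagerService) as the example to see how the Java layer does this.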

SystemServer

SystemServer also starts from a main function. Let's step into it:

startService

/**

* The main entry point from zygote.

*/

public static void main(String[] args) {

new SystemServer().run();

}

It is very simple: it just calls run. Let's step into run:

private void run() {

if (System.currentTimeMillis() < EARLIEST_SUPPORTED_TIME) {

Slog.w(TAG, "System clock is before 1970; setting to 1970.");

SystemClock.setCurrentTimeMillis(EARLIEST_SUPPORTED_TIME);

}

if (!SystemProperties.get("persist.sys.language").isEmpty()) {

final String languageTag = Locale.getDefault().toLanguageTag();

SystemProperties.set("persist.sys.locale", languageTag);

SystemProperties.set("persist.sys.language", "");

SystemProperties.set("persist.sys.country", "");

SystemProperties.set("persist.sys.localevar", "");

}

Slog.i(TAG, "Entered the Android system server!");

EventLog.writeEvent(EventLogTags.BOOT_PROGRESS_SYSTEM_RUN, SystemClock.uptimeMillis());

SystemProperties.set("persist.sys.dalvik.vm.lib.2", VMRuntime.getRuntime().vmLibrary());

if (SamplingProfilerIntegration.isEnabled()) {

SamplingProfilerIntegration.start();

mProfilerSnapshotTimer = new Timer();

mProfilerSnapshotTimer.schedule(new TimerTask() {

@Override

public void run() {

SamplingProfilerIntegration.writeSnapshot("system_server", null);

}

}, SNAPSHOT_INTERVAL, SNAPSHOT_INTERVAL);

}

// Mmmmmm... more memory!

VMRuntime.getRuntime().clearGrowthLimit();

VMRuntime.getRuntime().setTargetHeapUtilization(0.8f);

Build.ensureFingerprintProperty();

Environment.setUserRequired(true);

BinderInternal.disableBackgroundScheduling(true);

android.os.Process.setThreadPriority(

android.os.Process.THREAD_PRIORITY_FOREGROUND);

android.os.Process.setCanSelfBackground(false);

Looper.prepareMainLooper();

System.loadLibrary("android_servers");

performPendingShutdown();

createSystemContext();

mSystemServiceManager = new SystemServiceManager(mSystemContext);

LocalServices.addService(SystemServiceManager.class, mSystemServiceManager);

try {

startBootstrapServices();

startCoreServices();

startOtherServices();

} catch (Throwable ex) {

throw ex;

}

if (StrictMode.conditionallyEnableDebugLogging()) {

Slog.i(TAG, "Enabled StrictMode for system server main thread.");

}

Looper.loop();

throw new RuntimeException("Main thread loop unexpectedly exited");

}

It first creates the SystemServiceManager (mSystemServiceManager = new SystemServiceManager(mSystemContext);) and then calls startBootstrapServices to start a series of related services. Let's step into startBootstrapServices:

private void startBootstrapServices() {

Installer installer = mSystemServiceManager.startService(Installer.class);

// Activity manager runs the show.

// Create AMS

mActivityManagerService = mSystemServiceManager.startService(

ActivityManagerService.Lifecycle.class).getService();

mActivityManagerService.setSystemServiceManager(mSystemServiceManager);

mActivityManagerService.setInstaller(installer);

// ...

}

Right after the Installer, the first thing it does is create the AMS service. Let's step into startService, which here is the startService method of SystemServiceManager:

public <T extends SystemService> T startService(Class<T> serviceClass) {

try {

final String name = serviceClass.getName();

Slog.i(TAG, "Starting " + name);

Trace.traceBegin(Trace.TRACE_TAG_SYSTEM_SERVER, "StartService " + name);

// Create the service.

if (!SystemService.class.isAssignableFrom(serviceClass)) {

throw new RuntimeException("Failed to create " + name

+ ": service must extend " + SystemService.class.getName());

}

final T service;

try {

// Create the serviceClass instance via reflection; here that is the Lifecycle object

Constructor<T> constructor = serviceClass.getConstructor(Context.class);

service = constructor.newInstance(mContext);

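} catch (ReflectiveOperationException ex) {

// The individual reflection exceptions are collapsed into one handler in this excerpt

throw new RuntimeException("Failed to create service " + name, ex);

}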

// Add the Lifecycle object to the ArrayList<SystemService> collection

mServices.add(service);

// Call Lifecycle's onStart method

service.onStart();

return service;

} finally {

Trace.traceEnd(Trace.TRACE_TAG_SYSTEM_SERVER);

}

}

The serviceClass instance is created via reflection (here, the Lifecycle object), added to the ArrayList<SystemService> collection, and then its onStart method is called:

public static final class Lifecycle extends SystemService {

private final ActivityManagerService mService;

public Lifecycle(Context context) {

super(context);

mService = new ActivityManagerService(context);

}

@Override

public void onStart() {

mService.start();

}

public ActivityManagerService getService() {

return mService;

}

}

As you can see, onStart calls ActivityManagerService's start method, which starts ActivityManagerService.
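The reflection-based start sequence above can be sketched in plain Java. This is only a minimal illustration, not the framework code: FakeContext, FakeSystemService, DemoLifecycle and MiniServiceManager are hypothetical stand-ins for Context, SystemService, ActivityManagerService.Lifecycle and SystemServiceManager.

```java
import java.lang.reflect.Constructor;
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-in for SystemServiceManager.
class MiniServiceManager {
    private final FakeContext context = new FakeContext();
    final List<FakeSystemService> services = new ArrayList<>();

    <T extends FakeSystemService> T startService(Class<T> serviceClass) {
        final T service;
        try {
            // Look up the (Context) constructor and instantiate via reflection.
            Constructor<T> constructor = serviceClass.getConstructor(FakeContext.class);
            service = constructor.newInstance(context);
        } catch (ReflectiveOperationException e) {
            throw new RuntimeException("Failed to create " + serviceClass.getName(), e);
        }
        services.add(service);   // register it in the list
        service.onStart();       // then start it
        return service;
    }

    public static void main(String[] args) {
        MiniServiceManager manager = new MiniServiceManager();
        DemoLifecycle lifecycle = manager.startService(DemoLifecycle.class);
        System.out.println("started=" + lifecycle.started
                + ", registered=" + manager.services.size());
    }
}

// Hypothetical stand-in for android.content.Context.
class FakeContext {}

// Hypothetical stand-in for com.android.server.SystemService.
abstract class FakeSystemService {
    protected final FakeContext context;
    FakeSystemService(FakeContext context) { this.context = context; }
    abstract void onStart();
}

// Plays the role of ActivityManagerService.Lifecycle.
class DemoLifecycle extends FakeSystemService {
    boolean started = false;
    public DemoLifecycle(FakeContext context) { super(context); }
    @Override void onStart() { started = true; }
}
```

The point of the wrapper class is that the manager only needs to know the SystemService contract; the real service object is created and owned by the Lifecycle instance.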

setSystemProcess

private void startBootstrapServices() {

// ...

//

// Set up the Application instance for the system process and get started.

mActivityManagerService.setSystemProcess();

}

After obtaining the AMS service and calling onStart, let's look at how AMS gets added to ServiceManager. Let's step into setSystemProcess:

public void setSystemProcess() {

try {

// Add AMS to ServiceManager

ServiceManager.addService(Context.ACTIVITY_SERVICE, this, true);

} catch (PackageManager.NameNotFoundException e) {

throw new RuntimeException(

"Unable to find android system package", e);

}

}

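As you can see, the first line adds AMS to ServiceManager. Let's look at how addService does this. The two-argument overload is shown below; the three-argument call above goes through the same getIServiceManager().addService path: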

public static void addService(String name, IBinder service) {

try {

getIServiceManager().addService(name, service, false);

} catch (RemoteException e) {

Log.e(TAG, "error in addService", e);

}

}

The add goes through getIServiceManager().addService, which splits into two steps: first getIServiceManager, then addService.

getIServiceManager()

Let's first look at the implementation of getIServiceManager():

private static IServiceManager getIServiceManager() {

if (sServiceManager != null) {

return sServiceManager;

}

// Find the service manager

sServiceManager = ServiceManagerNative.asInterface(BinderInternal.getContextObject());

return sServiceManager;

}

This is a cached singleton: if sServiceManager already exists it is returned, otherwise one is created. The creation again splits into two steps to analyze: ServiceManagerNative.asInterface and BinderInternal.getContextObject().

BinderInternal.getContextObject()

Let's see what this argument does:

public static final native IBinder getContextObject();

This goes straight to the native layer and ends up in android_os_BinderInternal_getContextObject in android_util_Binder.cpp. Let's step in:

static jobject android_os_BinderInternal_getContextObject(JNIEnv* env, jobject clazz)

{

sp<IBinder> b = ProcessState::self()->getContextObject(NULL);

return javaObjectForIBinder(env, b);

}

ProcessState::self() was covered in the previous chapter; the getContextObject(NULL) call here creates a BpBinder. Next, let's see what javaObjectForIBinder does:

jobject javaObjectForIBinder(JNIEnv* env, const sp<IBinder>& val)

{

if (val == NULL) return NULL;

if (val->checkSubclass(&gBinderOffsets)) {

jobject object = static_cast<JavaBBinder*>(val.get())->object();

LOGDEATH("objectForBinder %p: it's our own %p!\n", val.get(), object);

return object;

}

AutoMutex _l(mProxyLock);

// Look up an existing BinderProxy object

jobject object = (jobject)val->findObject(&gBinderProxyOffsets);

if (object != NULL) {

jobject res = jniGetReferent(env, object);

if (res != NULL) {

ALOGV("objectForBinder %p: found existing %p!\n", val.get(), res);

return res;

}

LOGDEATH("Proxy object %p of IBinder %p no longer in working set!!!", object, val.get());

android_atomic_dec(&gNumProxyRefs);

val->detachObject(&gBinderProxyOffsets);

env->DeleteGlobalRef(object);

}

// Not found, so create a new BinderProxy object

object = env->NewObject(gBinderProxyOffsets.mClass, gBinderProxyOffsets.mConstructor);

if (object != NULL) {

// Bind the BinderProxy to the BpBinder

env->SetLongField(object, gBinderProxyOffsets.mObject, (jlong)val.get());

val->incStrong((void*)javaObjectForIBinder);

jobject refObject = env->NewGlobalRef(

env->GetObjectField(object, gBinderProxyOffsets.mSelf));

// Save the BinderProxy in the BpBinder

val->attachObject(&gBinderProxyOffsets, refObject,

jnienv_to_javavm(env), proxy_cleanup);

sp<DeathRecipientList> drl = new DeathRecipientList;

drl->incStrong((void*)javaObjectForIBinder);

env->SetLongField(object, gBinderProxyOffsets.mOrgue, reinterpret_cast<jlong>(drl.get()));

android_atomic_inc(&gNumProxyRefs);

incRefsCreated(env);

}

// Return the BinderProxy

return object;

}

This method first looks for an existing BinderProxy. If none is found it creates a new one, and then binds the BinderProxy and the BpBinder to each other.

So what BinderInternal.getContextObject() ultimately returns is a BinderProxy object.
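The "find an existing proxy, otherwise create one and bind both sides" logic of javaObjectForIBinder can be sketched in Java as follows. This is a simplified model under stated assumptions: NativeBinder and JavaProxy are hypothetical stand-ins for BpBinder and BinderProxy, and a WeakReference field stands in for the attachObject / findObject bookkeeping done in native code.

```java
import java.lang.ref.WeakReference;

class ProxyCacheDemo {
    // Hypothetical stand-in for a native BpBinder.
    static final class NativeBinder {
        final int handle;
        WeakReference<JavaProxy> attached; // models attachObject()/findObject()
        NativeBinder(int handle) { this.handle = handle; }
    }

    // Hypothetical stand-in for BinderProxy; nativeObject models the mObject field.
    static final class JavaProxy {
        final NativeBinder nativeObject;
        JavaProxy(NativeBinder nativeObject) { this.nativeObject = nativeObject; }
    }

    static synchronized JavaProxy javaObjectFor(NativeBinder val) {
        if (val == null) return null;
        if (val.attached != null) {
            JavaProxy existing = val.attached.get();
            if (existing != null) return existing; // reuse the live proxy
            val.attached = null;                   // stale reference: detach it
        }
        JavaProxy proxy = new JavaProxy(val);          // proxy -> native object
        val.attached = new WeakReference<>(proxy);     // native object -> proxy
        return proxy;
    }

    public static void main(String[] args) {
        NativeBinder contextObject = new NativeBinder(0);
        JavaProxy first = javaObjectFor(contextObject);
        JavaProxy second = javaObjectFor(contextObject);
        System.out.println("same proxy reused: " + (first == second));
    }
}
```

Holding the proxy only weakly from the native side means the Java object can still be garbage collected once nothing in Java refers to it.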

ServiceManagerNative.asInterface

Next, let's see what happens in asInterface:

static public IServiceManager asInterface(IBinder obj) {

if(obj == null) {

return null;

}

IServiceManager in = (IServiceManager)obj.queryLocalInterface(descriptor);

if (in != null) {

return in;

}

return new ServiceManagerProxy(obj);

}

This calls BinderProxy's queryLocalInterface directly. Let's step in:

public IInterface queryLocalInterface(String descriptor) {

return null;

}

This method simply returns null, so asInterface falls through and returns a new ServiceManagerProxy object.

So what ServiceManagerNative.asInterface ultimately returns is a ServiceManagerProxy object.
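The asInterface pattern (use the local implementation if the binder lives in this process, otherwise wrap it in a proxy) can be sketched like this. All types below are hypothetical stand-ins: BinderLike models IBinder, LocalStub models a Binder subclass in the same process, RemoteHandle models BinderProxy, and DemoProxy models ServiceManagerProxy.

```java
class AsInterfaceDemo {
    static final String DESCRIPTOR = "demo.IDemoManager"; // hypothetical descriptor

    interface IDemoManager { String describe(); }

    // Models IBinder.queryLocalInterface.
    interface BinderLike { IDemoManager queryLocalInterface(String descriptor); }

    // Same-process object: answers the query with itself.
    static final class LocalStub implements BinderLike, IDemoManager {
        public IDemoManager queryLocalInterface(String descriptor) {
            return DESCRIPTOR.equals(descriptor) ? this : null;
        }
        public String describe() { return "local"; }
    }

    // Remote handle: like BinderProxy, it never has a local interface.
    static final class RemoteHandle implements BinderLike {
        public IDemoManager queryLocalInterface(String descriptor) { return null; }
    }

    // Wraps the remote handle; a real proxy would marshal calls through it.
    static final class DemoProxy implements IDemoManager {
        final BinderLike remote;
        DemoProxy(BinderLike remote) { this.remote = remote; }
        public String describe() { return "proxy"; }
    }

    static IDemoManager asInterface(BinderLike obj) {
        if (obj == null) return null;
        IDemoManager in = obj.queryLocalInterface(DESCRIPTOR);
        if (in != null) return in;   // same process: call directly
        return new DemoProxy(obj);   // other process: go through a proxy
    }

    public static void main(String[] args) {
        System.out.println(asInterface(new LocalStub()).describe());
        System.out.println(asInterface(new RemoteHandle()).describe());
    }
}
```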

addService

Next, let's look at the implementation of addService. ServiceManagerProxy is defined in the same source file as ServiceManagerNative:

public void addService(String name, IBinder service, boolean allowIsolated)

throws RemoteException {

Parcel data = Parcel.obtain();

Parcel reply = Parcel.obtain();

data.writeInterfaceToken(IServiceManager.descriptor);

data.writeString(name);

data.writeStrongBinder(service);

data.writeInt(allowIsolated ? 1 : 0);

mRemote.transact(ADD_SERVICE_TRANSACTION, data, reply, 0);

reply.recycle();

data.recycle();

}

The two methods we care about here are writeStrongBinder and transact.

writeStrongBinder

Let's look at the implementation of this method:

public final void writeStrongBinder(IBinder val) {

nativeWriteStrongBinder(mNativePtr, val);

}

In Parcel.java this maps to the native declaration:

private static native void nativeWriteStrongBinder(long nativePtr, IBinder val);

which corresponds to this entry in android_os_Parcel.cpp:

{"nativeWriteStrongBinder", "(JLandroid/os/IBinder;)V", (void*)android_os_Parcel_writeStrongBinder},

static void android_os_Parcel_writeStrongBinder(JNIEnv* env, jclass clazz, jlong nativePtr, jobject object)

{

Parcel* parcel = reinterpret_cast<Parcel*>(nativePtr);

if (parcel != NULL) {

const status_t err = parcel->writeStrongBinder(ibinderForJavaObject(env, object));

if (err != NO_ERROR) {

signalExceptionForError(env, clazz, err);

}

}

}

This ends up calling writeStrongBinder in Parcel.cpp. Let's step in:

status_t Parcel::writeStrongBinder(const sp<IBinder>& val)

{

return flatten_binder(ProcessState::self(), val, this);

}

Let's step into flatten_binder:

status_t flatten_binder(const sp<ProcessState>& /*proc*/,

const sp<IBinder>& binder, Parcel* out)

{

flat_binder_object obj;

obj.flags = 0x7f | FLAT_BINDER_FLAG_ACCEPTS_FDS;

// First check whether binder is null

if (binder != NULL) {

// Check whether it is a local binder (BBinder) or a remote one (BpBinder)

IBinder *local = binder->localBinder();

if (!local) {

BpBinder *proxy = binder->remoteBinder();

if (proxy == NULL) {

ALOGE("null proxy");

}

const int32_t handle = proxy ? proxy->handle() : 0;

obj.type = BINDER_TYPE_HANDLE;

obj.binder = 0; /* Don't pass uninitialized stack data to a remote process */

obj.handle = handle;

obj.cookie = 0;

} else {

obj.type = BINDER_TYPE_BINDER;

obj.binder = reinterpret_cast<uintptr_t>(local->getWeakRefs());

obj.cookie = reinterpret_cast<uintptr_t>(local);

}

} else {

obj.type = BINDER_TYPE_BINDER;

obj.binder = 0;

obj.cookie = 0;

}

return finish_flatten_binder(binder, obj, out);

}

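localBinder has two implementations: the default one in IBinder returns NULL, while the one in BBinder returns this. The object passed in here is a BBinder, so the result is non-null and we take the else branch.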

That branch stores the binder in obj. Next, let's see what finish_flatten_binder does:

inline static status_t finish_flatten_binder(

const sp<IBinder>& /*binder*/, const flat_binder_object& flat, Parcel* out)

{

return out->writeObject(flat, false);

}

This performs the serialized write; out here is the data Parcel from earlier.

So writeStrongBinder puts AMS into data.
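The three-way decision made by flatten_binder (null, remote, local) can be modeled in a few lines of Java. This is a sketch under assumptions: the Type enum replaces the real BINDER_TYPE_* constants, and Local / Remote / Flat are hypothetical stand-ins for BBinder, BpBinder and flat_binder_object.

```java
class FlattenDemo {
    enum Type { BINDER, HANDLE } // stands in for BINDER_TYPE_BINDER / BINDER_TYPE_HANDLE

    interface BinderLike {}

    // Models a BBinder: an object that lives in the current process.
    static final class Local implements BinderLike {}

    // Models a BpBinder: only a handle to an object in another process.
    static final class Remote implements BinderLike {
        final int handle;
        Remote(int handle) { this.handle = handle; }
    }

    // Models flat_binder_object.
    static final class Flat {
        Type type;
        int handle;     // meaningful only for HANDLE
        Object cookie;  // meaningful only for BINDER (the local object itself)
    }

    static Flat flatten(BinderLike binder) {
        Flat obj = new Flat();
        if (binder == null) {
            obj.type = Type.BINDER;       // null binder: BINDER type with empty fields
        } else if (binder instanceof Remote) {
            obj.type = Type.HANDLE;       // remote: pass the handle along
            obj.handle = ((Remote) binder).handle;
        } else {
            obj.type = Type.BINDER;       // local: pass a reference to the object
            obj.cookie = binder;
        }
        return obj;
    }

    public static void main(String[] args) {
        System.out.println(flatten(new Local()).type);
        System.out.println(flatten(new Remote(7)).type);
    }
}
```

When AMS registers itself it is the local case, which is why the driver later has to translate the BINDER entry into a handle for the receiving process.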

transact

Next, let's look at the implementation of transact.

mRemote here is the BinderProxy, so let's step into BinderProxy's transact:

public boolean transact(int code, Parcel data, Parcel reply, int flags) throws RemoteException {

Binder.checkParcel(this, code, data, "Unreasonably large binder buffer");

return transactNative(code, data, reply, flags);

}

This again calls into the native layer, ending up in android_os_BinderProxy_transact:

{"transactNative", "(ILandroid/os/Parcel;Landroid/os/Parcel;I)Z", (void*)android_os_BinderProxy_transact},

Let's step in:

static jboolean android_os_BinderProxy_transact(JNIEnv* env, jobject obj,

jint code, jobject dataObj, jobject replyObj, jint flags) // throws RemoteException

{

if (dataObj == NULL) {

jniThrowNullPointerException(env, NULL);

return JNI_FALSE;

}

Parcel* data = parcelForJavaObject(env, dataObj);

if (data == NULL) {

return JNI_FALSE;

}

Parcel* reply = parcelForJavaObject(env, replyObj);

if (reply == NULL && replyObj != NULL) {

return JNI_FALSE;

}

// Get the BpBinder

IBinder* target = (IBinder*)

env->GetLongField(obj, gBinderProxyOffsets.mObject);

if (target == NULL) {

jniThrowException(env, "java/lang/IllegalStateException", "Binder has been finalized!");

return JNI_FALSE;

}

ALOGV("Java code calling transact on %p in Java object %p with code %" PRId32 "\n",

target, obj, code);

bool time_binder_calls;

int64_t start_millis;

if (kEnableBinderSample) {

// Only log the binder call duration for things on the Java-level main thread.

// But if we don't

time_binder_calls = should_time_binder_calls();

if (time_binder_calls) {

start_millis = uptimeMillis();

}

}

//printf("Transact from Java code to %p sending: ", target); data->print();

// Call BpBinder's transact method

status_t err = target->transact(code, *data, reply, flags);

//if (reply) printf("Transact from Java code to %p received: ", target); reply->print();

if (kEnableBinderSample) {

if (time_binder_calls) {

conditionally_log_binder_call(start_millis, target, code);

}

}

if (err == NO_ERROR) {

return JNI_TRUE;

} else if (err == UNKNOWN_TRANSACTION) {

return JNI_FALSE;

}

signalExceptionForError(env, obj, err, true /*canThrowRemoteException*/, data->dataSize());

return JNI_FALSE;

}

It first obtains data and reply, then the BpBinder, and then calls BpBinder's transact. Let's see how BpBinder::transact is implemented:

status_t BpBinder::transact(

uint32_t code, const Parcel& data, Parcel* reply, uint32_t flags)

{

// Once a binder has died, it will never come back to life.

if (mAlive) {

status_t status = IPCThreadState::self()->transact(

mHandle, code, data, reply, flags);

if (status == DEAD_OBJECT) mAlive = 0;

return status;

}

return DEAD_OBJECT;

}

This calls IPCThreadState's transact directly. Let's jump there:

status_t IPCThreadState::transact(int32_t handle,

uint32_t code, const Parcel& data,

Parcel* reply, uint32_t flags)

{

status_t err = data.errorCheck();

flags |= TF_ACCEPT_FDS;

IF_LOG_TRANSACTIONS() {

TextOutput::Bundle _b(alog);

alog << "BC_TRANSACTION thr " << (void*)pthread_self() << " / hand "

<< handle << " / code " << TypeCode(code) << ": "

<< indent << data << dedent << endl;

}

if (err == NO_ERROR) {

LOG_ONEWAY(">>>> SEND from pid %d uid %d %s", getpid(), getuid(),

(flags & TF_ONE_WAY) == 0 ? "READ REPLY" : "ONE WAY");

err = writeTransactionData(BC_TRANSACTION, flags, handle, code, data, NULL);

}

if (err != NO_ERROR) {

if (reply) reply->setError(err);

return (mLastError = err);

}

if ((flags & TF_ONE_WAY) == 0) {

#if 0

if (code == 4) { // relayout

ALOGI(">>>>>> CALLING transaction 4");

} else {

ALOGI(">>>>>> CALLING transaction %d", code);

}

#endif

if (reply) {

err = waitForResponse(reply);

} else {

Parcel fakeReply;

err = waitForResponse(&fakeReply);

}

#if 0

if (code == 4) { // relayout

ALOGI("<<<<<< RETURNING transaction 4");

} else {

ALOGI("<<<<<< RETURNING transaction %d", code);

}

#endif

IF_LOG_TRANSACTIONS() {

TextOutput::Bundle _b(alog);

alog << "BR_REPLY thr " << (void*)pthread_self() << " / hand "

<< handle << ": ";

if (reply) alog << indent << *reply << dedent << endl;

else alog << "(none requested)" << endl;

}

} else {

err = waitForResponse(NULL, NULL);

}

return err;

}

The flags field can carry several values:

flags |= TF_ACCEPT_FDS;

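TF_ACCEPT_FDS = 0x10: file descriptors are allowed in the reply;

TF_ONE_WAY: the transaction is asynchronous and the caller does not wait for a reply;

TF_ROOT_OBJECT: the contents are the root object;

TF_STATUS_CODE: the contents are a 32-bit status code;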
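How these bits are combined and tested can be shown with a small Java sketch. Only TF_ACCEPT_FDS = 0x10 is given in the text; the other three values are assumptions taken from my reading of the kernel's binder.h, and prepare / expectsReply are hypothetical helper names.

```java
class TransactionFlagsDemo {
    // TF_ACCEPT_FDS is stated in the text; the other values are assumed (binder.h).
    static final int TF_ONE_WAY     = 0x01;
    static final int TF_ROOT_OBJECT = 0x04;
    static final int TF_STATUS_CODE = 0x08;
    static final int TF_ACCEPT_FDS  = 0x10;

    // Mirrors "flags |= TF_ACCEPT_FDS" at the top of IPCThreadState::transact.
    static int prepare(int flags) {
        return flags | TF_ACCEPT_FDS;
    }

    // Mirrors the "(flags & TF_ONE_WAY) == 0" check: synchronous calls wait for a reply.
    static boolean expectsReply(int flags) {
        return (flags & TF_ONE_WAY) == 0;
    }

    public static void main(String[] args) {
        int addServiceFlags = prepare(0); // addService passes flags = 0
        System.out.println("flags=0x" + Integer.toHexString(addServiceFlags)
                + ", expectsReply=" + expectsReply(addServiceFlags));
    }
}
```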

writeTransactionData

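addService passes flags = 0, so TF_ONE_WAY is not set and this is a synchronous call. Before waiting for the reply, transact calls writeTransactionData. Let's step in: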

status_t IPCThreadState::writeTransactionData(int32_t cmd, uint32_t binderFlags,

int32_t handle, uint32_t code, const Parcel& data, status_t* statusBuffer)

{

binder_transaction_data tr;

tr.target.ptr = 0; /* Don't pass uninitialized stack data to a remote process */

tr.target.handle = handle;

tr.code = code;

tr.flags = binderFlags;

tr.cookie = 0;

tr.sender_pid = 0;

tr.sender_euid = 0;

const status_t err = data.errorCheck();

if (err == NO_ERROR) {

tr.data_size = data.ipcDataSize();

tr.data.ptr.buffer = data.ipcData();

tr.offsets_size = data.ipcObjectsCount()*sizeof(binder_size_t);

tr.data.ptr.offsets = data.ipcObjects();

} else if (statusBuffer) {

tr.flags |= TF_STATUS_CODE;

*statusBuffer = err;

tr.data_size = sizeof(status_t);

tr.data.ptr.buffer = reinterpret_cast<uintptr_t>(statusBuffer);

tr.offsets_size = 0;

tr.data.ptr.offsets = 0;

} else {

return (mLastError = err);

}

// The key step: write the BC_TRANSACTION command to mOut

mOut.writeInt32(cmd);

mOut.write(&tr, sizeof(tr));

return NO_ERROR;

}

The core of this method is writing the BC_TRANSACTION command, followed by the binder_transaction_data, to mOut.
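The idea of "a command word followed by a fixed-size header, queued in an output buffer and not yet sent" can be sketched with a ByteBuffer. This is a toy layout, not the real binder_transaction_data: CMD_TRANSACTION is a hypothetical value, the header has only four int fields, and the payload is represented by its length instead of a pointer.

```java
import java.nio.ByteBuffer;
import java.nio.ByteOrder;

class OutBufferDemo {
    static final int CMD_TRANSACTION = 1; // hypothetical; not the real BC_TRANSACTION encoding

    // Plays the role of mOut: commands accumulate here until the driver is called.
    final ByteBuffer out = ByteBuffer.allocate(256).order(ByteOrder.LITTLE_ENDIAN);

    void writeTransactionData(int cmd, int flags, int handle, int code, byte[] payload) {
        out.putInt(cmd);             // mOut.writeInt32(cmd)
        out.putInt(handle);          // tr.target.handle
        out.putInt(code);            // tr.code
        out.putInt(flags);           // tr.flags
        out.putInt(payload.length);  // tr.data_size (the real struct also carries pointers)
    }

    public static void main(String[] args) {
        OutBufferDemo demo = new OutBufferDemo();
        demo.writeTransactionData(CMD_TRANSACTION, 0x10, 0, 3, new byte[8]);
        System.out.println("bytes queued: " + demo.out.position());
    }
}
```

Nothing reaches the driver at this point; the queued bytes are only handed over later, when talkWithDriver issues the ioctl.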

waitForResponse

Back in transact, the next call is waitForResponse:

status_t IPCThreadState::waitForResponse(Parcel *reply, status_t *acquireResult)

{

uint32_t cmd;

int32_t err;

while (1) {

if ((err=talkWithDriver()) < NO_ERROR) break;

err = mIn.errorCheck();

if (err < NO_ERROR) break;

if (mIn.dataAvail() == 0) continue;

cmd = (uint32_t)mIn.readInt32();

IF_LOG_COMMANDS() {

alog << "Processing waitForResponse Command: "

<< getReturnString(cmd) << endl;

}

switch (cmd) {

case BR_TRANSACTION_COMPLETE:

if (!reply && !acquireResult) goto finish;

break;

case BR_DEAD_REPLY:

err = DEAD_OBJECT;

goto finish;

case BR_FAILED_REPLY:

err = FAILED_TRANSACTION;

goto finish;

case BR_ACQUIRE_RESULT:

{

ALOG_ASSERT(acquireResult != NULL, "Unexpected brACQUIRE_RESULT");

const int32_t result = mIn.readInt32();

if (!acquireResult) continue;

*acquireResult = result ? NO_ERROR : INVALID_OPERATION;

}

goto finish;

case BR_REPLY:

{

binder_transaction_data tr;

err = mIn.read(&tr, sizeof(tr));

ALOG_ASSERT(err == NO_ERROR, "Not enough command data for brREPLY");

if (err != NO_ERROR) goto finish;

if (reply) {

if ((tr.flags & TF_STATUS_CODE) == 0) {

reply->ipcSetDataReference(

reinterpret_cast<const uint8_t*>(tr.data.ptr.buffer),

tr.data_size,

reinterpret_cast<const binder_size_t*>(tr.data.ptr.offsets),

tr.offsets_size/sizeof(binder_size_t),

freeBuffer, this);

} else {

err = *reinterpret_cast<const status_t*>(tr.data.ptr.buffer);

freeBuffer(NULL,

reinterpret_cast<const uint8_t*>(tr.data.ptr.buffer),

tr.data_size,

reinterpret_cast<const binder_size_t*>(tr.data.ptr.offsets),

tr.offsets_size/sizeof(binder_size_t), this);

}

} else {

freeBuffer(NULL,

reinterpret_cast<const uint8_t*>(tr.data.ptr.buffer),

tr.data_size,

reinterpret_cast<const binder_size_t*>(tr.data.ptr.offsets),

tr.offsets_size/sizeof(binder_size_t), this);

continue;

}

}

goto finish;

default:

err = executeCommand(cmd);

if (err != NO_ERROR) goto finish;

break;

}

}

finish:

if (err != NO_ERROR) {

if (acquireResult) *acquireResult = err;

if (reply) reply->setError(err);

mLastError = err;

}

return err;

}

This method does quite a lot, but the core logic is talkWithDriver and …
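The control flow of the waitForResponse loop can be modeled in Java with a queue standing in for the driver. This is a sketch under assumptions: the Ret and Outcome enums replace the real BR_* commands and status codes, only four commands are modeled, and polling the queue stands in for talkWithDriver plus mIn.readInt32().

```java
import java.util.ArrayDeque;
import java.util.Deque;

class WaitLoopDemo {
    enum Ret { TRANSACTION_COMPLETE, REPLY, DEAD_REPLY, FAILED_REPLY } // models BR_* commands
    enum Outcome { OK, DEAD_OBJECT, FAILED_TRANSACTION }               // models status_t results

    static Outcome waitForResponse(Deque<Ret> driver, boolean wantReply) {
        while (true) {
            Ret cmd = driver.poll(); // stands in for talkWithDriver() + mIn.readInt32()
            if (cmd == null) throw new IllegalStateException("driver queue drained");
            switch (cmd) {
                case TRANSACTION_COMPLETE:
                    // One-way calls are done here; synchronous calls keep waiting.
                    if (!wantReply) return Outcome.OK;
                    break;
                case DEAD_REPLY:
                    return Outcome.DEAD_OBJECT;
                case FAILED_REPLY:
                    return Outcome.FAILED_TRANSACTION;
                case REPLY:
                    // With a reply parcel the loop finishes; otherwise it keeps going.
                    if (wantReply) return Outcome.OK;
                    break;
            }
        }
    }

    public static void main(String[] args) {
        Deque<Ret> driver = new ArrayDeque<>();
        driver.add(Ret.TRANSACTION_COMPLETE);
        driver.add(Ret.REPLY);
        System.out.println(waitForResponse(driver, true));
    }
}
```

For a synchronous call such as addService, the loop therefore sees the completion notice first and only returns once the reply itself arrives.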

talkWithDriver

Let's look at the logic of talkWithDriver:

status_t IPCThreadState::talkWithDriver(bool doReceive)

{

if (mProcess->mDriverFD <= 0) {

return -EBADF;

}

binder_write_read bwr;

// Is the read buffer empty?

const bool needRead = mIn.dataPosition() >= mIn.dataSize();

// We don't want to write anything if we are still reading

// from data left in the input buffer and the caller

// has requested to read the next data.

const size_t outAvail = (!doReceive || needRead) ? mOut.dataSize() : 0;

bwr.write_size = outAvail;

bwr.write_buffer = (uintptr_t)mOut.data();

// This is what we'll read.

if (doReceive && needRead) {

bwr.read_size = mIn.dataCapacity();

bwr.read_buffer = (uintptr_t)mIn.data();

} else {

bwr.read_size = 0;

bwr.read_buffer = 0;

}

IF_LOG_COMMANDS() {

TextOutput::Bundle _b(alog);

if (outAvail != 0) {

alog << "Sending commands to driver: " << indent;

const void* cmds = (const void*)bwr.write_buffer;

const void* end = ((const uint8_t*)cmds)+bwr.write_size;

alog << HexDump(cmds, bwr.write_size) << endl;

while (cmds < end) cmds = printCommand(alog, cmds);

alog << dedent;

}

alog << "Size of receive buffer: " << bwr.read_size

<< ", needRead: " << needRead << ", doReceive: " << doReceive << endl;

}

// Return immediately if there is nothing to do.

if ((bwr.write_size == 0) && (bwr.read_size == 0)) return NO_ERROR;

bwr.write_consumed = 0;

bwr.read_consumed = 0;

status_t err;

do {

IF_LOG_COMMANDS() {

alog << "About to read/write, write size = " << mOut.dataSize() << endl;

}

#if defined(HAVE_ANDROID_OS)

// 1. Call into the Binder driver's binder_ioctl_write_read via ioctl to perform the write (binder_thread_write)

if (ioctl(mProcess->mDriverFD, BINDER_WRITE_READ, &bwr) >= 0)

err = NO_ERROR;

else

err = -errno;

#else

err = INVALID_OPERATION;

#endif

if (mProcess->mDriverFD <= 0) {

err = -EBADF;

}

IF_LOG_COMMANDS() {

alog << "Finished read/write, write size = " << mOut.dataSize() << endl;

}

} while (err == -EINTR);

IF_LOG_COMMANDS() {

alog << "Our err: " << (void*)(intptr_t)err << ", write consumed: "

<< bwr.write_consumed << " (of " << mOut.dataSize()

<< "), read consumed: " << bwr.read_consumed << endl;

}

if (err >= NO_ERROR) {

if (bwr.write_consumed > 0) {

if (bwr.write_consumed < mOut.dataSize())

mOut.remove(0, bwr.write_consumed);

else

mOut.setDataSize(0);

}

if (bwr.read_consumed > 0) {

mIn.setDataSize(bwr.read_consumed);

mIn.setDataPosition(0);

}

IF_LOG_COMMANDS() {

TextOutput::Bundle _b(alog);

alog << "Remaining data size: " << mOut.dataSize() << endl;

alog << "Received commands from driver: " << indent;

const void* cmds = mIn.data();

const void* end = mIn.data() + mIn.dataSize();

alog << HexDump(cmds, mIn.dataSize()) << endl;

while (cmds < end) cmds = printReturnCommand(alog, cmds);

alog << dedent;

}

return NO_ERROR;

}

return err;

}

1. The ioctl call reaches the Binder driver's binder_ioctl_write_read, which performs the write through binder_thread_write; the case executed there is BC_TRANSACTION:

static int binder_thread_write(struct binder_proc *proc,

struct binder_thread *thread,

binder_uintptr_t binder_buffer, size_t size,

binder_size_t *consumed)

{

switch (cmd) {

case BC_TRANSACTION:

case BC_REPLY: {

struct binder_transaction_data tr;

if (copy_from_user(&tr, ptr, sizeof(tr)))

return -EFAULT;

ptr += sizeof(tr);

binder_transaction(proc, thread, &tr, cmd == BC_REPLY, 0);

break;

}

}

}

This case calls binder_transaction directly. The function is fairly long, so let's go through it step by step:

static void binder_transaction(struct binder_proc *proc,

struct binder_thread *thread,

struct binder_transaction_data *tr, int reply,

binder_size_t extra_buffers_size)

{

int ret;

struct binder_transaction *t;

struct binder_work *tcomplete;

binder_size_t *offp, *off_end, *off_start;

binder_size_t off_min;

u8 *sg_bufp, *sg_buf_end;

struct binder_proc *target_proc;

struct binder_thread *target_thread = NULL;

struct binder_node *target_node = NULL;

struct list_head *target_list;

wait_queue_head_t *target_wait;

struct binder_transaction *in_reply_to = NULL;

struct binder_transaction_log_entry *e;

uint32_t return_error;

struct binder_buffer_object *last_fixup_obj = NULL;

binder_size_t last_fixup_min_off = 0;

struct binder_context *context = proc->context;

e = binder_transaction_log_add(&binder_transaction_log);

e->call_type = reply ? 2 : !!(tr->flags & TF_ONE_WAY);

e->from_proc = proc->pid;

e->from_thread = thread->pid;

e->target_handle = tr->target.handle;

e->data_size = tr->data_size;

e->offsets_size = tr->offsets_size;

e->context_name = proc->context->name;

if (reply) {

in_reply_to = thread->transaction_stack;

if (in_reply_to == NULL) {

binder_user_error("%d:%d got reply transaction with no transaction stack\n",

proc->pid, thread->pid);

return_error = BR_FAILED_REPLY;

goto err_empty_call_stack;

}

binder_set_nice(in_reply_to->saved_priority);

if (in_reply_to->to_thread != thread) {

binder_user_error("%d:%d got reply transaction with bad transaction stack, transaction %d has target %d:%d\n",

proc->pid, thread->pid, in_reply_to->debug_id,

in_reply_to->to_proc ?

in_reply_to->to_proc->pid : 0,

in_reply_to->to_thread ?

in_reply_to->to_thread->pid : 0);

return_error = BR_FAILED_REPLY;

in_reply_to = NULL;

goto err_bad_call_stack;

}

thread->transaction_stack = in_reply_to->to_parent;

target_thread = in_reply_to->from;

if (target_thread == NULL) {

return_error = BR_DEAD_REPLY;

goto err_dead_binder;

}

if (target_thread->transaction_stack != in_reply_to) {

binder_user_error("%d:%d got reply transaction with bad target transaction stack %d, expected %d\n",

proc->pid, thread->pid,

target_thread->transaction_stack ?

target_thread->transaction_stack->debug_id : 0,

in_reply_to->debug_id);

return_error = BR_FAILED_REPLY;

in_reply_to = NULL;

target_thread = NULL;

goto err_dead_binder;

}

target_proc = target_thread->proc;

} else {

// cmd == BC_TRANSACTION, so reply is false and this branch runs

if (tr->target.handle) {

struct binder_ref *ref;

ref = binder_get_ref(proc, tr->target.handle, true);

if (ref == NULL) {

binder_user_error("%d:%d got transaction to invalid handle\n",

proc->pid, thread->pid);

return_error = BR_FAILED_REPLY;

goto err_invalid_target_handle;

}

target_node = ref->node;

} else {

// Handle is 0, so take the context manager's binder node (ServiceManager)

target_node = context->binder_context_mgr_node;

if (target_node == NULL) {

return_error = BR_DEAD_REPLY;

goto err_no_context_mgr_node;

}

}

e->to_node = target_node->debug_id;

target_proc = target_node->proc;

if (target_proc == NULL) {

return_error = BR_DEAD_REPLY;

goto err_dead_binder;

}

if (security_binder_transaction(proc->tsk,

target_proc->tsk) < 0) {

return_error = BR_FAILED_REPLY;

goto err_invalid_target_handle;

}

if (!(tr->flags & TF_ONE_WAY) && thread->transaction_stack) {

struct binder_transaction *tmp;

tmp = thread->transaction_stack;

if (tmp->to_thread != thread) {

binder_user_error("%d:%d got new transaction with bad transaction stack, transaction %d has target %d:%d\n",

proc->pid, thread->pid, tmp->debug_id,

tmp->to_proc ? tmp->to_proc->pid : 0,

tmp->to_thread ?

tmp->to_thread->pid : 0);

return_error = BR_FAILED_REPLY;

goto err_bad_call_stack;

}

while (tmp) {

if (tmp->from && tmp->from->proc == target_proc)

target_thread = tmp->from;

tmp = tmp->from_parent;

}

}

}

if (target_thread) {

e->to_thread = target_thread->pid;

target_list = &target_thread->todo;

target_wait = &target_thread->wait;

} else {

target_list = &target_proc->todo;

target_wait = &target_proc->wait;

}

e->to_proc = target_proc->pid;

/* TODO: reuse incoming transaction for reply */

// Allocate and zero-initialize t

t = kzalloc(sizeof(*t), GFP_KERNEL);

if (t == NULL) {

return_error = BR_FAILED_REPLY;

goto err_alloc_t_failed;

}

binder_stats_created(BINDER_STAT_TRANSACTION);

// Allocate and zero-initialize tcomplete

tcomplete = kzalloc(sizeof(*tcomplete), GFP_KERNEL);

if (tcomplete == NULL) {

return_error = BR_FAILED_REPLY;

goto err_alloc_tcomplete_failed;

}

binder_stats_created(BINDER_STAT_TRANSACTION_COMPLETE);

t->debug_id = ++binder_last_id;

e->debug_id = t->debug_id;

if (reply)

binder_debug(BINDER_DEBUG_TRANSACTION,

"%d:%d BC_REPLY %d -> %d:%d, data %016llx-%016llx size %lld-%lld-%lld\n",

proc->pid, thread->pid, t->debug_id,

target_proc->pid, target_thread->pid,

(u64)tr->data.ptr.buffer,

(u64)tr->data.ptr.offsets,

(u64)tr->data_size, (u64)tr->offsets_size,

(u64)extra_buffers_size);

else

binder_debug(BINDER_DEBUG_TRANSACTION,

"%d:%d BC_TRANSACTION %d -> %d - node %d, data %016llx-%016llx size %lld-%lld-%lld\n",

proc->pid, thread->pid, t->debug_id,

target_proc->pid, target_node->debug_id,

(u64)tr->data.ptr.buffer,

(u64)tr->data.ptr.offsets,

(u64)tr->data_size, (u64)tr->offsets_size,

(u64)extra_buffers_size);

if (!reply && !(tr->flags & TF_ONE_WAY))

t->from = thread;

else

t->from = NULL;

t->sender_euid = task_euid(proc->tsk);

t->to_proc = target_proc;

t->to_thread = target_thread;

t->code = tr->code;

t->flags = tr->flags;

t->priority = task_nice(current);

trace_binder_transaction(reply, t, target_node);

// Allocate a buffer from the target process's mmap-ed region

t->buffer = binder_alloc_buf(target_proc, tr->data_size,

tr->offsets_size, extra_buffers_size,

!reply && (t->flags & TF_ONE_WAY));

if (t->buffer == NULL) {

return_error = BR_FAILED_REPLY;

goto err_binder_alloc_buf_failed;

}

t->buffer->allow_user_free = 0;

t->buffer->debug_id = t->debug_id;

t->buffer->transaction = t;

t->buffer->target_node = target_node;

trace_binder_transaction_alloc_buf(t->buffer);

if (target_node)

binder_inc_node(target_node, 1, 0, NULL);

off_start = (binder_size_t *)(t->buffer->data +

ALIGN(tr->data_size, sizeof(void *)));

offp = off_start;

// Copy the data; this is where Binder's single data copy happens

if (copy_from_user(t->buffer->data, (const void __user *)(uintptr_t)

tr->data.ptr.buffer, tr->data_size)) {

binder_user_error("%d:%d got transaction with invalid data ptr\n",

proc->pid, thread->pid);

return_error = BR_FAILED_REPLY;

goto err_copy_data_failed;

}

if (copy_from_user(offp, (const void __user *)(uintptr_t)

tr->data.ptr.offsets, tr->offsets_size)) {

binder_user_error("%d:%d got transaction with invalid offsets ptr\n",

proc->pid, thread->pid);

return_error = BR_FAILED_REPLY;

goto err_copy_data_failed;

}

if (!IS_ALIGNED(tr->offsets_size, sizeof(binder_size_t))) {

binder_user_error("%d:%d got transaction with invalid offsets size, %lld\n",

proc->pid, thread->pid, (u64)tr->offsets_size);

return_error = BR_FAILED_REPLY;

goto err_bad_offset;

}

if (!IS_ALIGNED(extra_buffers_size, sizeof(u64))) {

binder_user_error("%d:%d got transaction with unaligned buffers size, %lld\n",

proc->pid, thread->pid,

(u64)extra_buffers_size);

return_error = BR_FAILED_REPLY;

goto err_bad_offset;

}

off_end = (void *)off_start + tr->offsets_size;

sg_bufp = (u8 *)(PTR_ALIGN(off_end, sizeof(void *)));

sg_buf_end = sg_bufp + extra_buffers_size;

off_min = 0;

for (; offp < off_end; offp++) {

struct binder_object_header *hdr;

size_t object_size = binder_validate_object(t->buffer, *offp);

if (object_size == 0 || *offp < off_min) {

binder_user_error("%d:%d got transaction with invalid offset (%lld, min %lld max %lld) or object.\n",

proc->pid, thread->pid, (u64)*offp,

(u64)off_min,

(u64)t->buffer->data_size);

return_error = BR_FAILED_REPLY;

goto err_bad_offset;

}

hdr = (struct binder_object_header *)(t->buffer->data + *offp);

off_min = *offp + object_size;

switch (hdr->type) {

case BINDER_TYPE_BINDER:

case BINDER_TYPE_WEAK_BINDER: {

struct flat_binder_object *fp;

fp = to_flat_binder_object(hdr);

ret = binder_translate_binder(fp, t, thread);

if (ret < 0) {

return_error = BR_FAILED_REPLY;

goto err_translate_failed;

}

} break;

case BINDER_TYPE_HANDLE:

case BINDER_TYPE_WEAK_HANDLE: {

struct flat_binder_object *fp;

fp = to_flat_binder_object(hdr);

ret = binder_translate_handle(fp, t, thread);

if (ret < 0) {

return_error = BR_FAILED_REPLY;

goto err_translate_failed;

}

} break;

case BINDER_TYPE_FD: {

struct binder_fd_object *fp = to_binder_fd_object(hdr);

int target_fd = binder_translate_fd(fp->fd, t, thread,

in_reply_to);

if (target_fd < 0) {

return_error = BR_FAILED_REPLY;

goto err_translate_failed;

}

fp->pad_binder = 0;

fp->fd = target_fd;

} break;

case BINDER_TYPE_FDA: {

struct binder_fd_array_object *fda =

to_binder_fd_array_object(hdr);

struct binder_buffer_object *parent =

binder_validate_ptr(t->buffer, fda->parent,

off_start,

offp - off_start);

if (!parent) {

binder_user_error("%d:%d got transaction with invalid parent offset or type\n",

proc->pid, thread->pid);

return_error = BR_FAILED_REPLY;

goto err_bad_parent;

}

if (!binder_validate_fixup(t->buffer, off_start,

parent, fda->parent_offset,

last_fixup_obj,

last_fixup_min_off)) {

binder_user_error("%d:%d got transaction with out-of-order buffer fixup\n",

proc->pid, thread->pid);

return_error = BR_FAILED_REPLY;

goto err_bad_parent;

}

ret = binder_translate_fd_array(fda, parent, t, thread,

in_reply_to);

if (ret < 0) {

return_error = BR_FAILED_REPLY;

goto err_translate_failed;

}

last_fixup_obj = parent;

last_fixup_min_off =

fda->parent_offset + sizeof(u32) * fda->num_fds;

} break;

case BINDER_TYPE_PTR: {

struct binder_buffer_object *bp =

to_binder_buffer_object(hdr);

size_t buf_left = sg_buf_end - sg_bufp;

if (bp->length > buf_left) {

binder_user_error("%d:%d got transaction with too large buffer\n",

proc->pid, thread->pid);

return_error = BR_FAILED_REPLY;

goto err_bad_offset;

}

if (copy_from_user(sg_bufp,

(const void __user *)(uintptr_t)

bp->buffer, bp->length)) {

binder_user_error("%d:%d got transaction with invalid offsets ptr\n",

proc->pid, thread->pid);

return_error = BR_FAILED_REPLY;

goto err_copy_data_failed;

}

/* Fixup buffer pointer to target proc address space */

bp->buffer = (uintptr_t)sg_bufp +

target_proc->user_buffer_offset;

sg_bufp += ALIGN(bp->length, sizeof(u64));

ret = binder_fixup_parent(t, thread, bp, off_start,

offp - off_start,

last_fixup_obj,

last_fixup_min_off);

if (ret < 0) {

return_error = BR_FAILED_REPLY;

goto err_translate_failed;

}

last_fixup_obj = bp;

last_fixup_min_off = 0;

} break;

default:

binder_user_error("%d:%d got transaction with invalid object type, %x\n",

proc->pid, thread->pid, hdr->type);

return_error = BR_FAILED_REPLY;

goto err_bad_object_type;

}

}

if (reply) {

BUG_ON(t->buffer->async_transaction != 0);

binder_pop_transaction(target_thread, in_reply_to);

} else if (!(t->flags & TF_ONE_WAY)) {

BUG_ON(t->buffer->async_transaction != 0);

t->need_reply = 1;

t->from_parent = thread->transaction_stack;

thread->transaction_stack = t;

} else {

BUG_ON(target_node == NULL);

BUG_ON(t->buffer->async_transaction != 1);

if (target_node->has_async_transaction) {

target_list = &target_node->async_todo;

target_wait = NULL;

} else

target_node->has_async_transaction = 1;

}

t->work.type = BINDER_WORK_TRANSACTION;

list_add_tail(&t->work.entry, target_list);

tcomplete->type = BINDER_WORK_TRANSACTION_COMPLETE;

list_add_tail(&tcomplete->entry, &thread->todo);

if (target_wait)

wake_up_interruptible(target_wait);

return;

err_translate_failed:

err_bad_object_type:

err_bad_offset:

err_bad_parent:

err_copy_data_failed:

trace_binder_transaction_failed_buffer_release(t->buffer);

binder_transaction_buffer_release(target_proc, t->buffer, offp);

t->buffer->transaction = NULL;

binder_free_buf(target_proc, t->buffer);

err_binder_alloc_buf_failed:

kfree(tcomplete);

binder_stats_deleted(BINDER_STAT_TRANSACTION_COMPLETE);

err_alloc_tcomplete_failed:

kfree(t);

binder_stats_deleted(BINDER_STAT_TRANSACTION);

err_alloc_t_failed:

err_bad_call_stack:

err_empty_call_stack:

err_dead_binder:

err_invalid_target_handle:

err_no_context_mgr_node:

binder_debug(BINDER_DEBUG_FAILED_TRANSACTION,

"%d:%d transaction failed %d, size %lld-%lld\n",

proc->pid, thread->pid, return_error,

(u64)tr->data_size, (u64)tr->offsets_size);

{

struct binder_transaction_log_entry *fe;

fe = binder_transaction_log_add(&binder_transaction_log_failed);

*fe = *e;

}

BUG_ON(thread->return_error != BR_OK);

if (in_reply_to) {

thread->return_error = BR_TRANSACTION_COMPLETE;

binder_send_failed_reply(in_reply_to, return_error);

} else

thread->return_error = return_error;

}

Since cmd == BC_TRANSACTION, reply is false, so the non-reply path runs; for our synchronous call that is the `else if (!(t->flags & TF_ONE_WAY))` branch.

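This function does the following:

- Obtains target_node, the target Binder object

- Obtains the proc object of the target

- Records the target's todo list and wait queue

- Creates t and tcomplete

- Performs the single copy of the data

- binder_translate_binder converts the binder object into a handle

- thread->transaction_stack = t, so that ServiceManager can find the client later

- t->work.type = BINDER_WORK_TRANSACTION; this is the work ServiceManager has to process

- tcomplete->type = BINDER_WORK_TRANSACTION_COMPLETE; this is what leads the client to suspend

- wake_up_interruptible wakes up ServiceManager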
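The last three steps of the list are easier to see in isolation. Below is a minimal user-space sketch, not the kernel code: `todo_queue`, `todo_add_tail`, `todo_pop`, `finish_transaction` and the `woken` flag are hypothetical stand-ins for the kernel's list_head queues and wait queues. It only shows that one work item lands on the target's queue and one on the sender's own queue.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical, simplified stand-ins for the kernel's work types. */
enum work_type { WORK_TRANSACTION = 1, WORK_TRANSACTION_COMPLETE = 2 };

struct work {
    enum work_type type;
    struct work *next;
};

/* A todo queue modelled as a singly linked FIFO. */
struct todo_queue {
    struct work *head;
    struct work *tail;
    int woken; /* set when a thread waiting on this queue would be woken */
};

/* Append a work item at the tail, like list_add_tail() does. */
static void todo_add_tail(struct todo_queue *q, struct work *w)
{
    w->next = NULL;
    if (q->tail)
        q->tail->next = w;
    else
        q->head = w;
    q->tail = w;
}

/* Remove and return the first work item, or NULL if the queue is empty. */
static struct work *todo_pop(struct todo_queue *q)
{
    struct work *w = q->head;
    if (!w)
        return NULL;
    q->head = w->next;
    if (!q->head)
        q->tail = NULL;
    w->next = NULL;
    return w;
}

/* Mirrors the tail of binder_transaction(): the transaction goes to the
 * target, the completion notice goes to the sender, the target is woken. */
static void finish_transaction(struct todo_queue *target,
                               struct todo_queue *sender,
                               struct work *t, struct work *tcomplete)
{
    assert(target && sender && t && tcomplete);
    t->type = WORK_TRANSACTION;
    todo_add_tail(target, t);
    tcomplete->type = WORK_TRANSACTION_COMPLETE;
    todo_add_tail(sender, tcomplete);
    target->woken = 1;
}
```

After `finish_transaction` runs, popping the target queue yields the transaction and popping the sender queue yields the completion item, which is exactly what the client reads next.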

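Next, let's look at how the client gets suspended.

Once the driver has finished the write half of the call in binder_thread_write, control returns to the caller, and since the thread now has data to read, binder_thread_read runs: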

static int binder_thread_read(struct binder_proc *proc,

struct binder_thread *thread,

binder_uintptr_t binder_buffer, size_t size,

binder_size_t *consumed, int non_block)

{

// Write BR_NOOP first

if (*consumed == 0) {

if (put_user(BR_NOOP, (uint32_t __user *)ptr))

return -EFAULT;

ptr += sizeof(uint32_t);

}

while (1) {

uint32_t cmd;

struct binder_transaction_data tr;

struct binder_work *w;

struct binder_transaction *t = NULL;

// The work item was queued on thread->todo earlier, so the list is not empty and list_first_entry returns a non-NULL w: the BINDER_WORK_TRANSACTION_COMPLETE item mentioned above

if (!list_empty(&thread->todo)) {

w = list_first_entry(&thread->todo, struct binder_work,

entry);

}

//

switch (w->type) {

case BINDER_WORK_TRANSACTION_COMPLETE: {

cmd = BR_TRANSACTION_COMPLETE;

if (put_user(cmd, (uint32_t __user *)ptr))

return -EFAULT;

ptr += sizeof(uint32_t);

binder_stat_br(proc, thread, cmd);

binder_debug(BINDER_DEBUG_TRANSACTION_COMPLETE,

"%d:%d BR_TRANSACTION_COMPLETE\n",

proc->pid, thread->pid);

list_del(&w->entry);

kfree(w);

binder_stats_deleted(BINDER_STAT_TRANSACTION_COMPLETE);

} break;

}

}

}

As noted, the work item was already added to the todo list, so the list is not empty and list_first_entry returns a valid w, which is the BINDER_WORK_TRANSACTION_COMPLETE item from before.

Inside the switch, cmd becomes BR_TRANSACTION_COMPLETE. Once that command has been written out, the read returns to talkWithDriver, which was called from waitForResponse, so let's go back there:

waitForResponse then handles cmd = BR_TRANSACTION_COMPLETE:

status_t IPCThreadState::waitForResponse(Parcel *reply, status_t *acquireResult)

{

switch (cmd) {

case BR_TRANSACTION_COMPLETE:

// Our call is synchronous, so reply is set and this branch is not taken; the while loop continues and calls talkWithDriver again

if (!reply && !acquireResult) goto finish;

break;

}

}

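Because our call is synchronous, the goto is not taken. The while loop continues, talkWithDriver is called again, and binder_thread_read is entered to read more data. This time there is nothing to read, so the thread blocks at ret = wait_event_freezable(thread->wait, binder_has_thread_work(thread)).

At this point the client is suspended, waiting for ServiceManager to finish its work and wake it up.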
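To make the loop behaviour concrete, here is a small sketch of the decision waitForResponse makes for each returned command. It is a model under stated assumptions, not the libbinder code: the `ret_cmd` enum values and the `wait_for_response` helper are hypothetical, and the command stream is passed in as an array instead of being read from the driver.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical command codes; the real BR_* values are ioctl-encoded. */
enum ret_cmd { RC_NOOP, RC_TRANSACTION_COMPLETE, RC_REPLY };

/* Walks a stream of returned commands the way the waitForResponse loop does.
 * expects_reply models "reply || acquireResult" in the original code.
 * Returns how many commands were consumed when the loop finished, or -1 if
 * the stream ran out first (the real thread would block in the driver). */
static int wait_for_response(const enum ret_cmd *cmds, size_t n,
                             int expects_reply)
{
    assert(cmds != NULL || n == 0);
    for (size_t i = 0; i < n; i++) {
        switch (cmds[i]) {
        case RC_TRANSACTION_COMPLETE:
            /* One-way callers are done here; synchronous callers keep going. */
            if (!expects_reply)
                return (int)(i + 1);
            break;
        case RC_REPLY:
            return (int)(i + 1);
        case RC_NOOP:
        default:
            break;
        }
    }
    return -1;
}
```

With the stream { RC_NOOP, RC_TRANSACTION_COMPLETE } a synchronous caller gets -1, which corresponds to the client going back into the driver and sleeping until the reply arrives.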

How ServiceManager handles the added service

Next, let's see how ServiceManager handles the service being added.

To do that, we need to recall where ServiceManager was suspended, as covered earlier. It sleeps in binder_thread_read, at this line:

ret = wait_event_freezable_exclusive(proc->wait, binder_has_proc_work(proc, thread));

Once woken, the work item ServiceManager has to process is BINDER_WORK_TRANSACTION, which leads to the following logic:

if (t->buffer->target_node) {

struct binder_node *target_node = t->buffer->target_node;

tr.target.ptr = target_node->ptr;

tr.cookie = target_node->cookie;

t->saved_priority = task_nice(current);

if (t->priority < target_node->min_priority &&

!(t->flags & TF_ONE_WAY))

binder_set_nice(t->priority);

else if (!(t->flags & TF_ONE_WAY) ||

t->saved_priority > target_node->min_priority)

binder_set_nice(target_node->min_priority);

cmd = BR_TRANSACTION;

} else {

tr.target.ptr = 0;

tr.cookie = 0;

cmd = BR_REPLY;

}

}

The command obtained here is BR_TRANSACTION, which the binder driver returns to user space. Let's look at how it is parsed, in the binder_parse function in binder.c:

int binder_parse(struct binder_state *bs, struct binder_io *bio,

uintptr_t ptr, size_t size, binder_handler func)

{

switch(cmd) {

case BR_TRANSACTION: {

struct binder_transaction_data *txn = (struct binder_transaction_data *) ptr;

if ((end - ptr) < sizeof(*txn)) {

ALOGE("parse: txn too small!\n");

return -1;

}

binder_dump_txn(txn);

if (func) {

unsigned rdata[256/4];

struct binder_io msg;

struct binder_io reply;

int res;

// Initialize reply

bio_init(&reply, rdata, sizeof(rdata), 4);

bio_init_from_txn(&msg, txn);

// Function pointer: points to the svcmgr_handler that was passed to binder_loop

res = func(bs, txn, &msg, &reply);

//

binder_send_reply(bs, &reply, txn->data.ptr.buffer, res);

}

ptr += sizeof(*txn);

break;

}

}

}

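This parsing step does three things:

- bio_init initializes reply

- res = func(bs, txn, &msg, &reply); calls the function pointer, which points to the svcmgr_handler passed to binder_loop (the handler through which all services are registered)

- binder_send_reply sends the result back

Let's step into svcmgr_handler. It reaches the registration logic and calls do_add_service: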
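The three steps can be sketched as a tiny dispatcher built around a function pointer. This is an illustration only: `reply_buf`, `sent_reply`, `parse_transaction` and `demo_handler` are hypothetical names, and the "send" step merely records what would be sent instead of writing to the driver.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical reply buffer, standing in for struct binder_io. */
struct reply_buf {
    char data[64];
    size_t len;
};

/* Records what the "send reply" step would hand to the driver. */
struct sent_reply {
    int sent;
    int status;
    size_t len;
};

/* Handler type, playing the role of binder_handler / svcmgr_handler. */
typedef int (*txn_handler)(unsigned code, const char *msg,
                           struct reply_buf *reply);

/* Step 1: start from an empty reply. */
static void reply_init(struct reply_buf *r)
{
    memset(r, 0, sizeof(*r));
}

/* Step 3: a non-zero status replaces the payload, as in binder_send_reply. */
static void send_reply(struct sent_reply *out, const struct reply_buf *r,
                       int status)
{
    out->sent = 1;
    out->status = status;
    out->len = status ? 0 : r->len;
}

/* Init the reply, call the handler (step 2), send the reply back. */
static void parse_transaction(unsigned code, const char *msg,
                              txn_handler func, struct sent_reply *out)
{
    struct reply_buf reply;

    assert(out != NULL);
    memset(out, 0, sizeof(*out));
    if (!func)
        return; /* no handler registered: nothing is sent */
    reply_init(&reply);
    int res = func(code, msg, &reply);
    send_reply(out, &reply, res);
}

/* Example handler: code 1 echoes the message, anything else fails. */
static int demo_handler(unsigned code, const char *msg,
                        struct reply_buf *reply)
{
    size_t n = strlen(msg);
    if (code != 1 || n >= sizeof(reply->data))
        return -1;
    memcpy(reply->data, msg, n);
    reply->len = n;
    return 0;
}
```

Passing the handler in as a parameter is what lets the same loop serve any binder service; ServiceManager simply plugs in svcmgr_handler.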

int svcmgr_handler(struct binder_state *bs,

struct binder_transaction_data *txn,

struct binder_io *msg,

struct binder_io *reply)

{

switch(txn->code) {

case SVC_MGR_ADD_SERVICE:

s = bio_get_string16(msg, &len);

if (s == NULL) {

return -1;

}

handle = bio_get_ref(msg);

allow_isolated = bio_get_uint32(msg) ? 1 : 0;

// Call this function to register the service

if (do_add_service(bs, s, len, handle, txn->sender_euid,

allow_isolated, txn->sender_pid))

return -1;

break;

}

}

Let's step into do_add_service:

int do_add_service(struct binder_state *bs,

const uint16_t *s, size_t len,

uint32_t handle, uid_t uid, int allow_isolated,

pid_t spid)

{

// Look up the service first

si = find_svc(s, len);

if (si) {

if (si->handle) {

ALOGE("add_service('%s',%x) uid=%d - ALREADY REGISTERED, OVERRIDE\n",

str8(s, len), handle, uid);

svcinfo_death(bs, si);

}

si->handle = handle;

} else {

si = malloc(sizeof(*si) + (len + 1) * sizeof(uint16_t));

if (!si) {

ALOGE("add_service('%s',%x) uid=%d - OUT OF MEMORY\n",

str8(s, len), handle, uid);

return -1;

}

si->handle = handle;

si->len = len;

memcpy(si->name, s, (len + 1) * sizeof(uint16_t));

si->name[len] = '\0';

si->death.func = (void*) svcinfo_death;

si->death.ptr = si;

si->allow_isolated = allow_isolated;

si->next = svclist;

// Save the service at the head of svclist

svclist = si;

}

}

So ServiceManager keeps all registered services in svclist, a singly linked list.

Now let's go back to binder_send_reply and see which commands it sends:
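The find-then-insert pattern of do_add_service is easy to try out in user space. The sketch below is a simplification under stated assumptions: names are plain `char` strings instead of UTF-16, the death notification and permission checks are left out, and `svc_entry`, `find_service`, `add_service` and `free_services` are hypothetical names.

```c
#include <assert.h>
#include <stdint.h>
#include <stdlib.h>
#include <string.h>

#define SVC_NAME_MAX 32 /* assumed limit for this sketch */

/* One registered service: a name mapped to a binder handle. */
struct svc_entry {
    struct svc_entry *next;
    uint32_t handle;
    char name[SVC_NAME_MAX];
};

/* Linear search through the list, like find_svc(). */
static struct svc_entry *find_service(struct svc_entry *list, const char *name)
{
    for (struct svc_entry *si = list; si; si = si->next)
        if (strcmp(si->name, name) == 0)
            return si;
    return NULL;
}

/* Registers a service: overrides the handle if the name already exists,
 * otherwise pushes a new entry at the head. Returns 0 on success, -1 on error. */
static int add_service(struct svc_entry **list, const char *name,
                       uint32_t handle)
{
    assert(list != NULL);
    if (!name || handle == 0 || strlen(name) >= SVC_NAME_MAX)
        return -1;

    struct svc_entry *si = find_service(*list, name);
    if (si) {
        si->handle = handle; /* already registered: override */
        return 0;
    }

    si = malloc(sizeof(*si));
    if (!si)
        return -1;
    si->handle = handle;
    strcpy(si->name, name); /* length was checked above */
    si->next = *list;
    *list = si;
    return 0;
}

/* Releases every entry and resets the list head. */
static void free_services(struct svc_entry **list)
{
    while (*list) {
        struct svc_entry *next = (*list)->next;
        free(*list);
        *list = next;
    }
}
```

Inserting at the head keeps registration O(1); lookup is a linear scan, which is acceptable for the small number of system services involved.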

void binder_send_reply(struct binder_state *bs,

struct binder_io *reply,

binder_uintptr_t buffer_to_free,

int status)

{

struct {

uint32_t cmd_free;

binder_uintptr_t buffer;

uint32_t cmd_reply;

struct binder_transaction_data txn;

} __attribute__((packed)) data;

data.cmd_free = BC_FREE_BUFFER;

data.buffer = buffer_to_free;

data.cmd_reply = BC_REPLY;

data.txn.target.ptr = 0;

data.txn.cookie = 0;

data.txn.code = 0;

if (status) {

data.txn.flags = TF_STATUS_CODE;

data.txn.data_size = sizeof(int);

data.txn.offsets_size = 0;

data.txn.data.ptr.buffer = (uintptr_t)&status;

data.txn.data.ptr.offsets = 0;

} else {

data.txn.flags = 0;

data.txn.data_size = reply->data - reply->data0;

data.txn.offsets_size = ((char*) reply->offs) - ((char*) reply->offs0);

data.txn.data.ptr.buffer = (uintptr_t)reply->data0;

data.txn.data.ptr.offsets = (uintptr_t)reply->offs0;

}

binder_write(bs, &data, sizeof(data));

}
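The packed struct in binder_send_reply is a way of placing two commands back to back in one write buffer. The following sketch shows the idea with hypothetical command values and a deliberately tiny payload (`reply_payload` stands in for struct binder_transaction_data); `parse_commands` plays the role of the driver walking the buffer. It relies on `__attribute__((packed))`, as the original does, so it assumes GCC or Clang.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical command values; the real BC_* codes are ioctl-encoded. */
enum { CMD_FREE_BUFFER = 1, CMD_REPLY = 2 };

/* Tiny stand-in for struct binder_transaction_data. */
struct reply_payload {
    uint32_t flags;
    int32_t status;
};

/* Two commands laid out back to back with no padding in between. */
struct write_buf {
    uint32_t cmd_free;
    uint64_t buffer;            /* argument of CMD_FREE_BUFFER */
    uint32_t cmd_reply;
    struct reply_payload txn;   /* argument of CMD_REPLY */
} __attribute__((packed));

/* Walks the buffer command by command, as a driver-side parser would.
 * Stores each fully present command in cmds and returns how many were found. */
static size_t parse_commands(const uint8_t *p, size_t size,
                             uint32_t *cmds, size_t max)
{
    assert(p != NULL && cmds != NULL);
    const uint8_t *end = p + size;
    size_t n = 0;

    while (n < max && (size_t)(end - p) >= sizeof(uint32_t)) {
        uint32_t cmd;
        size_t arg_size;

        memcpy(&cmd, p, sizeof(cmd)); /* memcpy avoids unaligned reads */
        p += sizeof(cmd);

        if (cmd == CMD_FREE_BUFFER)
            arg_size = sizeof(uint64_t);
        else if (cmd == CMD_REPLY)
            arg_size = sizeof(struct reply_payload);
        else
            break; /* unknown command: stop parsing */

        if ((size_t)(end - p) < arg_size)
            break; /* truncated argument */
        p += arg_size;
        cmds[n++] = cmd;
    }
    return n;
}
```

Without the packed attribute the compiler would pad after cmd_free to align the 64-bit field, and the parser would read the padding as part of the next argument.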

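It packs BC_FREE_BUFFER and BC_REPLY into one buffer and passes it to binder_write, which has the driver perform a write. That ends up in the driver's binder_thread_write function, where BC_REPLY is handled: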

case BC_REPLY: {

struct binder_transaction_data tr;

if (copy_from_user(&tr, ptr, sizeof(tr)))

return -EFAULT;

ptr += sizeof(tr);

binder_transaction(proc, thread, &tr,

cmd == BC_REPLY, 0);

break;

}

This runs binder_transaction again, this time with cmd equal to BC_REPLY. The function was analyzed above, so we can go straight to the reply path:

t->work.type = BINDER_WORK_TRANSACTION;

list_add_tail(&t->work.entry, target_list);

tcomplete->type = BINDER_WORK_TRANSACTION_COMPLETE;

list_add_tail(&tcomplete->entry, &thread->todo);

if (target_wait)

wake_up_interruptible(target_wait);

At this point the reply is delivered to the client, and the client is woken up.

Next, let's look at how the receiving process handles a transact.

Here we go directly to the executeCommand function of IPCThreadState:

status_t IPCThreadState::executeCommand(int32_t cmd)

{

case BR_TRANSACTION:

{

if (tr.target.ptr) {

if (reinterpret_cast<RefBase::weakref_type*>(

tr.target.ptr)->attemptIncStrong(this)) {

error = reinterpret_cast<BBinder*>(tr.cookie)->transact(tr.code, buffer,&reply, tr.flags);

reinterpret_cast<BBinder*>(tr.cookie)->decStrong(this);

} else {

error = UNKNOWN_TRANSACTION;

}

}

}

}

This calls the transact function of BBinder. Let's look at it:

status_t BBinder::transact(

uint32_t code, const Parcel& data, Parcel* reply, uint32_t flags)

{

data.setDataPosition(0);

status_t err = NO_ERROR;

switch (code) {

case PING_TRANSACTION:

reply->writeInt32(pingBinder());

break;

default:

err = onTransact(code, data, reply, flags);

break;

}

if (reply != NULL) {

reply->setDataPosition(0);

}

return err;

}
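The shape of BBinder::transact (handle the built-in codes, hand everything else to an overridable onTransact) can be sketched in plain C with a function pointer in place of the virtual method. All names and code values here (`binder_obj`, `binder_transact`, `doubler_on_transact`, `CODE_PING`, code 100) are hypothetical and chosen only for the illustration.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical codes and status values for this sketch. */
enum { CODE_PING = 1 };
enum { STATUS_OK = 0, STATUS_UNKNOWN = -1 };

/* A binder object whose on_transact slot plays the role of the
 * virtual onTransact() that subclasses override. */
struct binder_obj {
    int (*on_transact)(struct binder_obj *self, uint32_t code,
                       int32_t in, int32_t *out);
};

/* Built-in codes are answered here; everything else is delegated. */
static int binder_transact(struct binder_obj *b, uint32_t code,
                           int32_t in, int32_t *out)
{
    assert(b != NULL);
    switch (code) {
    case CODE_PING:
        if (out)
            *out = STATUS_OK;
        return STATUS_OK;
    default:
        if (!b->on_transact)
            return STATUS_UNKNOWN;
        return b->on_transact(b, code, in, out);
    }
}

/* Example "subclass": code 100 doubles its input. */
static int doubler_on_transact(struct binder_obj *self, uint32_t code,
                               int32_t in, int32_t *out)
{
    (void)self;
    if (code != 100)
        return STATUS_UNKNOWN;
    if (out)
        *out = in * 2;
    return STATUS_OK;
}
```

In the real code the delegated call is where JavaBBinder takes over and crosses into Java.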

Next, onTransact is called. Here it resolves to the onTransact function of JavaBBinder in android_util_Binder.cpp:

virtual status_t onTransact(

uint32_t code, const Parcel& data, Parcel* reply, uint32_t flags = 0)

{

jboolean res = env->CallBooleanMethod(mObject, gBinderOffsets.mExecTransact,

code, reinterpret_cast<jlong>(&data), reinterpret_cast<jlong>(reply), flags);

}

This ultimately calls the execTransact method in Binder.java.

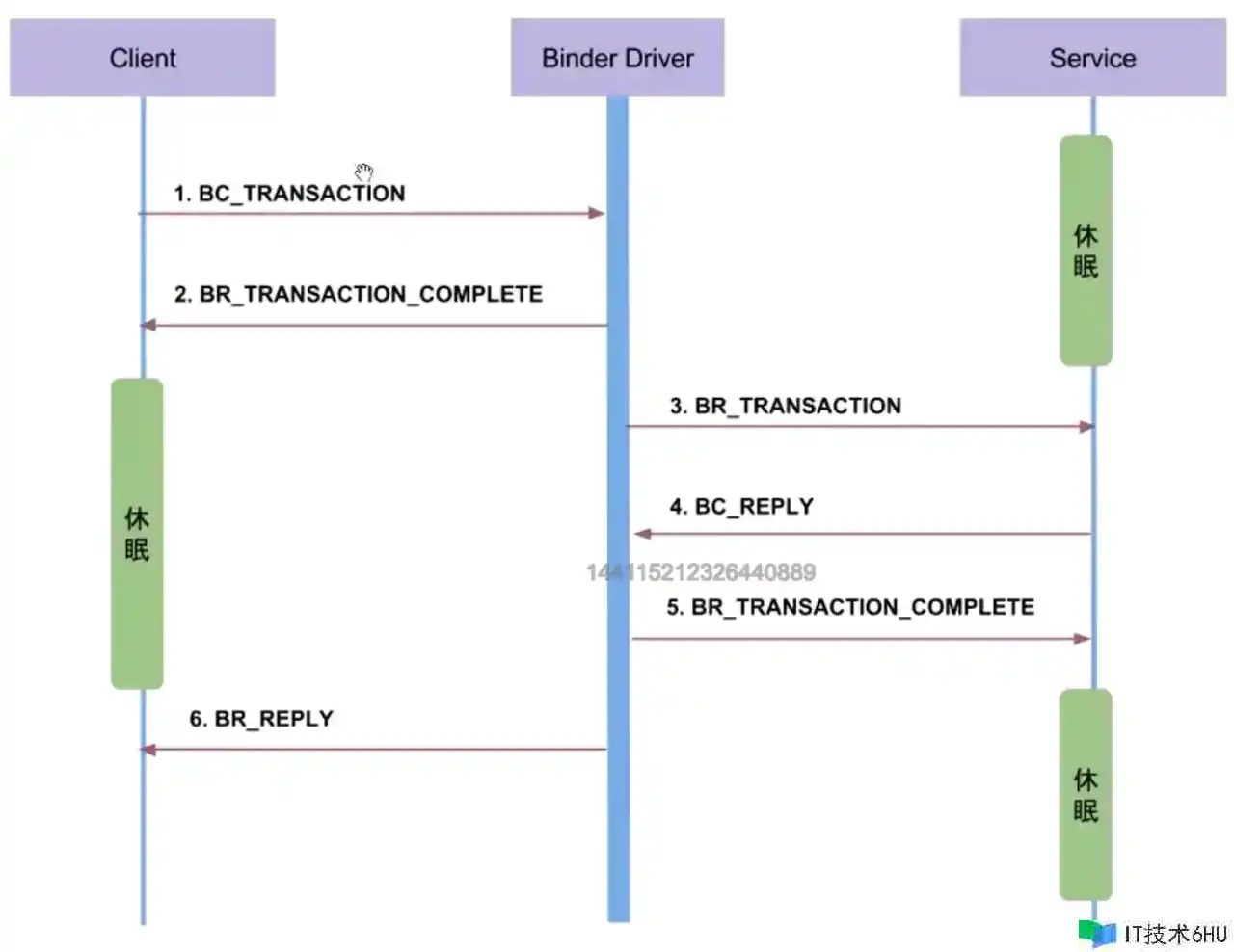

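The diagram above shows the overall flow.

That completes the walkthrough of how a process registers and obtains services.

Next chapter preview

A brief look at the Dalvik VM process system.

Likes and follows welcome

You made it to the end, so please leave a follow and a like. Your support is my biggest motivation.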