Keep creating, keep growing! This is day 17 of my participation in the "Daily New Plan: June Writing Challenge".

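The ShowMeAI Daily series has been upgraded! It covers AI Tools & Frameworks | Projects & Code | Blog Posts & Sharing | Data & Resources | Research & Papers. See the article archive, and subscribe to the topic #ShowMeAI资讯日报 in the official account to receive the latest daily updates. See Topic Collections & E-Monthly to browse the full set for each topic.

1. Tools & Frameworks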

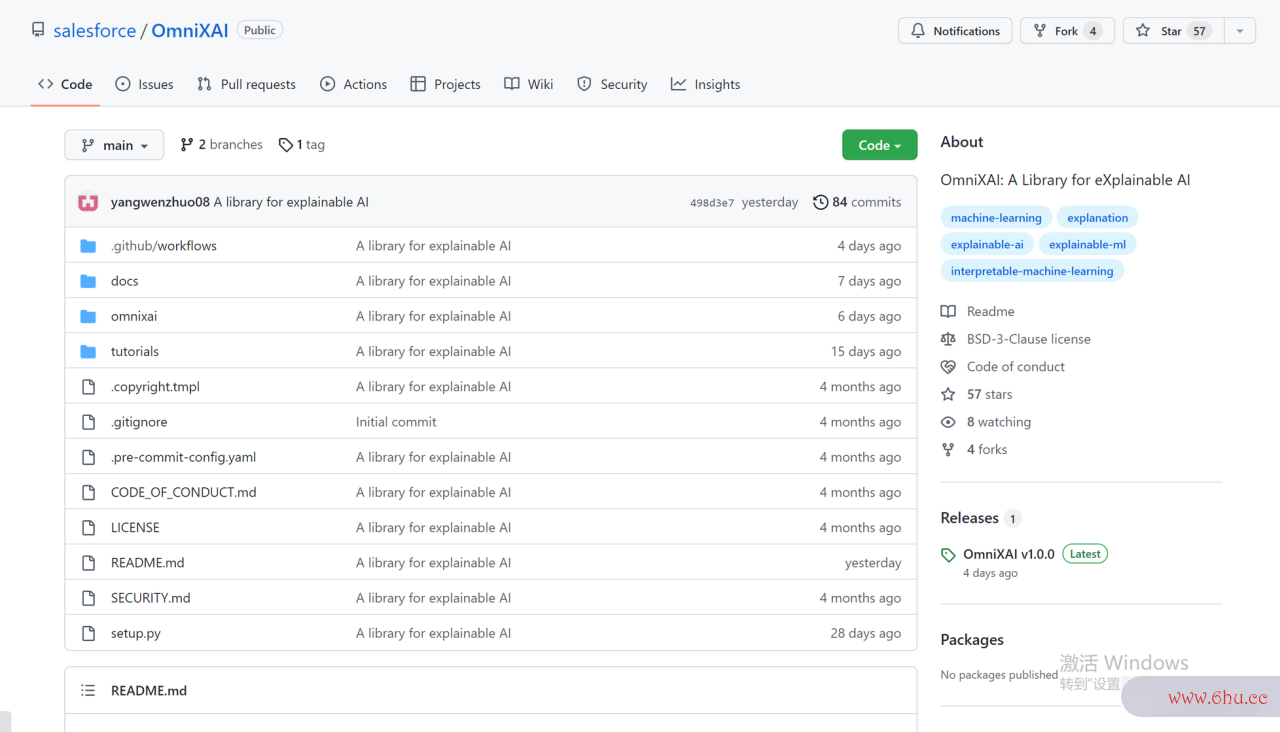

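Library: OmniXAI – a development library for explainable AI

tags: [AI, explainability]

‘OmniXAI: A Library for Explainable AI – OmniXAI: A Library for eXplainable AI’ by Salesforce

GitHub: github.com/salesforce/…

Library: WeTTS – a production-grade end-to-end text-to-speech toolkit

tags: [speech synthesis, end-to-end]

‘WeTTS – Production First and Production Ready End-to-End Text-to-Speech Toolkit’ by WeNet Open Source Community

GitHub: github.com/wenet-e2e/w…

Library: Honor of Kings Game Environment – a Honor of Kings game environment from Tencent AI Lab that supports reinforcement learning research

tags: [reinforcement learning, Honor of Kings, environment]

Includes the 1v1 game core, a reinforcement learning framework, and a PPO implementation built on the training framework.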

‘Honor of Kings Game Environment’ by tencent-ailab

GitHub: github.com/tencent-ail…

Library: Adversarial Robustness Toolbox (ART) – a Python library for machine learning security / adversarial robustness toolbox

‘Adversarial Robustness Toolbox (ART) v1.5 – Adversarial Robustness Toolbox (ART) – Python Library for Machine Learning Security – Evasion, Poisoning, Extraction, Inference’ by Trusted-AI

GitHub: github.com/Trusted-AI/…

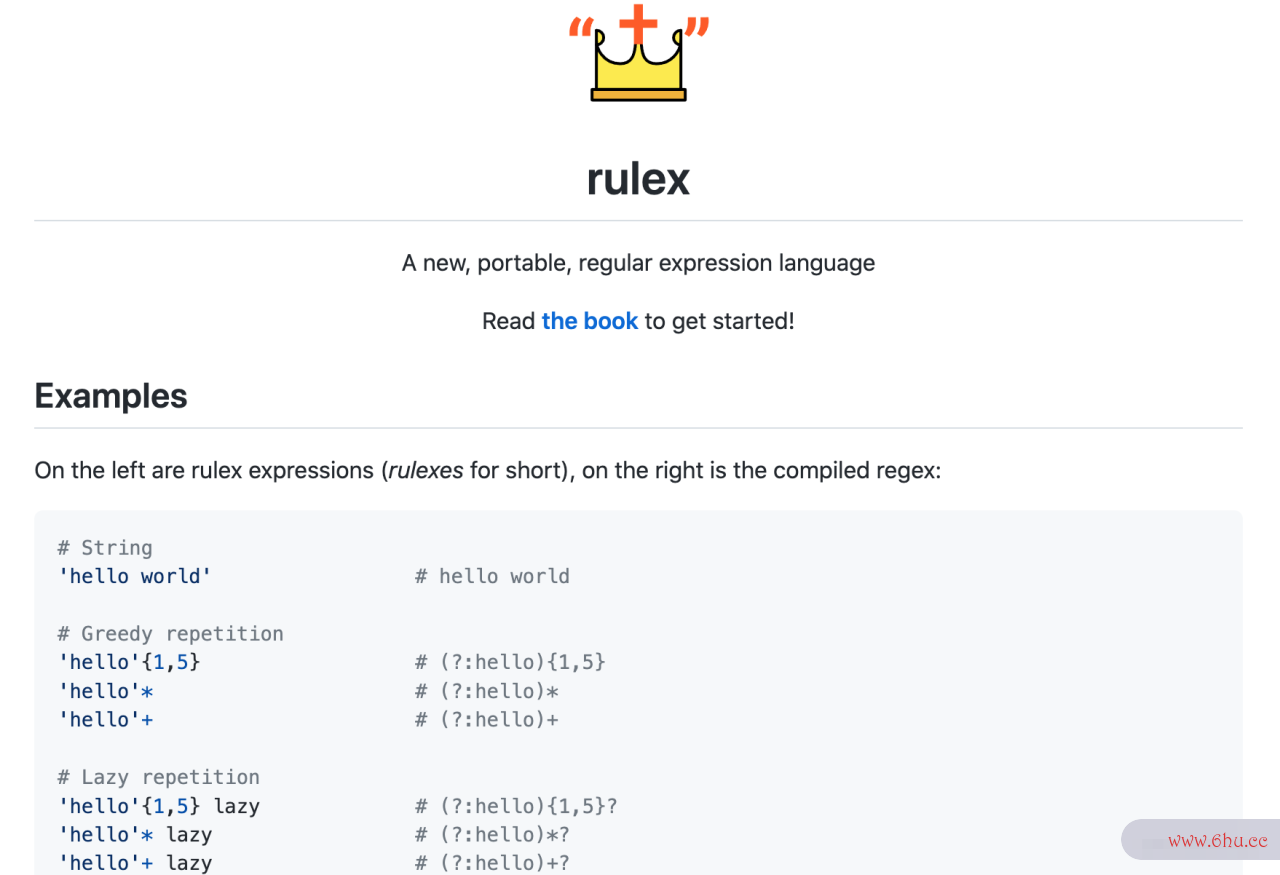

Tool language: rulex – a new, portable regular expression language

tags: [regular expressions, portable, language]

‘rulex – A new, portable, regular expression language’

GitHub: github.com/rulex-rs/ru…

Tool: Tasqueue – a simple, customizable distributed job/worker implemented in Go

tags: [Go, distributed, job/worker]

‘Tasqueue – A simple, customizable distributed job/worker in Go’ by Lakshay Kalbhor

GitHub: github.com/kalbhor/Tas…

2. Projects & Code

Project: bytetrack-opencv-onnxruntime – an object tracking deployment project

tags: [object tracking, bytetrack, opencv]

Deploys YOLOX+ByteTrack object tracking with OpenCV and with ONNXRuntime, with programs in both C++ and Python.

GitHub: github.com/hpc203/byte…

3. Blog Posts & Sharing

Free book: Reinforcement Learning: An Introduction (2nd edition), latest version

‘Reinforcement Learning: An Introduction’ by Richard S. Sutton, Andrew G. Barto

Link: www.incompleteideas.net/book/the-bo…

pdf: www.incompleteideas.net/book/RLbook…

4. Data & Resources

Resource: a collection of lecture notes from a foundational course on algorithms for big data

‘Sketching Algorithms for Big Data | Sketching Algorithms’

Link: www.sketchingbigdata.org/fall17/lec/

5. Research & Papers

Reply with the keyword 日报 in the official account to get the curated June paper collection for free.

Paper: Recurrent Video Restoration Transformer with Guided Deformable Attention

Title: Recurrent Video Restoration Transformer with Guided Deformable Attention

Date: 5 Jun 2022

Field: Computer Vision

Tasks: Deblurring, Denoising, Super-Resolution, Video Restoration, Video Super-Resolution

Paper link: arxiv.org/abs/2206.02…

Code: github.com/jingyunlian…

Authors: Jingyun Liang, Yuchen Fan, Xiaoyu Xiang, Rakesh Ranjan, Eddy Ilg, Simon Green, JieZhang Cao, Kai Zhang, Radu Timofte, Luc van Gool

Summary: Specifically, RVRT divides the video into multiple clips and uses the previously inferred clip feature to estimate the subsequent clip feature.

Abstract: Video restoration aims at restoring multiple high-quality frames from multiple low-quality frames. Existing video restoration methods generally fall into two extreme cases, i.e., they either restore all frames in parallel or restore the video frame by frame in a recurrent way, which would result in different merits and drawbacks. Typically, the former has the advantage of temporal information fusion. However, it suffers from large model size and intensive memory consumption; the latter has a relatively small model size as it shares parameters across frames; however, it lacks long-range dependency modeling ability and parallelizability. In this paper, we attempt to integrate the advantages of the two cases by proposing a recurrent video restoration transformer, namely RVRT. RVRT processes local neighboring frames in parallel within a globally recurrent framework which can achieve a good trade-off between model size, effectiveness, and efficiency. Specifically, RVRT divides the video into multiple clips and uses the previously inferred clip feature to estimate the subsequent clip feature. Within each clip, different frame features are jointly updated with implicit feature aggregation. Across different clips, the guided deformable attention is designed for clip-to-clip alignment, which predicts multiple relevant locations from the whole inferred clip and aggregates their features by the attention mechanism. Extensive experiments on video super-resolution, deblurring, and denoising show that the proposed RVRT achieves state-of-the-art performance on benchmark datasets with balanced model size, testing memory and runtime.
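The clip-wise recurrence described in the RVRT abstract can be illustrated with a toy sketch. The code below is not RVRT itself: `split_into_clips`, `update_clip`, `restore`, and the averaging rule are hypothetical stand-ins (scalar "features" instead of tensors, a plain average instead of guided deformable attention). It only shows frames within a clip being handled together while information flows from one clip to the next.

```python
from typing import List, Optional


def split_into_clips(frames: List[float], clip_len: int) -> List[List[float]]:
    """Split a frame sequence into consecutive clips of at most clip_len frames."""
    if clip_len <= 0:
        raise ValueError("clip_len must be positive")
    return [frames[i:i + clip_len] for i in range(0, len(frames), clip_len)]


def update_clip(clip: List[float], prev_feature: Optional[List[float]]) -> List[float]:
    """Hypothetical per-clip update: all frames of the clip are processed together.

    Each output mixes the frame with the mean of the previous clip's feature.
    A real model would use attention-based alignment here instead.
    """
    if prev_feature is None:
        return list(clip)
    context = sum(prev_feature) / len(prev_feature)
    return [0.5 * frame + 0.5 * context for frame in clip]


def restore(frames: List[float], clip_len: int) -> List[float]:
    """Recurrent across clips; within a clip, frames are updated in one call."""
    outputs: List[float] = []
    prev_feature: Optional[List[float]] = None
    for clip in split_into_clips(frames, clip_len):
        prev_feature = update_clip(clip, prev_feature)
        outputs.extend(prev_feature)
    return outputs
```

With `clip_len=1` this degenerates to frame-by-frame recurrence, and with `clip_len=len(frames)` to fully parallel processing, which are the two extremes the abstract contrasts.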

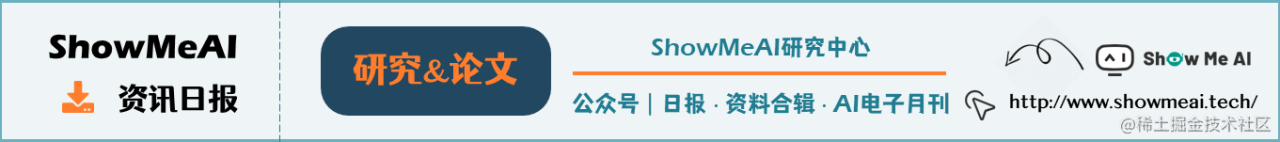

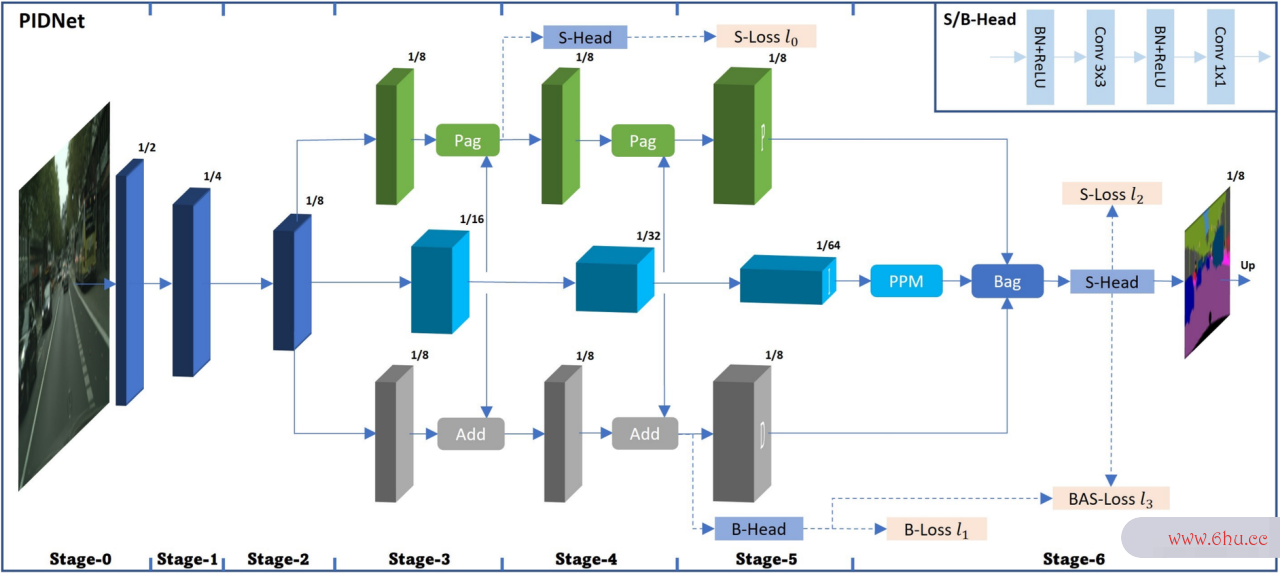

Paper: PIDNet: A Real-time Semantic Segmentation Network Inspired from PID Controller

Title: PIDNet: A Real-time Semantic Segmentation Network Inspired from PID Controller

Date: 4 Jun 2022

Field: Computer Vision

Tasks: Real-Time Semantic Segmentation, Semantic Segmentation

Paper link: https://arxiv.org/abs/2206.02066

Code: github.com/XuJiacong/P…

Authors: Jiacong Xu, Zixiang Xiong, Shankar P. Bhattacharyya

Summary: However, direct fusion of low-level details and high-level semantics will lead to a phenomenon that the detailed features are easily overwhelmed by surrounding contextual information, namely overshoot in this paper, which limits the improvement of the accuracy of existed two-branch models.

Abstract: Two-branch network architecture has shown its efficiency and effectiveness for real-time semantic segmentation tasks. However, direct fusion of low-level details and high-level semantics will lead to a phenomenon that the detailed features are easily overwhelmed by surrounding contextual information, namely overshoot in this paper, which limits the improvement of the accuracy of existed two-branch models. In this paper, we bridge a connection between Convolutional Neural Network (CNN) and Proportional-Integral-Derivative (PID) controller and reveal that the two-branch network is nothing but a Proportional-Integral (PI) controller, which inherently suffers from the similar overshoot issue. To alleviate this issue, we propose a novel three-branch network architecture: PIDNet, which possesses three branches to parse the detailed, context and boundary information (derivative of semantics), respectively, and employs boundary attention to guide the fusion of detailed and context branches in final stage. The family of PIDNets achieve the best trade-off between inference speed and accuracy and their test accuracy surpasses all the existing models with similar inference speed on Cityscapes, CamVid and COCO-Stuff datasets. Especially, PIDNet-S achieves 78.6% mIOU with inference speed of 93.2 FPS on Cityscapes test set and 81.6% mIOU with speed of 153.7 FPS on CamVid test set.
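For readers unfamiliar with the control-theory analogy, here is a minimal sketch of a textbook discrete PID controller. It is not the PIDNet architecture; the class name and the gains are illustrative. Setting `kd=0` gives the PI controller that the authors compare two-branch networks to, while the derivative term reacts to how fast the error is changing, which loosely corresponds to the "derivative of semantics" role the abstract gives to the boundary branch.

```python
class PIDController:
    """Textbook discrete PID law: u = Kp*e + Ki*sum(e*dt) + Kd*(e - e_prev)/dt."""

    def __init__(self, kp: float, ki: float, kd: float) -> None:
        self.kp = kp
        self.ki = ki
        self.kd = kd
        self.integral = 0.0      # running sum of error * dt
        self.prev_error = 0.0    # error seen at the previous step

    def step(self, error: float, dt: float) -> float:
        """Return the control output for the current error sample."""
        if dt <= 0:
            raise ValueError("dt must be positive")
        self.integral += error * dt
        derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative
```

When the error is shrinking, the derivative term is negative and pulls the output down, which is the usual mechanism by which a D term damps the overshoot of a PI loop.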

Paper: SNAKE: Shape-aware Neural 3D Keypoint Field

Title: SNAKE: Shape-aware Neural 3D Keypoint Field

Date: 3 Jun 2022

Field: Computer Vision

Tasks: Keypoint Detection

Paper link: arxiv.org/abs/2206.01…

Code: github.com/zhongcl-thu…

Authors: Chengliang Zhong, Peixing You, Xiaoxue Chen, Hao Zhao, Fuchun Sun, Guyue Zhou, Xiaodong Mu, Chuang Gan, Wenbing Huang

Summary: Detecting 3D keypoints from point clouds is important for shape reconstruction, while this work investigates the dual question: can shape reconstruction benefit 3D keypoint detection?

Abstract: Detecting 3D keypoints from point clouds is important for shape reconstruction, while this work investigates the dual question: can shape reconstruction benefit 3D keypoint detection? Existing methods either seek salient features according to statistics of different orders or learn to predict keypoints that are invariant to transformation. Nevertheless, the idea of incorporating shape reconstruction into 3D keypoint detection is under-explored. We argue that this is restricted by former problem formulations. To this end, a novel unsupervised paradigm named SNAKE is proposed, which is short for shape-aware neural 3D keypoint field. Similar to recent coordinate-based radiance or distance field, our network takes 3D coordinates as inputs and predicts implicit shape indicators and keypoint saliency simultaneously, thus naturally entangling 3D keypoint detection and shape reconstruction. We achieve superior performance on various public benchmarks, including standalone object datasets ModelNet40, KeypointNet, SMPL meshes and scene-level datasets 3DMatch and Redwood. Intrinsic shape awareness brings several advantages as follows. (1) SNAKE generates 3D keypoints consistent with human semantic annotation, even without such supervision. (2) SNAKE outperforms counterparts in terms of repeatability, especially when the input point clouds are down-sampled. (3) the generated keypoints allow accurate geometric registration, notably in a zero-shot setting. Codes are available at github.com/zhongcl-thu…

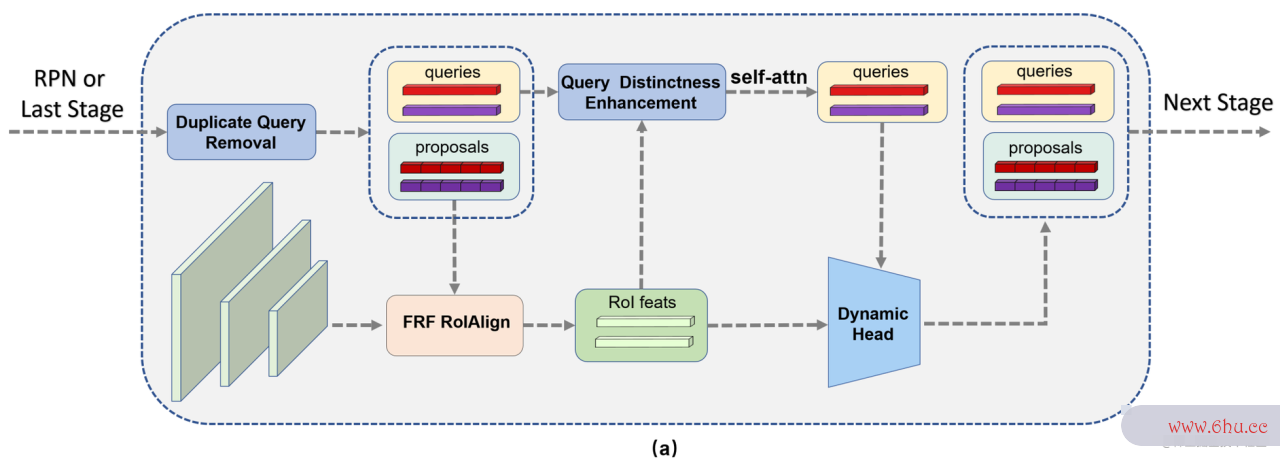

Paper: What Are Expected Queries in End-to-End Object Detection?

Title: What Are Expected Queries in End-to-End Object Detection?

Date: 2 Jun 2022

Field: Computer Vision

Tasks: Instance Segmentation, Object Detection, Semantic Segmentation

Paper link: arxiv.org/abs/2206.01…

Code: github.com/jshilong/dd…

Authors: Shilong Zhang, Xinjiang Wang, Jiaqi Wang, Jiangmiao Pang, Kai Chen

Summary: As both sparse and dense queries are imperfect, then what are expected queries in end-to-end object detection?

Abstract: End-to-end object detection is rapidly progressed after the emergence of DETR. DETRs use a set of sparse queries that replace the dense candidate boxes in most traditional detectors. In comparison, the sparse queries cannot guarantee a high recall as dense priors. However, making queries dense is not trivial in current frameworks. It not only suffers from heavy computational cost but also difficult optimization. As both sparse and dense queries are imperfect, then what are expected queries in end-to-end object detection? This paper shows that the expected queries should be Dense Distinct Queries (DDQ). Concretely, we introduce dense priors back to the framework to generate dense queries. A duplicate query removal pre-process is applied to these queries so that they are distinguishable from each other. The dense distinct queries are then iteratively processed to obtain final sparse outputs. We show that DDQ is stronger, more robust, and converges faster. It obtains 44.5 AP on the MS COCO detection dataset with only 12 epochs. DDQ is also robust as it outperforms previous methods on both object detection and instance segmentation tasks on various datasets. DDQ blends advantages from traditional dense priors and recent end-to-end detectors. We hope it can serve as a new baseline and inspires researchers to revisit the complementary between traditional methods and end-to-end detectors. The source code is publicly available at github.com/jshilong/DD…
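The DDQ abstract does not say how duplicate queries are removed. One common way to make dense candidates distinct is greedy IoU-based non-maximum suppression, sketched below as an assumption rather than as the paper's exact procedure. The function names and the 0.5 threshold are illustrative.

```python
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2), with x2 >= x1 and y2 >= y1


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def remove_duplicates(
    boxes: Sequence[Box], scores: Sequence[float], iou_threshold: float = 0.5
) -> List[int]:
    """Greedy NMS: keep the highest-scoring box, drop boxes that overlap it too much.

    Returns the indices of the kept boxes, ordered by descending score.
    """
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep: List[int] = []
    for i in order:
        if all(iou(boxes[i], boxes[j]) <= iou_threshold for j in keep):
            keep.append(i)
    return keep
```

For example, two heavily overlapping candidates around the same object collapse to the higher-scoring one, while a candidate elsewhere in the image is kept.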

Paper: YOLO-Pose: Enhancing YOLO for Multi Person Pose Estimation Using Object Keypoint Similarity Loss

Title: YOLO-Pose: Enhancing YOLO for Multi Person Pose Estimation Using Object Keypoint Similarity Loss

Date: 14 Apr 2022

Field: Computer Vision

Tasks: Multi-Person Pose Estimation, Object Detection, Pose Estimation

Paper link: arxiv.org/abs/2204.06…

Code: github.com/texasinstru… , github.com/texasinstru…

Authors: Debapriya Maji, Soyeb Nagori, Manu Mathew, Deepak Poddar

Summary: All experiments and results reported in this paper are without any test time augmentation, unlike traditional approaches that use flip-test and multi-scale testing to boost performance.

Abstract: We introduce YOLO-pose, a novel heatmap-free approach for joint detection, and 2D multi-person pose estimation in an image based on the popular YOLO object detection framework. Existing heatmap based two-stage approaches are sub-optimal as they are not end-to-end trainable and training relies on a surrogate L1 loss that is not equivalent to maximizing the evaluation metric, i.e. Object Keypoint Similarity (OKS). Our framework allows us to train the model end-to-end and optimize the OKS metric itself. The proposed model learns to jointly detect bounding boxes for multiple persons and their corresponding 2D poses in a single forward pass and thus bringing in the best of both top-down and bottom-up approaches. Proposed approach doesn’t require the postprocessing of bottom-up approaches to group detected keypoints into a skeleton as each bounding box has an associated pose, resulting in an inherent grouping of the keypoints. Unlike top-down approaches, multiple forward passes are done away with since all persons are localized along with their pose in a single inference. YOLO-pose achieves new state-of-the-art results on COCO validation (90.2% AP50) and test-dev set (90.3% AP50), surpassing all existing bottom-up approaches in a single forward pass without flip test, multi-scale testing, or any other test time augmentation. All experiments and results reported in this paper are without any test time augmentation, unlike traditional approaches that use flip-test and multi-scale testing to boost performance. Our training codes will be made publicly available at github.com/TexasInstru… and github.com/TexasInstru…
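The Object Keypoint Similarity metric named in the YOLO-Pose abstract can be written out in a few lines. The sketch below follows the usual COCO-style definition, where each labeled keypoint contributes exp(-d² / (2·s²·k²)), with d the distance between prediction and ground truth, s² the object area, and k a per-keypoint constant. The function name and argument layout are illustrative. A loss in the spirit of the paper could be 1 − OKS, but the abstract does not give the formula, so treat that as an assumption.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def object_keypoint_similarity(
    pred: Sequence[Point],
    gt: Sequence[Point],
    labeled: Sequence[bool],
    area: float,
    k: Sequence[float],
) -> float:
    """Mean over labeled keypoints of exp(-d^2 / (2 * area * k_i^2)).

    `area` plays the role of s^2 (object scale squared); `k` holds the
    per-keypoint constants that control how fast similarity falls off.
    Returns 0.0 when no keypoint is labeled.
    """
    if area <= 0:
        raise ValueError("area must be positive")
    total = 0.0
    count = 0
    for (px, py), (gx, gy), is_labeled, ki in zip(pred, gt, labeled, k):
        if not is_labeled:
            continue  # unlabeled keypoints do not contribute
        d2 = (px - gx) ** 2 + (py - gy) ** 2
        total += math.exp(-d2 / (2.0 * area * ki ** 2))
        count += 1
    return total / count if count else 0.0
```

Because the distance is normalized by object area, the same pixel error costs more on a small person than on a large one, which a plain L1 loss on coordinates does not capture.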

Paper: On Bridging Generic and Personalized Federated Learning for Image Classification

Title: On Bridging Generic and Personalized Federated Learning for Image Classification

Date: ICLR 2022

Field: Computer Vision

Tasks: Federated Learning, Image Classification, Personalized Federated Learning

Paper link: arxiv.org/abs/2107.00…

Code: github.com/TsingZ0/PFL…

Authors: Hong-You Chen, Wei-Lun Chao

Summary: On the one hand, we introduce a family of losses that are robust to non-identical class distributions, enabling clients to train a generic predictor with a consistent objective across them.

Abstract: Federated learning is promising for its capability to collaboratively train models with multiple clients without accessing their data, but vulnerable when clients’ data distributions diverge from each other. This divergence further leads to a dilemma: “Should we prioritize the learned model’s generic performance (for future use at the server) or its personalized performance (for each client)?” These two, seemingly competing goals have divided the community to focus on one or the other, yet in this paper we show that it is possible to approach both at the same time. Concretely, we propose a novel federated learning framework that explicitly decouples a model’s dual duties with two prediction tasks. On the one hand, we introduce a family of losses that are robust to non-identical class distributions, enabling clients to train a generic predictor with a consistent objective across them. On the other hand, we formulate the personalized predictor as a lightweight adaptive module that is learned to minimize each client’s empirical risk on top of the generic predictor. With this two-loss, two-predictor framework which we name Federated Robust Decoupling (Fed-RoD), the learned model can simultaneously achieve state-of-the-art generic and personalized performance, essentially bridging the two tasks.
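A minimal sketch of the two-predictor idea follows. It assumes the personalized prediction is formed by adding a small per-client correction to the shared generic logits. This additive form, the linear heads, and all names are assumptions made for illustration, since the abstract only says the personalized module is learned "on top of" the generic predictor.

```python
from typing import List

Matrix = List[List[float]]  # one row of weights per class


def linear_head(features: List[float], weights: Matrix) -> List[float]:
    """One logit per class: dot product of the features with that class's weights."""
    return [sum(w * x for w, x in zip(row, features)) for row in weights]


def generic_logits(features: List[float], shared_weights: Matrix) -> List[float]:
    """Prediction of the shared head, identical for every client."""
    return linear_head(features, shared_weights)


def personalized_logits(
    features: List[float], shared_weights: Matrix, client_weights: Matrix
) -> List[float]:
    """Generic logits plus a lightweight client-specific correction (assumed additive)."""
    shared = linear_head(features, shared_weights)
    correction = linear_head(features, client_weights)
    return [s + c for s, c in zip(shared, correction)]
```

Under this reading, only `client_weights` would be fit to a client's local data, while `shared_weights` stays common to all clients and serves the generic task.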

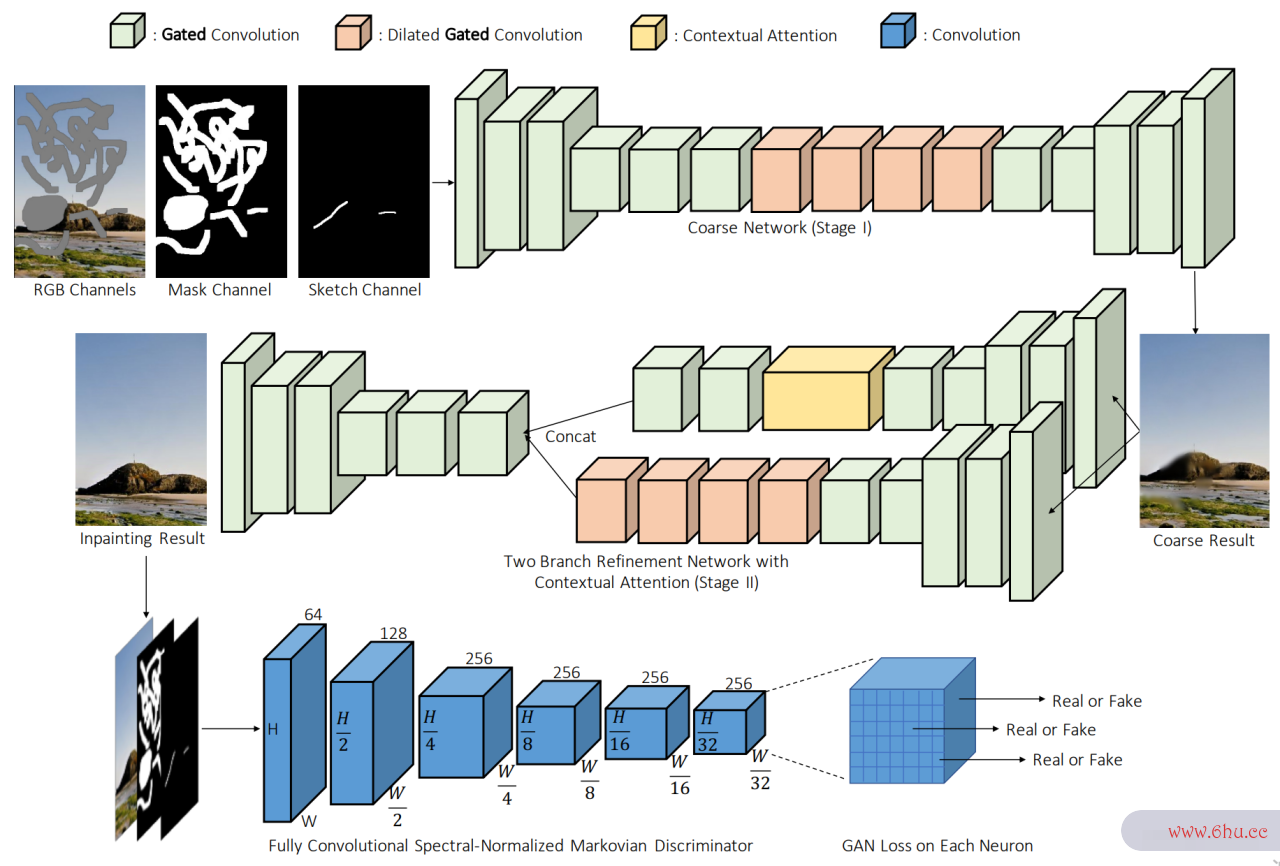

Paper: Free-Form Image Inpainting with Gated Convolution

Title: Free-Form Image Inpainting with Gated Convolution

Date: ICCV 2019

Field: Computer Vision

Tasks: Feature Selection, Image Inpainting

Paper link: arxiv.org/abs/1806.03…

Code: github.com/JiahuiYu/ge… , github.com/avalonstrel… , github.com/avalonstrel… , github.com/csqiangwen/… , github.com/zuruoke/wat…

Authors: Jiahui Yu, Zhe Lin, Jimei Yang, Xiaohui Shen, Xin Lu, Thomas Huang

Summary: We present a generative image inpainting system to complete images with free-form mask and guidance.

Abstract: We present a generative image inpainting system to complete images with free-form mask and guidance. The system is based on gated convolutions learned from millions of images without additional labelling efforts. The proposed gated convolution solves the issue of vanilla convolution that treats all input pixels as valid ones, generalizes partial convolution by providing a learnable dynamic feature selection mechanism for each channel at each spatial location across all layers. Moreover, as free-form masks may appear anywhere in images with any shape, global and local GANs designed for a single rectangular mask are not applicable. Thus, we also present a patch-based GAN loss, named SN-PatchGAN, by applying spectral-normalized discriminator on dense image patches. SN-PatchGAN is simple in formulation, fast and stable in training. Results on automatic image inpainting and user-guided extension demonstrate that our system generates higher-quality and more flexible results than previous methods. Our system helps users quickly remove distracting objects, modify image layouts, clear watermarks and edit faces. Code, demo and models are available at github.com/JiahuiYu/ge…
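The gating idea can be shown on a 1D signal. In this sketch one filter produces features and a second filter produces a gate in (0, 1) through a sigmoid; the output is their elementwise product, so the gate can suppress positions such as masked pixels. The 1D setting, the tanh feature activation, and all names are simplifications chosen for illustration, not the paper's network.

```python
import math
from typing import List


def conv1d_valid(signal: List[float], kernel: List[float], bias: float = 0.0) -> List[float]:
    """Sliding dot product without padding; output length is len(signal) - len(kernel) + 1."""
    n_out = len(signal) - len(kernel) + 1
    return [
        sum(signal[i + j] * kernel[j] for j in range(len(kernel))) + bias
        for i in range(n_out)
    ]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def gated_conv1d(
    signal: List[float],
    feature_kernel: List[float],
    gate_kernel: List[float],
    gate_bias: float = 0.0,
) -> List[float]:
    """Output = activation(feature conv) * sigmoid(gate conv), position by position.

    Both kernels must have the same length so the two outputs line up.
    """
    if len(feature_kernel) != len(gate_kernel):
        raise ValueError("feature and gate kernels must have the same length")
    features = conv1d_valid(signal, feature_kernel)
    gates = conv1d_valid(signal, gate_kernel, gate_bias)
    return [math.tanh(f) * sigmoid(g) for f, g in zip(features, gates)]
```

Unlike a fixed binary mask, the gate here is computed from the input by its own filter, which is what makes the selection learnable and different at every position.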

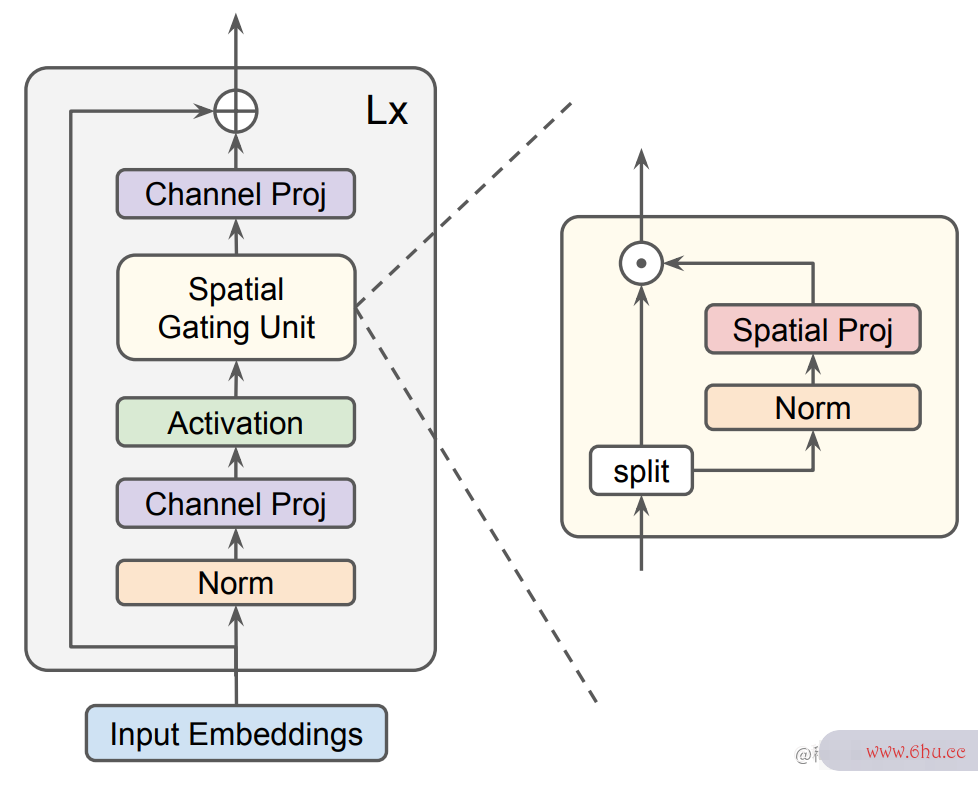

Paper: The GatedTabTransformer. An enhanced deep learning architecture for tabular modeling

Title: The GatedTabTransformer. An enhanced deep learning architecture for tabular modeling

Date: 1 Jan 2022

Paper link: arxiv.org/abs/2201.00…

Code: github.com/radi-cho/ga…

Authors: Radostin Cholakov, Todor Kolev

Summary: There is an increasing interest in the application of deep learning architectures to tabular data.

Abstract: There is an increasing interest in the application of deep learning architectures to tabular data. One of the state-of-the-art solutions is TabTransformer which incorporates an attention mechanism to better track relationships between categorical features and then makes use of a standard MLP to output its final logits. In this paper we propose multiple modifications to the original TabTransformer performing better on binary classification tasks for three separate datasets with more than 1% AUROC gains. Inspired by gated MLP, linear projections are implemented in the MLP block and multiple activation functions are tested. We also evaluate the importance of specific hyperparameters during training.
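Since the reported gains are measured in AUROC, a small reference implementation may help interpret them. AUROC equals the probability that a randomly chosen positive example is scored above a randomly chosen negative one, with ties counted as one half. The pairwise function below is an illustrative O(n²) sketch, not the evaluation code used by the authors.

```python
from typing import Sequence


def auroc(labels: Sequence[int], scores: Sequence[float]) -> float:
    """Area under the ROC curve for binary labels (1 = positive, 0 = negative).

    Computed as the fraction of (positive, negative) pairs in which the
    positive example has the higher score; ties count as half a win.
    """
    positives = [s for y, s in zip(labels, scores) if y == 1]
    negatives = [s for y, s in zip(labels, scores) if y == 0]
    if not positives or not negatives:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(positives) * len(negatives))
```

A value of 0.5 corresponds to random ranking and 1.0 to a perfect ranking, so a gain of one percentage point means roughly one more positive-negative pair in a hundred is ordered correctly.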

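We are ShowMeAI, dedicated to spreading high-quality AI content, sharing industry solutions, and using knowledge to speed up every step of technical growth. See the article archive, and subscribe to the topic #ShowMeAI资讯日报 in the official account to receive the latest daily updates. See Topic Collections & E-Monthly to browse the full set for each topic. Reply with the keyword 日报 to get the AI e-monthly and resource pack for free.

- Author: 韩信子@ShowMeAI

- Article archive

- Topic Collections & E-Monthly

- Notice: All rights reserved. To repost, please contact the platform and the author and credit the source. Replies and likes are welcome; leave a comment to recommend valuable articles, tools, or suggestions, and we will reply as soon as we can.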